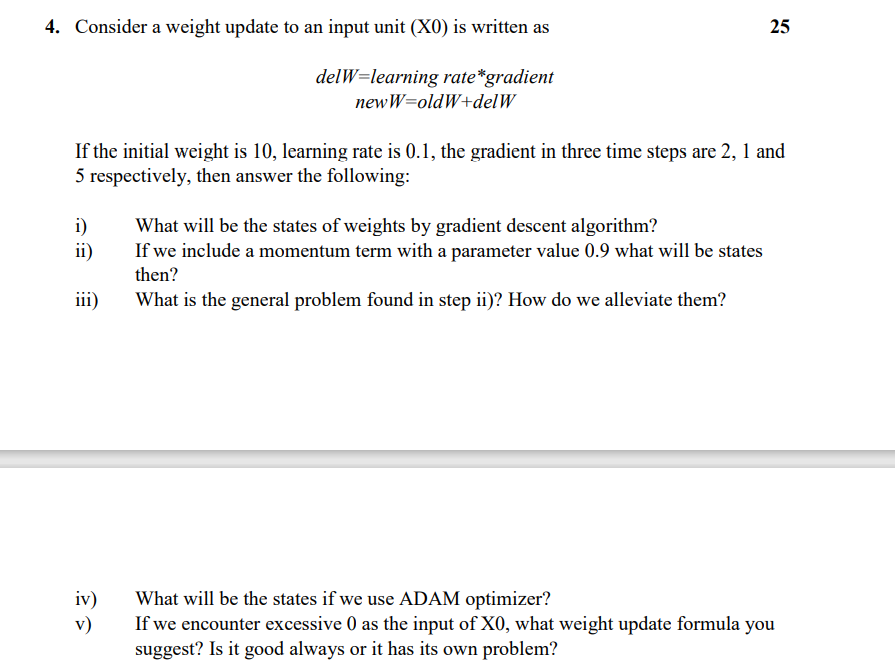

Question:

4. Consider a weight update to an input unit (X0) written as

delW = learning rate * gradient

newW = oldW + delW

If the initial weight is 10, the learning rate is 0.1, and the gradients at three time steps are 2, 1 and 5 respectively, answer the following: [25]

i) What will be the states of the weight under the gradient descent algorithm?

ii) If we include a momentum term with a parameter value of 0.9, what will the states be then?

iii) What is the general problem found in step ii)? How do we alleviate it?

iv) What will the states be if we use the ADAM optimizer?

v) If we encounter excessive 0s as the input of X0, what weight update formula would you suggest? Is it always good, or does it have its own problems?
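The arithmetic for parts i), ii) and iv) can be checked with a short Python sketch. It is not an official solution, and it rests on several assumptions that the question does not state: the update is applied with the plus sign exactly as written (`newW = oldW + delW`; pass `sign=-1.0` for the conventional descent direction), the momentum velocity starts at 0 and follows `v = 0.9 * v + delW`, and ADAM uses the common defaults `beta1=0.9`, `beta2=0.999`, `eps=1e-8` with bias correction. The function and parameter names are illustrative only.

```python
import math


def gradient_descent(w, lr, grads, sign=1.0):
    """Plain update: delW = lr * gradient, newW = oldW + sign * delW."""
    states = []
    for g in grads:
        w = w + sign * lr * g
        states.append(w)
    return states


def momentum(w, lr, grads, beta=0.9, sign=1.0):
    """Momentum update, assuming the velocity starts at 0."""
    states = []
    velocity = 0.0
    for g in grads:
        # Carry over a fraction of the previous step, then add the new one.
        velocity = beta * velocity + lr * g
        w = w + sign * velocity
        states.append(w)
    return states


def adam(w, lr, grads, beta1=0.9, beta2=0.999, eps=1e-8, sign=1.0):
    """ADAM update with bias correction; betas and eps are assumed defaults."""
    states = []
    m = 0.0  # running mean of gradients (first moment)
    v = 0.0  # running mean of squared gradients (second moment)
    for t, g in enumerate(grads, start=1):
        m = beta1 * m + (1.0 - beta1) * g
        v = beta2 * v + (1.0 - beta2) * g * g
        m_hat = m / (1.0 - beta1 ** t)  # bias-corrected first moment
        v_hat = v / (1.0 - beta2 ** t)  # bias-corrected second moment
        w = w + sign * lr * m_hat / (math.sqrt(v_hat) + eps)
        states.append(w)
    return states


if __name__ == "__main__":
    w0, lr, grads = 10.0, 0.1, [2.0, 1.0, 5.0]
    for name, fn in [("gradient descent", gradient_descent),
                     ("momentum", momentum),
                     ("adam", adam)]:
        print(name, [round(x, 4) for x in fn(w0, lr, grads)])
```

Under these assumptions the plain update gives 10.2, 10.3, 10.8 and the momentum update gives 10.2, 10.48, 11.232; the ADAM states come out at roughly 10.1, 10.193 and 10.281. With `sign=-1.0` the same step sizes are subtracted instead of added.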
