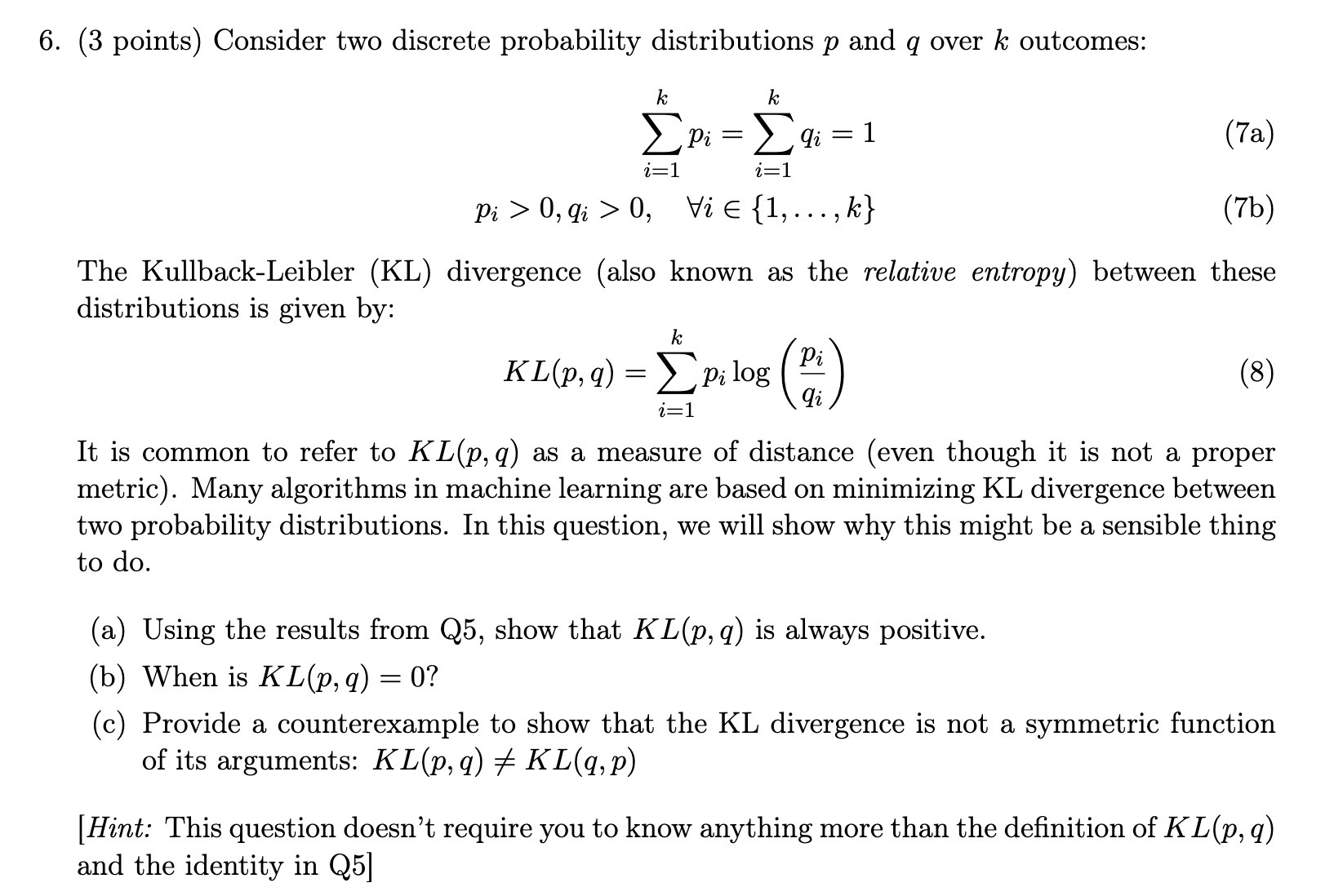

6. (3 points) Consider two discrete probability distributions p and q over k outcomes:

$$\sum_{i=1}^{k} p_i = \sum_{i=1}^{k} q_i = 1 \tag{7a}$$

$$p_i > 0, \quad q_i > 0, \quad \forall i \in \{1, \dots, k\} \tag{7b}$$

The Kullback-Leibler (KL) divergence (also known as the relative entropy) between these distributions is given by:

$$KL(p, q) = \sum_{i=1}^{k} p_i \log\left(\frac{p_i}{q_i}\right) \tag{8}$$

It is common to refer to KL(p, q) as a measure of distance (even though it is not a proper metric). Many algorithms in machine learning are based on minimizing the KL divergence between two probability distributions. In this question, we will show why this might be a sensible thing to do.

(a) Using the results from Q5, show that KL(p, q) is always non-negative.

(b) When is KL(p, q) = 0?

(c) Provide a counterexample to show that the KL divergence is not a symmetric function of its arguments: KL(p, q) ≠ KL(q, p).

[Hint: This question doesn't require you to know anything more than the definition of KL(p, q) and the identity in Q5.]
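As a numerical sanity check of parts (a) to (c) (not a proof), the sketch below evaluates equation (8) directly. It assumes the natural logarithm, since the question does not fix the base (changing the base only rescales the value). The helper names `kl_divergence` and `random_distribution`, and the two-outcome distributions p = (0.5, 0.5) and q = (0.9, 0.1), are illustrative choices, not part of the question.

```python
import math
import random


def kl_divergence(p, q):
    """KL(p, q) = sum_i p_i * log(p_i / q_i), using the natural log (assumed base).

    Assumes p and q are same-length sequences of strictly positive
    probabilities that each sum to 1, matching constraints (7a) and (7b).
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same number of outcomes")
    if any(pi <= 0 for pi in p) or any(qi <= 0 for qi in q):
        raise ValueError("all probabilities must be strictly positive (7b)")
    if not (math.isclose(sum(p), 1.0) and math.isclose(sum(q), 1.0)):
        raise ValueError("each distribution must sum to 1 (7a)")
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def random_distribution(k):
    """Hypothetical helper: a random strictly positive distribution over k outcomes."""
    weights = [random.random() + 1e-12 for _ in range(k)]  # offset keeps every weight > 0
    total = sum(weights)
    return [w / total for w in weights]


# Part (c): a two-outcome counterexample to symmetry.
p = [0.5, 0.5]
q = [0.9, 0.1]
print(f"KL(p, q) = {kl_divergence(p, q):.4f}")
print(f"KL(q, p) = {kl_divergence(q, p):.4f}")

# Part (b): identical distributions give zero divergence.
print(f"KL(p, p) = {kl_divergence(p, p)}")

# Part (a): on random pairs, KL stays non-negative (up to float rounding).
random.seed(0)
samples = [
    kl_divergence(random_distribution(5), random_distribution(5)) for _ in range(1000)
]
print(f"smallest KL over 1000 random pairs: {min(samples):.6f}")
```

For this pair, KL(p, q) = ln(5/3) ≈ 0.5108 while KL(q, p) = 0.9 ln(1.8) + 0.1 ln(0.2) ≈ 0.3681, so swapping the arguments changes the value, and KL(p, p) evaluates to exactly 0.0 because every log term is log 1 = 0.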
