Question: 6 Risk Minimization with Doubt Suppose we have a classification problem with classes labeled 1 , dots,c and an additional doubt category labeled c +

Risk Minimization with Doubt

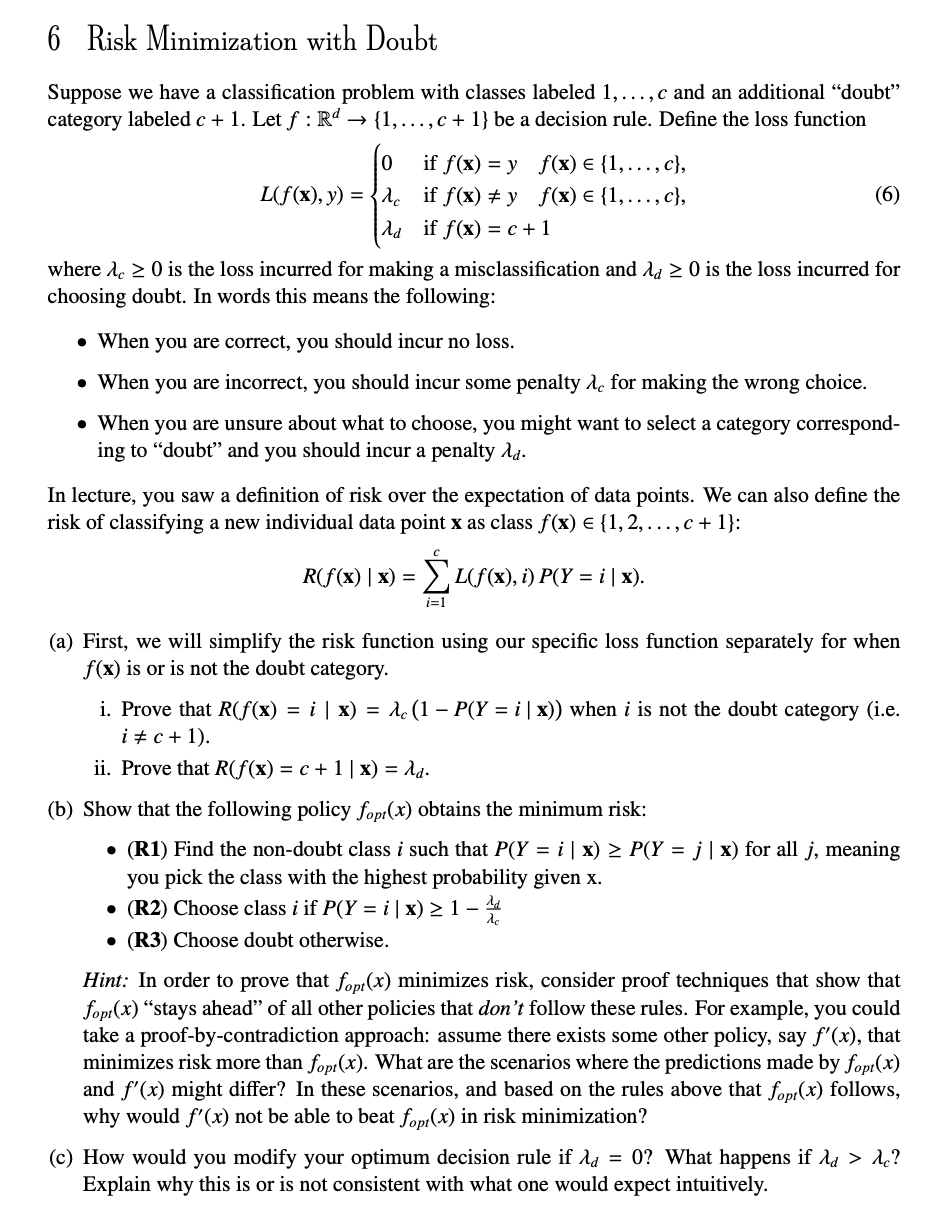

Suppose we have a classification problem with classes labeled dots,c and an additional "doubt"

category labeled c Let f:Rddots,c be a decision rule. Define the loss function

Lfxy if fxyfxindots,clambda c if fxyfxindots,clambda d if fxc:

where lambda c is the loss incurred for making a misclassification and lambda d is the loss incurred for

choosing doubt. In words this means the following:

When you are correct, you should incur no loss.

When you are incorrect, you should incur some penalty lambda c for making the wrong choice.

When you are unsure about what to choose, you might want to select a category correspond

ing to "doubt" and you should incur a penalty lambda d

In lecture, you saw a definition of risk over the expectation of data points. We can also define the

risk of classifying a new individual data point x as class fxindots,c :

Rfxxsumic LfxiPYix

a First, we will simplify the risk function using our specific loss function separately for when

fx is or is not the doubt category.

i Prove that Rfxixlambda cPYix when iic

ii Prove that Rfxcxlambda d

b Show that the following policy fopt x obtains the minimum risk:

R Find the nondoubt class i such that PYixPYjx for all j meaning

you pick the class with the highest probability given x

R Choose class i if PYixlambda dlambda c

R Choose doubt otherwise.

Hint: In order to prove that fopt x minimizes risk, consider proof techniques that show that

fopt x "stays ahead" of all other policies that don't follow these rules. For example, you could

take a proofbycontradiction approach: assume there exists some other policy, say fx that

minimizes risk more than fopt x What are the scenarios where the predictions made by fopt x

and fx might differ? In these scenarios, and based on the rules above that fopt x follows,

why would fx not be able to beat fopt x in risk minimization?

c How would you modify your optimum decision rule if lambda d What happens if lambda dlambda c

Explain why this is or is not consistent with what one would expect intuitively.

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock