Question: a) Consider a text sequence with 3 words, such that each word is represented by a 2-d vector as given in the matrix

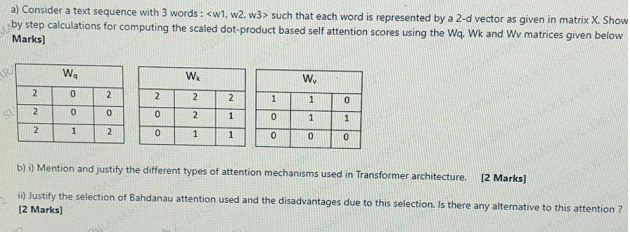

Show step-by-step calculations for computing the scaled dot-product self-attention scores using the Wq, Wk and Wv matrices given below.
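The calculation asked for in part (a) can be sketched in code. This is only a minimal illustration, not the expected answer: the actual input matrix and the Wq, Wk, Wv values are in the image and are not reproduced here, so the numbers below are placeholders chosen for illustration. The sketch also assumes the common row-vector convention (Q = X·Wq, and likewise for K and V); the helper names `matmul`, `transpose`, `softmax` and `self_attention` are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X, Wq, Wk, Wv):
    # Step 1: project the inputs into queries, keys and values.
    Q = matmul(X, Wq)
    K = matmul(X, Wk)
    V = matmul(X, Wv)
    # Step 2: raw scores Q K^T, scaled by sqrt(d_k).
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    # Step 3: row-wise softmax gives the attention weights.
    weights = [softmax(row) for row in scaled]
    # Step 4: weighted sum of the value vectors.
    output = matmul(weights, V)
    return scaled, weights, output

# PLACEHOLDER values (assumptions): 3 words, each a 2-d vector,
# and 2x2 identity projections. Replace with the matrices from the question.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Wq = [[1.0, 0.0], [0.0, 1.0]]
Wk = [[1.0, 0.0], [0.0, 1.0]]
Wv = [[1.0, 0.0], [0.0, 1.0]]

scaled, weights, output = self_attention(X, Wq, Wk, Wv)
for name, m in (("scaled scores", scaled), ("weights", weights), ("output", output)):
    print(name, [[round(v, 3) for v in row] for row in m])
```

With 3 words and 2-d projections, the scaled score matrix and the weight matrix are both 3×3 (one row per query word), and the output is 3×2. Each row of the weight matrix sums to 1.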

Marks

b) i) Mention and justify the different types of attention mechanisms used in the Transformer architecture.

Marks

ii) Justify the selection of Bahdanau attention and state the disadvantages due to this selection. Is there any alternative to this attention?

Marks
