Question: a) Suppose we are training a neural network with one linear output unit (i.e. its output is same as its net input) and no hidden

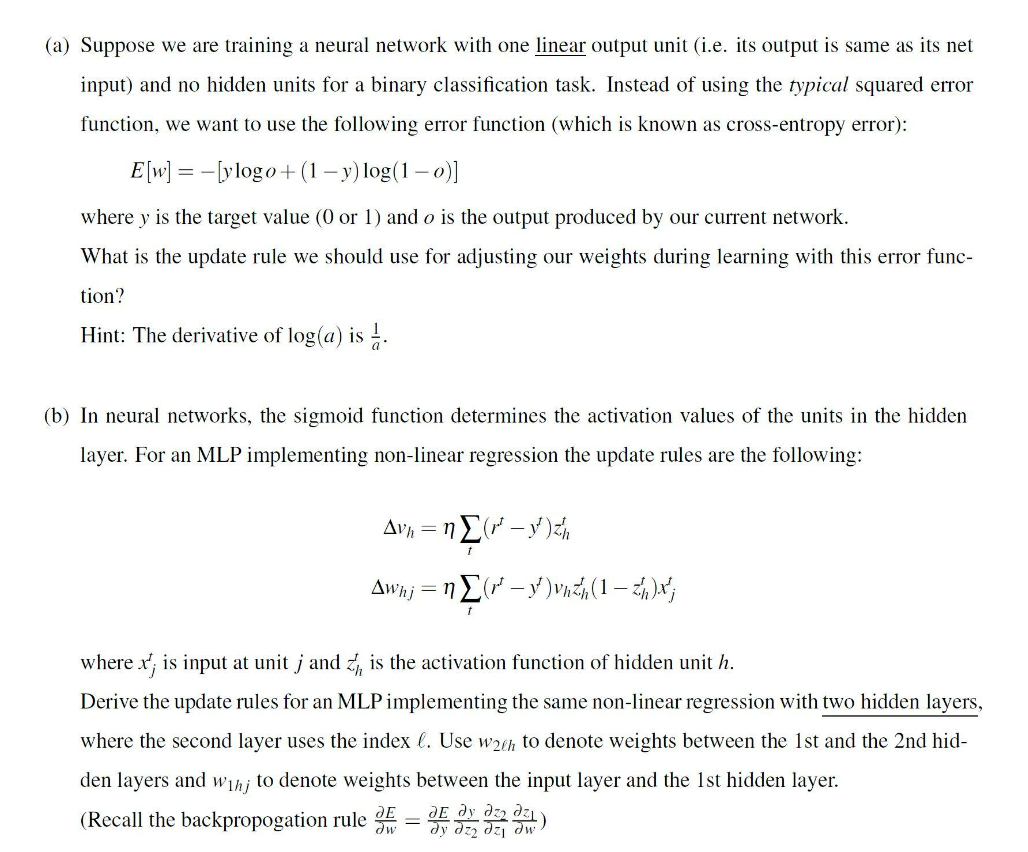

a) Suppose we are training a neural network with one linear output unit (i.e. its output is same as its net input) and no hidden units for a binary classification task. Instead of using the typical squared error function, we want to use the following error function (which is known as cross-entropy error): E[w]=[ylogo+(1y)log(1o)] where y is the target value ( 0 or 1 ) and o is the output produced by our current network. What is the update rule we should use for adjusting our weights during learning with this error function? Hint: The derivative of log(a) is a1. b) In neural networks, the sigmoid function determines the activation values of the units in the hidden layer. For an MLP implementing non-linear regression the update rules are the following: vhwhj=t(rtyt)zht=t(rtyt)vhzht(1zht)xjt where xjt is input at unit j and zht is the activation function of hidden unit h. Derive the update rules for an MLP implementing the same non-linear regression with two hidden layers, where the second layer uses the index . Use w2h to denote weights between the 1 st and the 2 nd hidden layers and w1hj to denote weights between the input layer and the 1st hidden layer. (Recall the backpropogation rule wE=yEz2yz1z2wz1 )

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts