Question:

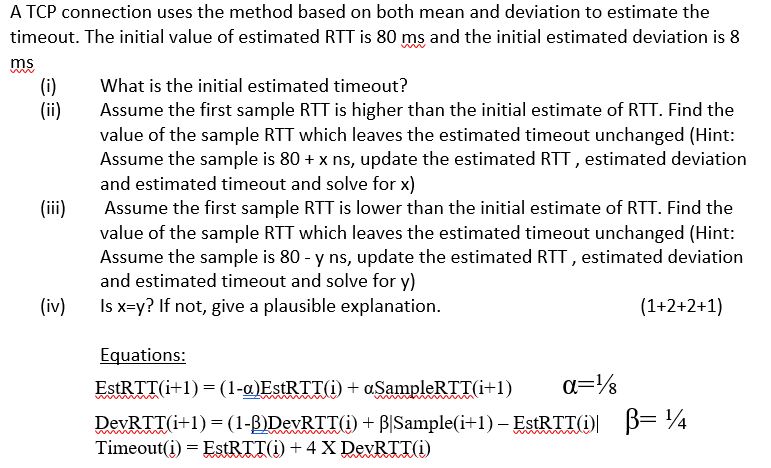

A TCP connection uses the method based on both the mean and the deviation of the RTT to estimate the timeout. The initial estimated RTT is 80 ms and the initial estimated deviation is 8 ms.

(i) What is the initial estimated timeout?

(ii) Assume the first sample RTT is higher than the initial estimated RTT. Find the value x of the sample RTT that leaves the estimated timeout unchanged.

(iii) Assume the first sample RTT is lower than the initial estimated RTT. Find the value y of the sample RTT that leaves the estimated timeout unchanged.

(iv) Is x = y? If not, give a plausible explanation.

Equations:

$$\text{EstRTT}(i+1) = (1 - \alpha)\,\text{EstRTT}(i) + \alpha\,\text{SampleRTT}(i+1), \qquad \alpha = \tfrac{1}{8}$$

$$\text{DevRTT}(i+1) = (1 - \beta)\,\text{DevRTT}(i) + \beta\,\lvert \text{SampleRTT}(i+1) - \text{EstRTT}(i) \rvert, \qquad \beta = \tfrac{1}{4}$$

$$\text{Timeout}(i) = \text{EstRTT}(i) + 4 \times \text{DevRTT}(i)$$
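One way to work the numbers is sketched below, not an official answer key. It assumes the deviation update uses the absolute difference |SampleRTT − EstRTT| (the standard Jacobson/Karels form), writes S for the first sample RTT (a symbol introduced here), and takes x and y as the answers to (ii) and (iii).

```latex
\begin{aligned}
\text{(i)}\quad & \text{Timeout}(0) = 80 + 4 \times 8 = 112\ \text{ms} \\[4pt]
& \text{EstRTT}(1) = \tfrac{7}{8}(80) + \tfrac{1}{8}S = 70 + \tfrac{S}{8} \\
& \text{DevRTT}(1) = \tfrac{3}{4}(8) + \tfrac{1}{4}\lvert S - 80 \rvert = 6 + \tfrac{1}{4}\lvert S - 80 \rvert \\
& \text{Timeout}(1) = 70 + \tfrac{S}{8} + 24 + \lvert S - 80 \rvert = 94 + \tfrac{S}{8} + \lvert S - 80 \rvert \\[4pt]
\text{(ii)}\quad & S > 80:\ 94 + \tfrac{S}{8} + (S - 80) = 112
  \;\Rightarrow\; \tfrac{9S}{8} = 98
  \;\Rightarrow\; x = \tfrac{784}{9} \approx 87.11\ \text{ms} \\[4pt]
\text{(iii)}\quad & S < 80:\ 94 + \tfrac{S}{8} + (80 - S) = 112
  \;\Rightarrow\; \tfrac{7S}{8} = 62
  \;\Rightarrow\; y = \tfrac{496}{7} \approx 70.86\ \text{ms}
\end{aligned}
```

For (iv), x ≠ y: x lies 64/9 ≈ 7.11 ms above 80 ms, while y lies 64/7 ≈ 9.14 ms below it. One plausible explanation: with d = |S − 80|, the timeout changes by ±d/8 + d − 8 ms, where the −8 ms comes from the old deviation's contribution shrinking from 4 × 8 = 32 ms to 4 × 6 = 24 ms. The deviation term grows with d on either side of the estimate, but EstRTT moves toward the sample. A high sample raises both terms, so a smaller d is enough to offset the 8 ms drop. A low sample pulls EstRTT down, partly cancelling the deviation increase, so a larger d is needed.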

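A minimal Python sketch that evaluates parts (i) to (iv) with exact rational arithmetic (`fractions.Fraction`), so the "timeout unchanged" condition can be checked with `==` rather than a floating-point tolerance. It assumes the absolute-value form of the deviation update and that DevRTT is computed against the old EstRTT, as in DevRTT(i+1) using EstRTT(i). The helper names `timeout`, `update`, and `unchanged_sample` are hypothetical, introduced only for this illustration.

```python
from fractions import Fraction

# Parameters from the question; all times are in milliseconds.
ALPHA = Fraction(1, 8)   # weight of a new sample in EstRTT
BETA = Fraction(1, 4)    # weight of a new deviation in DevRTT
EST0 = Fraction(80)      # initial EstRTT
DEV0 = Fraction(8)       # initial DevRTT


def timeout(est, dev):
    """Timeout = EstRTT + 4 * DevRTT."""
    return est + 4 * dev


def update(est, dev, sample):
    """One update step (hypothetical helper).

    Assumes the absolute-value deviation update; DevRTT is computed
    against the *old* EstRTT, matching DevRTT(i+1) using EstRTT(i).
    """
    new_dev = (1 - BETA) * dev + BETA * abs(sample - est)
    new_est = (1 - ALPHA) * est + ALPHA * sample
    return new_est, new_dev


def unchanged_sample(sign):
    """Sample RTT above (sign=+1) or below (sign=-1) EST0 that keeps the timeout fixed.

    With d = |S - EST0|:
        Timeout(1) - Timeout(0) = sign*ALPHA*d + 4*BETA*d - 4*BETA*DEV0,
    which is linear in d, so set it to zero and solve for d.
    """
    d = 4 * BETA * DEV0 / (sign * ALPHA + 4 * BETA)
    return EST0 + sign * d


t0 = timeout(EST0, DEV0)
x = unchanged_sample(+1)
y = unchanged_sample(-1)

# Sanity check: feeding either sample through one update leaves the timeout at t0.
for s in (x, y):
    assert timeout(*update(EST0, DEV0, s)) == t0

print("(i)   initial timeout:", t0, "ms")                      # 112 ms
print("(ii)  x =", x, "ms, about", round(float(x), 2), "ms")   # 784/9, about 87.11
print("(iii) y =", y, "ms, about", round(float(y), 2), "ms")   # 496/7, about 70.86
print("(iv)  x == y?", x == y)                                 # False
```

Solving for d in closed form works here because, once the side of the estimate is fixed, the change in timeout is linear in the sample. The `sign` parameter makes the asymmetry in (iv) explicit: only the ALPHA term flips sign, while the deviation term does not.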