Question: AdaBoost (Adaptive Boosting) is another approach to the ensemble method field. It always uses the entire data and features (unlike before)

AdaBoost (Adaptive Boosting) is another approach to the ensemble method field. It always uses the entire data and all features (unlike before) and aims to create weighted classifiers (unlike before, where each classifier had the same influence). The new classification is decided by the sign of a linear combination of all the classifiers:

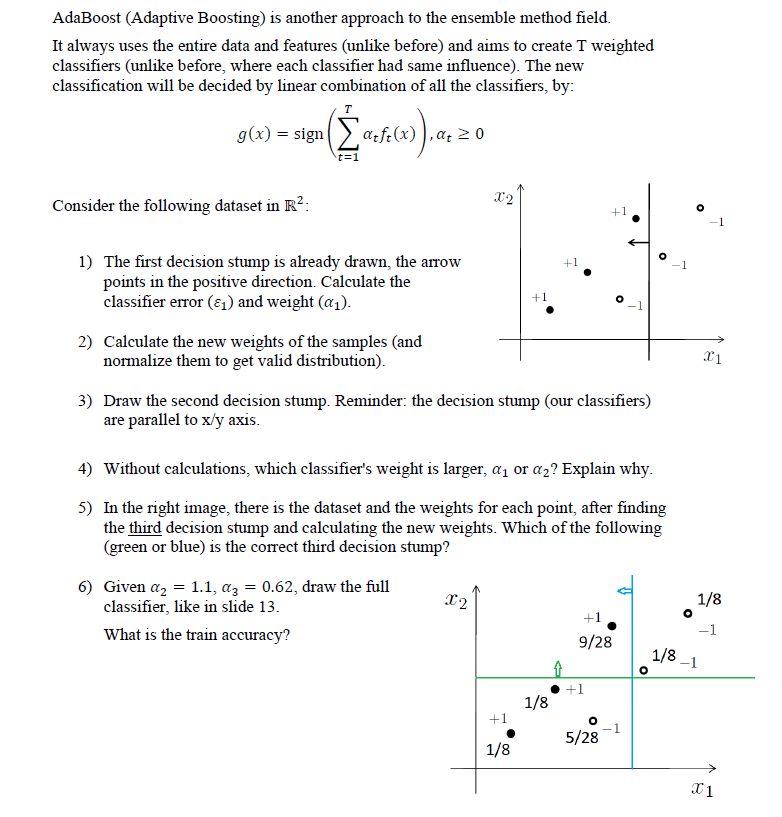
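For reference, the sign-of-weighted-sum rule can be sketched in a few lines of Python. This is only a minimal sketch under assumptions: the names `alphas`, `stump_outputs`, `ensemble_predict` and `train_accuracy` are hypothetical, labels are taken to be in {-1, +1}, and a zero sum is mapped to +1 as an arbitrary tie-breaking choice. The course's own notation is in the image above.

```python
def ensemble_predict(alphas, stump_outputs):
    """Combine classifier outputs by the sign of their weighted sum.

    alphas: one weight per classifier (hypothetical name).
    stump_outputs: one list per classifier, each holding that
    classifier's predictions in {-1, +1} for every sample.
    """
    n_samples = len(stump_outputs[0])
    predictions = []
    for i in range(n_samples):
        # Linear combination of all classifiers for sample i.
        score = sum(a * h[i] for a, h in zip(alphas, stump_outputs))
        # Assumption: a tie (score == 0) is broken in favour of +1.
        predictions.append(1 if score >= 0 else -1)
    return predictions


def train_accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)
```

With three classifiers and their weights in hand, `ensemble_predict` gives the full classifier's labels on the training points, and `train_accuracy` compares them with the true labels.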

Consider the following dataset:

The first decision stump is already drawn; the arrow points in the positive direction. Calculate the classifier's error and weight.

Calculate the new weights of the samples and normalize them to get a valid distribution.

Draw the second decision stump. Reminder: the decision stumps (our classifiers) are parallel to the axes.

Without calculations, which classifier's weight is larger, the first stump's or the second's? Explain why.

The right image shows the dataset and the weight of each point after finding the third decision stump and calculating the new weights. Which of the following (green or blue) is the correct third decision stump?

Given …, draw the full classifier, like in slide …

What is the train accuracy?
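The error, classifier weight, and sample reweighting asked for above can be sketched as one AdaBoost round. This is a hedged sketch, not the course's reference solution: the function name `adaboost_round` is hypothetical, labels are assumed to be in {-1, +1}, and the classifier weight uses the common convention alpha = 0.5 * ln((1 - error) / error). Some courses drop the factor 0.5, so check the slides before reusing the numbers.

```python
import math


def adaboost_round(weights, y_true, y_pred):
    """One AdaBoost reweighting round for a single classifier.

    weights: current sample distribution (non-negative, sums to 1).
    y_true, y_pred: true labels and stump predictions in {-1, +1}.
    Returns (error, alpha, new_weights).
    """
    # Weighted error: total weight of the misclassified samples.
    error = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    if not 0.0 < error < 1.0:
        raise ValueError("error must be strictly between 0 and 1")
    # Assumed convention: alpha = 0.5 * ln((1 - error) / error).
    alpha = 0.5 * math.log((1.0 - error) / error)
    # t * p is -1 for a mistake and +1 for a hit, so mistakes are
    # scaled up by exp(alpha) and hits scaled down by exp(-alpha).
    unnormalized = [w * math.exp(-alpha * t * p)
                    for w, t, p in zip(weights, y_true, y_pred)]
    # Normalize so the new weights form a valid distribution.
    total = sum(unnormalized)
    new_weights = [u / total for u in unnormalized]
    return error, alpha, new_weights
```

As an illustration on made-up data (not the dataset in the figure): with 10 points of weight 0.1 each and 3 of them misclassified, the error is 0.3, each misclassified point ends up with weight 1/6, and each correctly classified point with weight 1/14. Under this convention the misclassified points always carry half of the total weight after normalization.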
