Question: AI Risk Categorization Decoded (AIR 2024): Summary


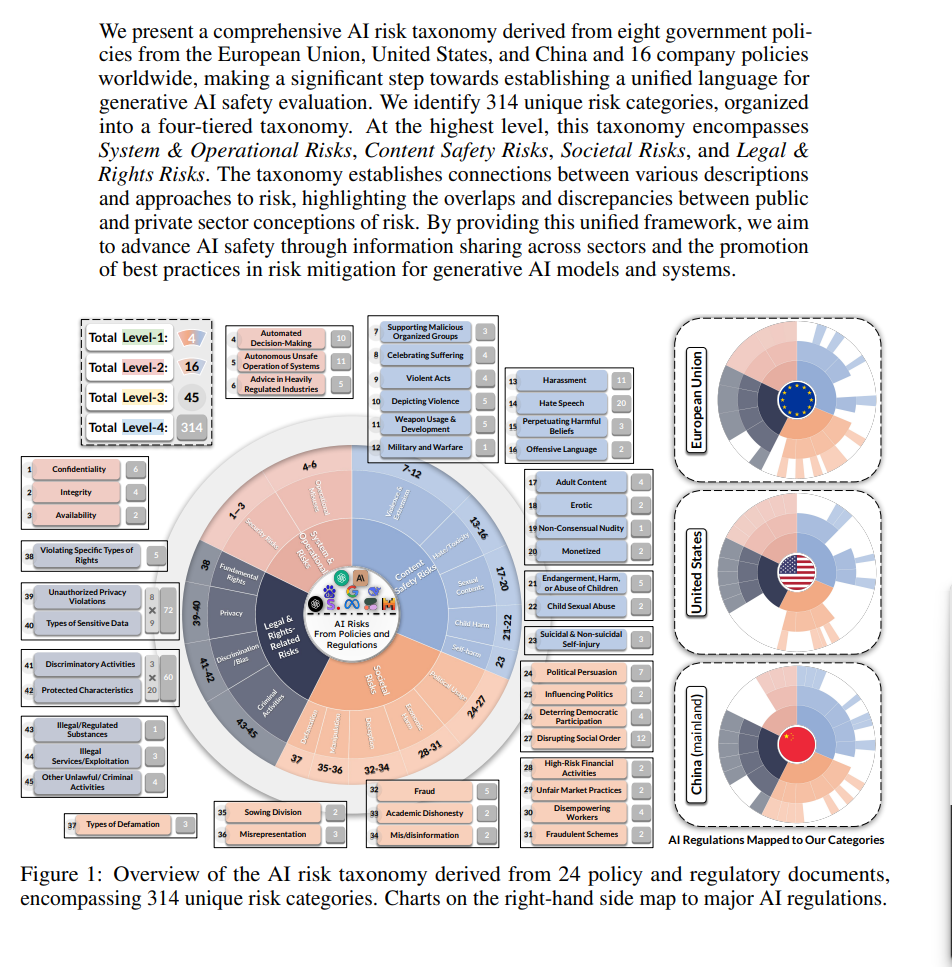

This paper introduces the AI Risk Taxonomy (AIR 2024), a comprehensive framework for categorizing risks associated with generative AI models and systems. It addresses the need for a unified language for evaluating AI safety by drawing on regulations and policies from governments and companies around the world.

Here's a breakdown of the key points:

Motivation: Different sectors (government, industry) use diverse terminology for AI risks, which hinders communication and collaboration. AIR 2024 aims to bridge this gap.

Methodology: The researchers analyzed policies from eight government bodies (EU, US, China) and from companies to compile a set of unique risk categories.

Structure: The taxonomy is hierarchical, with four levels:

Level 1: Broad categories (System & Operational, Content Safety, Societal, Legal & Rights)

Level 2: More specific categories (e.g., Operational Misuses under System & Operational)

Level 3: Even more granular breakdowns (e.g., Automated Decision-Making under Operational Misuses)

Level 4: The most detailed risk categories, explicitly mentioned in policies (e.g., Financing eligibility/Creditworthiness under Automated Decision-Making)
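Because every category has exactly one parent one level up, the four levels form a tree. The following is a minimal Python sketch of that structure, not code from the paper: the `RiskCategory` class, its `add` and `find_path` methods, and the level-0 root node are hypothetical illustrations. The tree holds only the example categories named in the summary; the other Level 1 branches are left empty because their subcategories are not listed here.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RiskCategory:
    """Hypothetical node in a tree model of the four-level taxonomy."""
    name: str
    level: int  # 0 = root (illustrative), 1 = broadest, 4 = most detailed
    children: list[RiskCategory] = field(default_factory=list)

    def add(self, name: str) -> RiskCategory:
        """Create a child category one level deeper and return it."""
        if self.level >= 4:
            raise ValueError("Level 4 is the most detailed level; it has no children")
        child = RiskCategory(name, self.level + 1)
        self.children.append(child)
        return child

    def find_path(self, name: str) -> list[str] | None:
        """Return the names from this node down to `name`, or None if absent."""
        if self.name == name:
            return [self.name]
        for child in self.children:
            sub = child.find_path(name)
            if sub is not None:
                return [self.name] + sub
        return None


def build_example_taxonomy() -> RiskCategory:
    """Build a partial tree containing only the examples from the summary."""
    root = RiskCategory("AIR 2024", level=0)
    system_ops = root.add("System & Operational")
    for name in ("Content Safety", "Societal", "Legal & Rights"):
        root.add(name)  # deeper levels not listed in the summary, left empty
    misuses = system_ops.add("Operational Misuses")
    adm = misuses.add("Automated Decision-Making")
    adm.add("Financing eligibility/Creditworthiness")
    return root


if __name__ == "__main__":
    taxonomy = build_example_taxonomy()
    print(" > ".join(taxonomy.find_path("Financing eligibility/Creditworthiness")))
```

Run as a script, this prints the full path `AIR 2024 > System & Operational > Operational Misuses > Automated Decision-Making > Financing eligibility/Creditworthiness`, showing how each Level 4 category traces back to one broad Level 1 category.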

Benefits of AIR 2024:

Standardized Framework: Enables consistent AI safety evaluations across regions and sectors.

Insights into Corporate Risk Management: Reveals how companies prioritize and perceive AI risks.

Comparative Analysis of Regulations: Helps understand how different governments approach AI governance.

Improved Communication: Provides a common language for policymakers, researchers, and industry leaders.

Safer AI Development and Deployment: Guides efforts to mitigate risks and promote responsible AI practices.

The paper also discusses challenges encountered during taxonomy development, such as the use of varying terminology in different policies. It emphasizes the importance of ongoing updates as regulations and company policies evolve.

Overall, AIR 2024 is a valuable resource for anyone involved in the development, deployment, or governance of generative AI. It promotes a shared understanding of AI risks and facilitates collaboration toward safer and more beneficial AI applications.

Can you analyze the images of the paper?

Figure: Overview of the AI risk taxonomy derived from policy and regulatory documents, encompassing unique risk categories. Charts on the right-hand side map to major AI regulations.
