Question: Artificial Intelligence (Machine learning & Data mining)


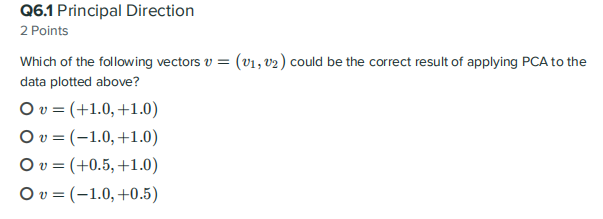

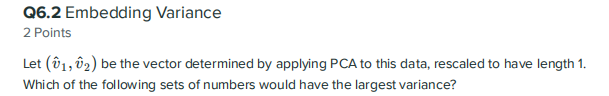

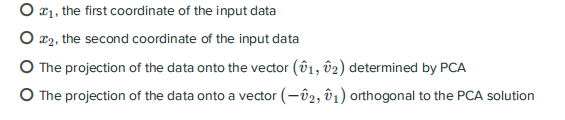

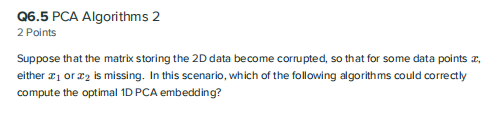

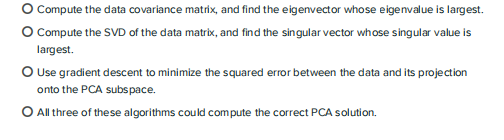

Q6.1 Principal Direction (2 Points)

Which of the following vectors v = (v1, v2) could be the correct result of applying PCA to the data plotted above?

- v = (+1.0, +1.0)
- v = (-1.0, +1.0)
- v = (+0.5, +1.0)
- v = (-1.0, +0.5)

Q6.2 Embedding Variance (2 Points)

Let (w1, w2) be the vector determined by applying PCA to this data, rescaled to have length 1. Which of the following sets of numbers would have the largest variance?

- x1, the first coordinate of the input data
- x2, the second coordinate of the input data
- The projection of the data onto the vector (w1, w2) determined by PCA
- The projection of the data onto a vector (-w2, w1) orthogonal to the PCA solution

Q6.3 Impact of Mean (2 Points)

Suppose that when computing the PCA embedding of this data, rather than using the true data mean, you set μ = (0, 0). How would this impact the variance of the 1D embedding produced by PCA?

- Increase: The embedding with μ = (0, 0) would have larger variance than the PCA embedding with μ = (4, 4)
- Constant: The embedding with μ = (0, 0) would have the same variance as the PCA embedding with μ = (4, 4)
- Decrease: The embedding with μ = (0, 0) would have smaller variance than the PCA embedding with μ = (4, 4)
- Uncertain: Either embedding could possibly have larger variance, depending on detailed properties of the data which cannot be determined from the provided plot

Q6.4 PCA Algorithms 1 (2 Points)

Given a dataset of 2D points like the one plotted above, which of the following algorithms could correctly compute the optimal 1D PCA embedding?

- Compute the data covariance matrix, and find the eigenvector whose eigenvalue is largest.
- Compute the SVD of the data matrix, and find the singular vector whose singular value is largest.
- Use gradient descent to minimize the squared error between the data and its projection onto the PCA subspace.
- All three of these algorithms could compute the correct PCA solution.

Q6.5 PCA Algorithms 2 (2 Points)

Suppose that the matrix storing the 2D data becomes corrupted, so that for some data points x, either x1 or x2 is missing. In this scenario, which of the following algorithms could correctly compute the optimal 1D PCA embedding?

- Compute the data covariance matrix, and find the eigenvector whose eigenvalue is largest.
- Compute the SVD of the data matrix, and find the singular vector whose singular value is largest.
- Use gradient descent to minimize the squared error between the data and its projection onto the PCA subspace.
- All three of these algorithms could compute the correct PCA solution.
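The quantities these questions refer to can be explored numerically. The following is a minimal sketch, not a worked answer. It uses synthetic points scattered around (4, 4), which are an assumption and not the data in the plot, and the helper names (`make_data`, `top_eigenvector`, `gradient_descent_direction`) are hypothetical. It computes a 1D PCA direction from the covariance matrix and by gradient descent on the reconstruction error, then compares variances along several directions. The SVD route is left out only to keep the sketch free of third-party dependencies.

```python
import math
import random

def make_data(n=200, seed=0):
    """Synthetic 2D points near (4, 4); an assumption, not the plotted data."""
    rng = random.Random(seed)
    pts = []
    for _ in range(n):
        t = rng.gauss(0.0, 2.0)  # large spread along direction (1, 0.5)
        e = rng.gauss(0.0, 0.5)  # small spread along direction (-0.5, 1)
        pts.append((4.0 + t - 0.5 * e, 4.0 + 0.5 * t + e))
    return pts

def center(pts):
    """Subtract the sample mean from every point."""
    n = len(pts)
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    return [(x - mx, y - my) for x, y in pts]

def covariance(centered):
    """Entries (sxx, sxy, syy) of the 2x2 covariance matrix of centered data."""
    n = len(centered)
    sxx = sum(x * x for x, _ in centered) / n
    sxy = sum(x * y for x, y in centered) / n
    syy = sum(y * y for _, y in centered) / n
    return sxx, sxy, syy

def top_eigenvector(sxx, sxy, syy):
    """Unit eigenvector of [[sxx, sxy], [sxy, syy]] with the largest eigenvalue."""
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return math.cos(theta), math.sin(theta)

def gradient_descent_direction(sxx, sxy, syy, lr=0.1, steps=500):
    """Minimize mean squared reconstruction error over unit vectors w.

    For centered data the error equals trace(C) - w^T C w, so each step moves
    against its gradient -2 C w and then rescales w back to unit length.
    """
    wx, wy = 1.0, 0.0
    for _ in range(steps):
        gx = -2.0 * (sxx * wx + sxy * wy)
        gy = -2.0 * (sxy * wx + syy * wy)
        wx, wy = wx - lr * gx, wy - lr * gy
        norm = math.hypot(wx, wy)
        wx, wy = wx / norm, wy / norm
    return wx, wy

def variance(values):
    """Population variance of a list of numbers."""
    n = len(values)
    m = sum(values) / n
    return sum((v - m) ** 2 for v in values) / n

def project(centered, w):
    """1D coordinates of the centered points along direction w."""
    return [x * w[0] + y * w[1] for x, y in centered]

pts = make_data()
centered = center(pts)
C = covariance(centered)
w_eig = top_eigenvector(*C)
w_gd = gradient_descent_direction(*C)
w_orth = (-w_eig[1], w_eig[0])  # rotate w_eig by 90 degrees

var_x1 = variance([x for x, _ in pts])
var_x2 = variance([y for _, y in pts])
var_pca = variance(project(centered, w_eig))
var_orth = variance(project(centered, w_orth))

# The two directions may differ by sign, so compare |cosine| rather than entries.
agreement = abs(w_eig[0] * w_gd[0] + w_eig[1] * w_gd[1])
print("eigenvector direction:", w_eig)
print("gradient descent direction:", w_gd)
print("variances (x1, x2, PCA, orthogonal):", var_x1, var_x2, var_pca, var_orth)
```

Because a principal direction is only defined up to sign, w and -w describe the same PCA solution, which is why the comparison uses the absolute value of the dot product. Both routes here assume complete data: the covariance entries are sums over every point, so the sketch does not cover the missing-entry case in Q6.5.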

