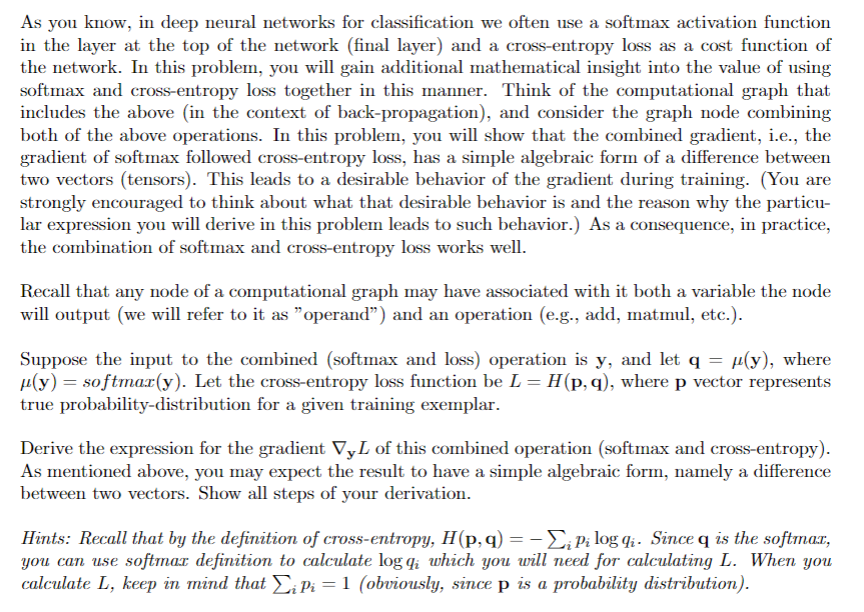

As you know, in deep neural networks for classification we often use a softmax activation function in the layer at the top of the network (the final layer) and a cross-entropy loss as the cost function of the network. In this problem, you will gain additional mathematical insight into the value of using softmax and cross-entropy loss together in this manner. Think of the computational graph that includes the above in the context of backpropagation, and consider the graph node combining both of the above operations. In this problem, you will show that the combined gradient, i.e., the gradient of softmax followed by cross-entropy loss, has a simple algebraic form: a difference between two vectors (tensors). This leads to a desirable behavior of the gradient during training. You are strongly encouraged to think about what that desirable behavior is and why the particular expression you will derive in this problem leads to such behavior. As a consequence, in practice, the combination of softmax and cross-entropy loss works well.

Recall that any node of a computational graph may have associated with it both a variable that the node will output (we will refer to it as the "operand") and an operation (e.g., add, matmul, etc.).

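Suppose the input to the combined softmax and loss operation is $z$, and let $\hat{y} = \mathrm{softmax}(z)$, where $\mathrm{softmax}(z)_i = \dfrac{e^{z_i}}{\sum_j e^{z_j}}$. Let the cross-entropy loss function be $L(y, \hat{y})$, where the vector $y$ represents the true probability distribution for a given training exemplar.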
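The forward pass of the combined node can be sketched in NumPy as below. This is a minimal illustration, not a reference implementation: the names `z` (input to the softmax), `y` (true distribution), and `y_hat` (softmax output) are assumed for illustration, and the example values are hypothetical.

```python
import numpy as np

def softmax(z):
    """Softmax of a 1-D array of inputs (logits)."""
    shifted = z - np.max(z)      # subtracting the max avoids overflow; the result is unchanged
    exp = np.exp(shifted)
    return exp / np.sum(exp)

def cross_entropy(y, y_hat):
    """Cross-entropy between a true distribution y and a predicted distribution y_hat."""
    return -np.sum(y * np.log(y_hat))

z = np.array([2.0, 1.0, 0.1])    # hypothetical input to the softmax
y = np.array([1.0, 0.0, 0.0])    # hypothetical one-hot true distribution
y_hat = softmax(z)               # predicted distribution, sums to 1
loss = cross_entropy(y, y_hat)   # scalar loss for this exemplar
```

With a one-hot `y`, the loss reduces to the negative log of the probability assigned to the true class.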

Derive the expression for the gradient of this combined operation (softmax and cross-entropy), $\partial L / \partial z$. As mentioned above, you may expect the result to have a simple algebraic form, namely a difference between two vectors. Show all steps of your derivation.
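Whatever expression the derivation produces, it can be sanity-checked against a finite-difference gradient. The sketch below assumes the names `z` for the softmax input and `y` for the true distribution, uses random hypothetical values, and tests the candidate form `softmax(z) - y` (one natural reading of "a difference between two vectors").

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))    # shift by the max for numerical stability
    return e / e.sum()

def loss(z, y):
    """Cross-entropy of softmax(z) against the true distribution y."""
    return -np.sum(y * np.log(softmax(z)))

def numerical_grad(z, y, eps=1e-6):
    """Central-difference estimate of dL/dz, one coordinate at a time."""
    grad = np.zeros_like(z)
    for j in range(z.size):
        step = np.zeros_like(z)
        step[j] = eps
        grad[j] = (loss(z + step, y) - loss(z - step, y)) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
z = rng.normal(size=5)           # hypothetical softmax input
y = rng.random(5)
y /= y.sum()                     # normalize so y is a probability distribution

candidate = softmax(z) - y       # candidate closed form for dL/dz
max_abs_diff = np.max(np.abs(numerical_grad(z, y) - candidate))
```

A small `max_abs_diff` (limited by finite-difference error) suggests that the candidate matches the true gradient. It does not replace the derivation.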

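Hints: Recall that by the definition of cross-entropy, $L = -\sum_i y_i \log \hat{y}_i$. Since $\hat{y}$ is the softmax of the input $z$, you can use the softmax definition to calculate $\partial \hat{y}_i / \partial z_j$, which you will need for calculating $\partial L / \partial z_j$. When you calculate $\partial L / \partial z_j$, keep in mind that $\sum_i y_i = 1$, since $y$ is a probability distribution.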
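One possible derivation following the hints is sketched below. It assumes the notation $z$ for the softmax input, $\hat{y} = \mathrm{softmax}(z)$ for the output, $y$ for the true distribution, and $\delta_{ij}$ for the Kronecker delta (1 if $i = j$, 0 otherwise). These symbol names are assumptions made for illustration.

```latex
\begin{align*}
\hat{y}_i &= \frac{e^{z_i}}{\sum_k e^{z_k}}
\quad\Longrightarrow\quad
\frac{\partial \hat{y}_i}{\partial z_j}
  = \hat{y}_i \left( \delta_{ij} - \hat{y}_j \right), \\[4pt]
\frac{\partial L}{\partial z_j}
  &= -\sum_i \frac{y_i}{\hat{y}_i} \, \frac{\partial \hat{y}_i}{\partial z_j}
   = -\sum_i y_i \left( \delta_{ij} - \hat{y}_j \right) \\
  &= -y_j + \hat{y}_j \sum_i y_i
   = \hat{y}_j - y_j
  \qquad \text{(using } \textstyle\sum_i y_i = 1 \text{)}.
\end{align*}
```

In vector form this gives $\nabla_z L = \hat{y} - y$, the difference between the predicted and the true distribution. One way to read the "desirable behavior" is that the factor $1/\hat{y}_i$ from the logarithm cancels the factor $\hat{y}_i$ from the softmax Jacobian. The gradient is therefore proportional to the prediction error and does not shrink merely because the softmax saturates.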
