Question:

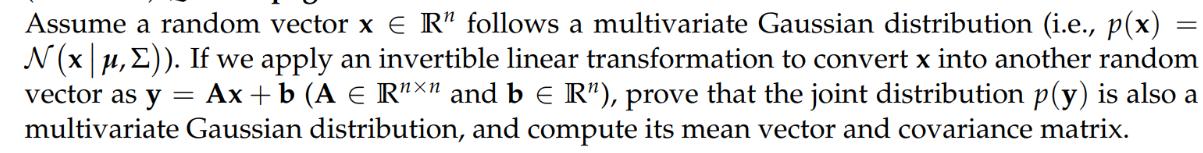

Assume a random vector $x \in \mathbb{R}^n$ follows a multivariate Gaussian distribution (i.e., $p(x) = \mathcal{N}(x \mid \mu, \Sigma)$). If we apply an invertible linear transformation to convert $x$ into another random vector as $y = Ax + b$ ($A \in \mathbb{R}^{n \times n}$ and $b \in \mathbb{R}^n$), prove that the joint distribution $p(y)$ is also a multivariate Gaussian distribution, and compute its mean vector and covariance matrix.

Show that mutual information satisfies the following: $I(X, Y) = H(X) - H(X \mid Y) = H(Y) - H(Y \mid X) = H(X) + H(Y) - H(X, Y)$.

Compute the distance of a point $x_0 \in \mathbb{R}^n$ to b) a unit ball $\|x\| \le 1$; c) an elliptic surface $x^\top A x = 1$, where $A \in \mathbb{R}^{n \times n}$ and $A \succ 0$. Hints: Give a numerical procedure if no closed-form solution exists.

Step by Step Solution


There are 3 steps involved.

Let's break it down step by step. 1. Proving the Gaussian transformation. Step 1.1: Given a random vector $x \in \mathbb{R}^n$ ...
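The answer above is cut off, so what follows is only a sketch of one standard way the first part can be argued, not the original solution. It uses the change-of-variables formula for densities. The symbols $\mu_y$ and $\Sigma_y$ are names introduced here for the mean and covariance of $y$; they do not appear in the question.

```latex
% Since A is invertible, x = A^{-1}(y - b), and the Jacobian of this map has
% absolute determinant |\det A^{-1}| = 1 / |\det A|.
\begin{aligned}
p(y) &= \frac{1}{|\det A|}\, p_x\!\left(A^{-1}(y - b)\right) \\
     &= \frac{1}{(2\pi)^{n/2}\, |\Sigma|^{1/2}\, |\det A|}
        \exp\!\left(-\tfrac{1}{2}\,
        \bigl(A^{-1}(y - b) - \mu\bigr)^{\top} \Sigma^{-1}
        \bigl(A^{-1}(y - b) - \mu\bigr)\right).
\end{aligned}

% Rewrite the argument: A^{-1}(y - b) - \mu = A^{-1}(y - A\mu - b).
% With \mu_y = A\mu + b and \Sigma_y = A \Sigma A^{\top}:
\begin{aligned}
\bigl(A^{-1}(y - \mu_y)\bigr)^{\top} \Sigma^{-1} \bigl(A^{-1}(y - \mu_y)\bigr)
  &= (y - \mu_y)^{\top} A^{-\top} \Sigma^{-1} A^{-1} (y - \mu_y) \\
  &= (y - \mu_y)^{\top} \Sigma_y^{-1} (y - \mu_y), \\
|\Sigma_y| = |A|\,|\Sigma|\,|A^{\top}| = |\det A|^{2}\, |\Sigma|
  \quad &\Longrightarrow \quad
  |\Sigma|^{1/2}\, |\det A| = |\Sigma_y|^{1/2}.
\end{aligned}

% Substituting both identities gives a Gaussian density in y:
p(y) = \frac{1}{(2\pi)^{n/2}\, |\Sigma_y|^{1/2}}
       \exp\!\left(-\tfrac{1}{2}\,(y - \mu_y)^{\top} \Sigma_y^{-1} (y - \mu_y)\right)
     = \mathcal{N}\!\left(y \mid A\mu + b,\; A \Sigma A^{\top}\right).
```

Under this sketch, the mean vector is $A\mu + b$ and the covariance matrix is $A \Sigma A^{\top}$. The covariance stays positive definite because $A$ is invertible and $\Sigma \succ 0$.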
