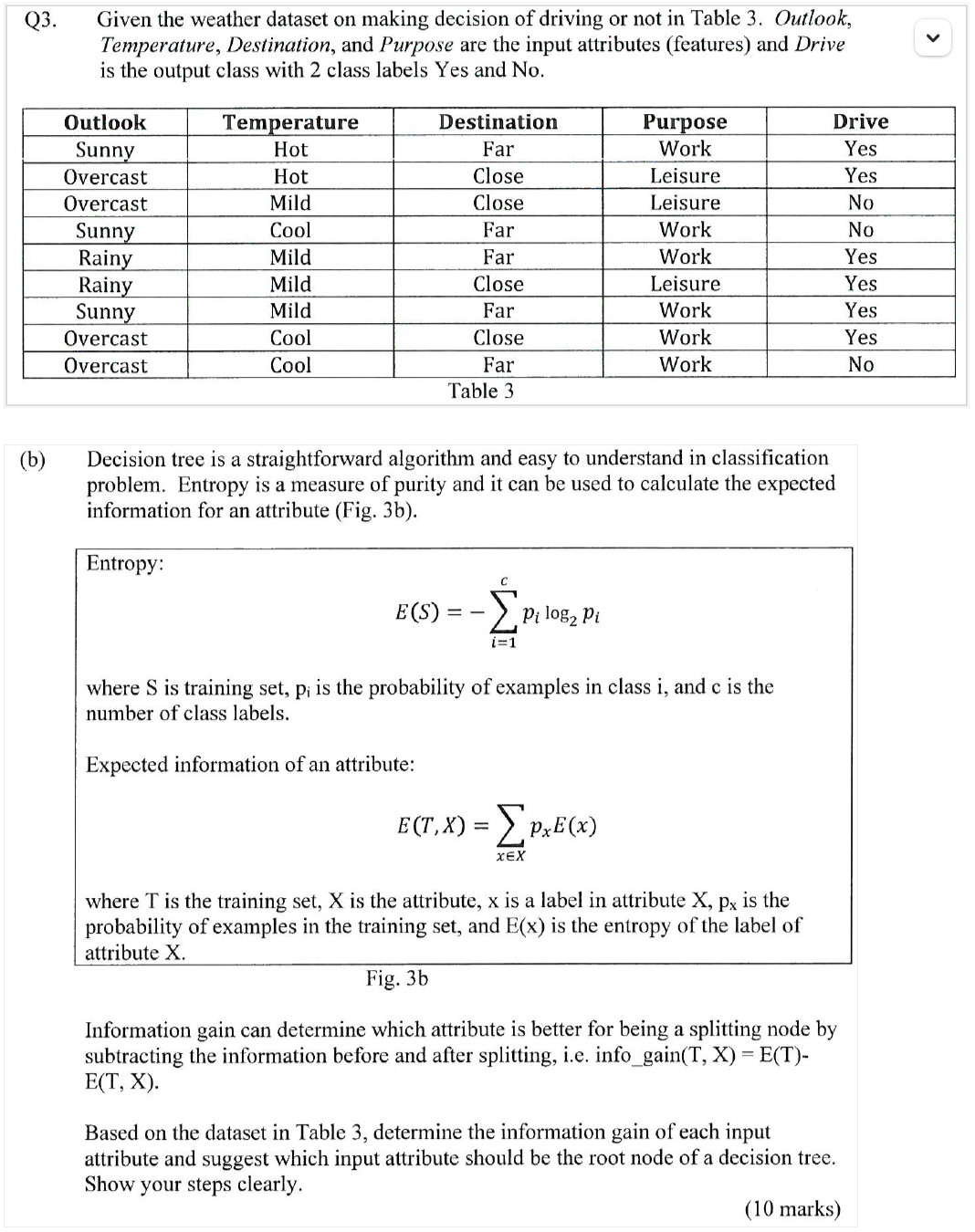

Question (b): A decision tree is a straightforward algorithm that is easy to understand for classification problems. Entropy is a measure of purity, and it can be used to calculate the expected information for an attribute (Fig. b).

Entropy:

$$Entropy(S) = -\sum_{i=1}^{c} p_i \log_2 p_i$$

where $S$ is the training set, $p_i$ is the probability of examples in class $i$, and $c$ is the number of class labels.

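Expected information of an attribute:

$$Info_A(S) = \sum_{v \in A} p_v \, Entropy(S_v)$$

where $S$ is the training set, $A$ is the attribute, $v$ is a label in attribute $A$, $p_v = |S_v| / |S|$ is the probability of examples with label $v$ in the training set, and $Entropy(S_v)$ is the entropy of the label $v$ of attribute $A$.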

Fig. b
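As a rough illustration of the two formulas in Fig. b, here is a minimal Python sketch. The function names `entropy` and `expected_information` and the four-example toy data are assumptions made for illustration; they are not part of the question.

```python
import math
from collections import Counter


def entropy(labels):
    """Entropy of a list of class labels: -sum(p_i * log2(p_i))."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def expected_information(values, labels):
    """Weighted average entropy of the class labels after grouping the
    examples by the value each one takes for a single attribute."""
    n = len(labels)
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(v, []).append(y)
    return sum(len(g) / n * entropy(g) for g in groups.values())


# Hypothetical toy data (not the dataset from the question).
toy_labels = ["yes", "yes", "no", "no"]
toy_attribute = ["a", "a", "b", "b"]

print(entropy(toy_labels))  # 1.0: two equally likely classes
# Each attribute value selects a pure group, so the expected information is zero.
print(expected_information(toy_attribute, toy_labels))
```

An attribute whose values separate the classes perfectly, as in this toy case, leaves no remaining uncertainty after the split.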

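Information gain can determine which attribute is better as a splitting node by subtracting the information after splitting from the information before splitting, i.e. $InfoGain(A) = Entropy(S) - Info_A(S)$, the entropy of the training set $S$ minus the expected information of attribute $A$.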

Based on the dataset in the table, determine the information gain of each input attribute and suggest which input attribute should be the root node of a decision tree. Show your steps clearly.
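The table's data is not reproduced here, so the sketch below uses a small hypothetical dataset (the attribute names `outlook` and `windy`, the target `play`, and all rows are invented) purely to show the steps: compute the entropy of the class labels, the expected information of each attribute, and then the information gain. Under this approach, the attribute with the largest gain would be suggested as the root node.

```python
import math
from collections import Counter


def entropy(labels):
    """Entropy of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows, attribute, target):
    """Entropy before the split minus the expected information after it."""
    labels = [r[target] for r in rows]
    groups = {}
    for r in rows:
        groups.setdefault(r[attribute], []).append(r[target])
    expected = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return entropy(labels) - expected


# Hypothetical rows; substitute the rows of the actual table.
rows = [
    {"outlook": "sunny", "windy": "no", "play": "yes"},
    {"outlook": "sunny", "windy": "yes", "play": "yes"},
    {"outlook": "rainy", "windy": "no", "play": "no"},
    {"outlook": "rainy", "windy": "yes", "play": "no"},
]

gains = {a: information_gain(rows, a, "play") for a in ("outlook", "windy")}
root = max(gains, key=gains.get)  # attribute with the highest information gain
print(gains, root)
```

In this invented example `outlook` separates the classes completely (gain of 1 bit) while `windy` tells us nothing (gain of 0), so `outlook` would be chosen as the root.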
