Question: Coding and Information Theory

Please help! It would be greatly appreciated if I can get help with this question. Thanks!

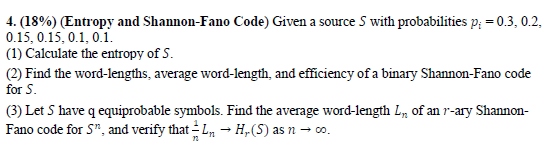

4. (18%) (Entropy and Shannon-Fano Code) Given a source $S$ with probabilities $p_i = 0.3, 0.2, 0.15, 0.15, 0.1, 0.1$.

(1) Calculate the entropy of $S$.

(2) Find the word-lengths, average word-length, and efficiency of a binary Shannon-Fano code for $S$.

(3) Let $S$ have $q$ equiprobable symbols. Find the average word-length $L_n$ of an $r$-ary Shannon-Fano code for $S^n$, and verify that $\frac{1}{n} L_n \to H_r(S)$ as $n \to \infty$.
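A rough Python sketch for checking the numbers in parts (1) to (3). It assumes the definition of a Shannon-Fano code in which symbol $i$ gets word-length $\ell_i = \lceil \log_r(1/p_i) \rceil$; if your course instead uses the recursive splitting construction, the lengths may differ. The function names (`entropy`, `shannon_fano_lengths`, `extension_length`) and the sample values `q = 3`, `r = 2` in part (3) are made up for illustration and are not part of the question.

```python
import math

def entropy(probs, r=2):
    """Entropy of the source in r-ary units (bits when r = 2)."""
    return -sum(p * math.log(p, r) for p in probs if p > 0)

def shannon_fano_lengths(probs, r=2):
    """Assumed definition: smallest integer l_i with r**(-l_i) <= p_i,
    which is the same as l_i = ceil(log_r(1/p_i))."""
    lengths = []
    for p in probs:
        l = 0
        while r ** (-l) > p:
            l += 1
        lengths.append(l)
    return lengths

def extension_length(q, n, r=2):
    """Part (3): every symbol of S^n has probability q**(-n), so every
    word has the same length L_n, the smallest l with r**l >= q**n.
    Integer arithmetic avoids rounding problems in ceil(n * log_r(q))."""
    l = 0
    while r ** l < q ** n:
        l += 1
    return l

# Parts (1) and (2): the source given in the question.
probs = [0.3, 0.2, 0.15, 0.15, 0.1, 0.1]
H = entropy(probs)
lengths = shannon_fano_lengths(probs)
L = sum(p * l for p, l in zip(probs, lengths))
efficiency = H / L

print("H(S)       =", round(H, 3))           # 2.471 bits
print("lengths    =", lengths)               # [2, 3, 3, 3, 4, 4]
print("L(C)       =", round(L, 3))           # 2.9
print("efficiency =", round(efficiency, 3))  # 0.852

# Part (3): illustrative values q = 3, r = 2 (an assumption, not from the question).
q, r = 3, 2
for n in (1, 10, 100):
    print(n, extension_length(q, n, r) / n, math.log(q, r))
```

For part (3) the general argument is that $L_n = \lceil n \log_r q \rceil$, so $n \log_r q \le L_n < n \log_r q + 1$. Dividing by $n$ gives $\frac{1}{n} L_n \to \log_r q = H_r(S)$. The loop at the end only illustrates that convergence for one choice of $q$ and $r$.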
