Question:

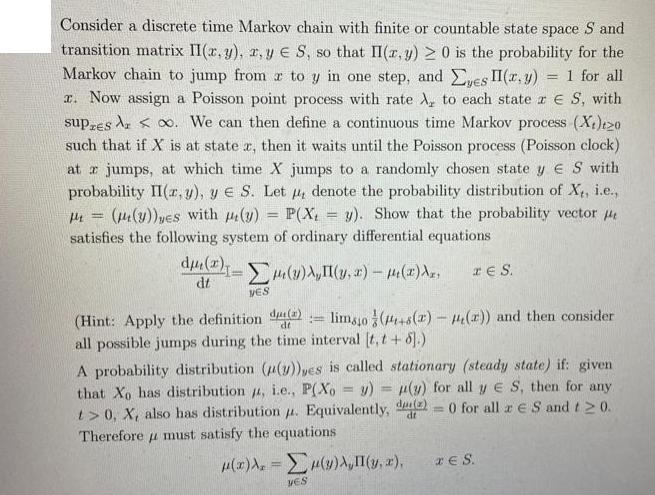

Consider a discrete time Markov chain with finite or countable state space $S$ and transition matrix $\Pi(x, y)$, $x, y \in S$, so that $\Pi(x, y) \ge 0$ is the probability for the Markov chain to jump from $x$ to $y$ in one step, and $\sum_{y \in S} \Pi(x, y) = 1$ for all $x$. Now assign a Poisson point process with rate $\lambda_x$ to each state $x \in S$, with $\sup_{x \in S} \lambda_x < \infty$. We can then define a continuous time Markov process $(X_t)_{t \ge 0}$ such that if $X$ is at state $x$, then it waits until the Poisson process (Poisson clock) at $x$ jumps, at which time $X$ jumps to a randomly chosen state $y \in S$ with probability $\Pi(x, y)$, $y \in S$.

Let $\mu_t$ denote the probability distribution of $X_t$, i.e., $\mu_t(y) = P(X_t = y)$. Show that the probability vector $(\mu_t(y))_{y \in S}$ satisfies the following system of ordinary differential equations:

$$\frac{d\mu_t(x)}{dt} = \sum_{y \in S} \mu_t(y)\,\lambda_y\,\Pi(y, x) - \lambda_x\,\mu_t(x), \qquad x \in S.$$

(Hint: Apply the definition $\frac{d\mu_t(x)}{dt} = \lim_{\delta \downarrow 0} \frac{\mu_{t+\delta}(x) - \mu_t(x)}{\delta}$ and then consider all possible jumps during the time interval $[t, t + \delta]$.)

A probability distribution $(\mu(y))_{y \in S}$ is called stationary (steady state) if: given that $X_0$ has distribution $\mu$, i.e., $P(X_0 = y) = \mu(y)$ for all $y \in S$, then for any $t > 0$, $X_t$ also has distribution $\mu$. Equivalently, $\frac{d\mu_t(x)}{dt} = 0$ for all $x \in S$ and $t \ge 0$. Therefore $\mu$ must satisfy the equations

$$\lambda_x\,\mu(x) = \sum_{y \in S} \mu(y)\,\lambda_y\,\Pi(y, x), \qquad x \in S.$$
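The system of equations in the question can be checked numerically on a small example. The sketch below is only an illustration: the 3-state matrix `Pi`, the rates `lam`, and the helper names `rhs`, `integrate`, and `stationary` are all made up for this purpose and do not come from the question. It writes the right-hand side as "inflow from every state y at rate lam[y] * Pi[y, x], minus outflow from x at rate lam[x]", integrates the system with a classical Runge-Kutta scheme, and compares the long-time result with a directly computed stationary vector.

```python
import numpy as np


def rhs(mu, lam, Pi):
    """Right-hand side of the ODE system, evaluated for every state x at once:
    sum_y mu(y) * lam[y] * Pi[y, x]  -  lam[x] * mu(x)."""
    return (mu * lam) @ Pi - lam * mu


def integrate(mu0, lam, Pi, t_end, steps=10_000):
    """Integrate d(mu)/dt = rhs(mu) from time 0 to t_end with classical RK4."""
    mu = np.asarray(mu0, dtype=float)
    h = t_end / steps
    for _ in range(steps):
        k1 = rhs(mu, lam, Pi)
        k2 = rhs(mu + 0.5 * h * k1, lam, Pi)
        k3 = rhs(mu + 0.5 * h * k2, lam, Pi)
        k4 = rhs(mu + h * k3, lam, Pi)
        mu = mu + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return mu


def stationary(lam, Pi):
    """Solve mu Q = 0 together with sum(mu) = 1, where Q = diag(lam) (Pi - I).
    Assumes a finite, irreducible chain so that the solution is unique."""
    n = len(lam)
    Q = np.diag(lam) @ (Pi - np.eye(n))
    A = np.vstack([Q.T, np.ones(n)])   # n balance equations + normalization
    b = np.zeros(n + 1)
    b[-1] = 1.0
    mu, *_ = np.linalg.lstsq(A, b, rcond=None)
    return mu


# Hypothetical 3-state example (values chosen only for illustration).
Pi = np.array([[0.0, 0.5, 0.5],
               [0.2, 0.3, 0.5],
               [0.6, 0.4, 0.0]])
lam = np.array([1.0, 2.0, 0.5])
mu0 = np.array([1.0, 0.0, 0.0])      # start in state 0 with probability 1

mu_T = integrate(mu0, lam, Pi, t_end=50.0)
mu_star = stationary(lam, Pi)
print("mu at t = 50 :", mu_T)
print("stationary   :", mu_star)
```

In this example the two printed vectors should agree closely, and `lam * mu_star` should match `(mu_star * lam) @ Pi`, which is the stationarity condition stated at the end of the question. A self-jump (here `Pi[1, 1] = 0.3`) contributes equally to inflow and outflow, so it does not affect the balance.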

Step by Step Solution

To show that the probability vector satisfies the system of ordinary differential equations, let's con...
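
The hint in the question can be carried out along the following lines. This is a sketch of one standard argument, not a reproduction of the truncated answer above. The notation is assumed here: $\mu_t(x) = P(X_t = x)$, $\lambda_x$ is the rate of the Poisson clock at $x$, and $\Pi$ is the one-step transition matrix.

Fix $x \in S$ and a small $\delta > 0$. Given $X_t = y$, the clock at $y$ rings during $[t, t+\delta]$ with probability $\lambda_y \delta + o(\delta)$, and two or more jumps occur with probability at most $(\sup_{z} \lambda_z)^2 \delta^2$, uniformly in $y$. Splitting according to whether a jump occurs gives

$$
\begin{aligned}
\mu_{t+\delta}(x)
  &= \mu_t(x)\,(1 - \lambda_x \delta)
   + \sum_{y \in S} \mu_t(y)\,\lambda_y \delta\,\Pi(y, x) + o(\delta), \\
\frac{\mu_{t+\delta}(x) - \mu_t(x)}{\delta}
  &= \sum_{y \in S} \mu_t(y)\,\lambda_y\,\Pi(y, x) - \lambda_x\,\mu_t(x) + \frac{o(\delta)}{\delta}.
\end{aligned}
$$

Letting $\delta \downarrow 0$ yields $\frac{d\mu_t(x)}{dt} = \sum_{y \in S} \mu_t(y)\,\lambda_y\,\Pi(y, x) - \lambda_x\,\mu_t(x)$. The first term on the right in the first line is the probability of being at $x$ with no ring, and the sum collects one ring at $y$ followed by a jump to $x$ (including a self-jump $x \to x$ when $\Pi(x, x) > 0$). Setting the derivative to zero gives the stationarity condition $\lambda_x\,\mu(x) = \sum_{y \in S} \mu(y)\,\lambda_y\,\Pi(y, x)$.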
