Question:

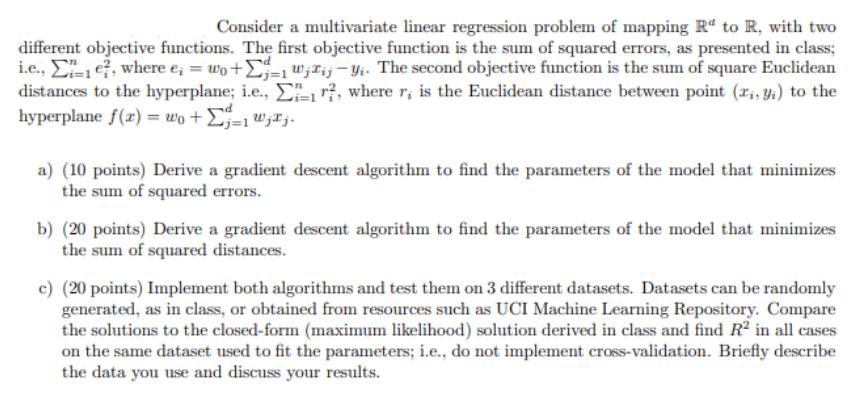

Consider a multivariate linear regression problem of mapping $\mathbb{R}^d$ to $\mathbb{R}$, with two different objective functions. The first objective function is the sum of squared errors, as presented in class; i.e., $\sum_i e_i^2$, where $e_i = w_0 + \sum_{j=1}^{d} w_j x_{ij} - y_i$. The second objective function is the sum of squared Euclidean distances to the hyperplane; i.e., $\sum_i r_i^2$, where $r_i$ is the Euclidean distance between the point $(\mathbf{x}_i, y_i)$ and the hyperplane $f(\mathbf{x}) = w_0 + \sum_{j=1}^{d} w_j x_j$.

a) (10 points) Derive a gradient descent algorithm to find the parameters of the model that minimize the sum of squared errors.

b) (20 points) Derive a gradient descent algorithm to find the parameters of the model that minimize the sum of squared distances.

c) (20 points) Implement both algorithms and test them on 3 different datasets. Datasets can be randomly generated, as in class, or obtained from resources such as the UCI Machine Learning Repository. Compare the solutions to the closed-form (maximum likelihood) solution derived in class and find $R^2$ in all cases on the same dataset used to fit the parameters; i.e., do not implement cross-validation. Briefly describe the data you use and discuss your results.
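A sketch of the gradients behind parts a) and b). It assumes $n$ data points and interprets the hyperplane as the set of points $(\mathbf{x}, y)$ in $\mathbb{R}^{d+1}$ with $y = f(\mathbf{x})$, so that its normal vector is $(w_1, \dots, w_d, -1)$. This is the standard point-to-hyperplane distance calculation, not necessarily the derivation used in class.

$$
E = \sum_{i=1}^{n} e_i^2, \qquad
\frac{\partial E}{\partial w_0} = 2\sum_{i=1}^{n} e_i, \qquad
\frac{\partial E}{\partial w_j} = 2\sum_{i=1}^{n} e_i\,x_{ij}
$$

$$
r_i = \frac{|e_i|}{\sqrt{1 + \sum_{j=1}^{d} w_j^2}}, \qquad
D = \sum_{i=1}^{n} r_i^2 = \frac{E}{s}, \qquad s = 1 + \sum_{j=1}^{d} w_j^2
$$

$$
\frac{\partial D}{\partial w_0} = \frac{2}{s}\sum_{i=1}^{n} e_i, \qquad
\frac{\partial D}{\partial w_j} = \frac{2}{s}\sum_{i=1}^{n} e_i\,x_{ij} - \frac{2\,w_j\,E}{s^2}
$$

Either algorithm then repeats $w_j \leftarrow w_j - \eta\,\partial(\cdot)/\partial w_j$ for $j = 0, \dots, d$ with a step size $\eta$ until the updates become negligible. Note that $D$, unlike $E$, is not convex in the weights, so a small step size and a sensible starting point matter more for b).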

Step by Step Solution


a) Gradient Descent Algorithm for Sum of Squared Errors: Given the objective function $E = \sum_i e_i^2 = \sum_i \left(w_0 + \sum_j w_j x_{ij} - y_i\right)^2$ ...
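Below is a minimal pure-Python sketch of parts a) to c), not the original answer. It runs batch gradient descent on each objective, fits the closed-form solution by solving the normal equations (which coincide with the Gaussian maximum-likelihood estimate), and reports $R^2$ on the training data. All function names, the learning rate, the iteration count, the zero initialization, and the synthetic-data generator are illustrative assumptions. The distance objective assumes the hyperplane is the graph $y = w_0 + \sum_j w_j x_j$ in $\mathbb{R}^{d+1}$, so $r_i = |e_i| / \sqrt{1 + \sum_j w_j^2}$.

```python
import random

# Minimal sketch (hypothetical helper names). X: list of n rows of d floats; y: list of n floats.

def predict(w0, w, x):
    """Hyperplane value f(x) = w0 + sum_j w_j * x_j."""
    return w0 + sum(wj * xj for wj, xj in zip(w, x))

def residuals(w0, w, X, y):
    """e_i = w0 + sum_j w_j x_ij - y_i for every sample."""
    return [predict(w0, w, xi) - yi for xi, yi in zip(X, y)]

def sq_distances(w0, w, X, y):
    """Objective (b): sum_i r_i^2 = sum_i e_i^2 / (1 + ||w||^2)."""
    return sum(e * e for e in residuals(w0, w, X, y)) / (1.0 + sum(wj * wj for wj in w))

def grad_sse(w0, w, X, y):
    """Gradient of objective (a), E = sum_i e_i^2."""
    e = residuals(w0, w, X, y)
    g = [2.0 * sum(ei * xi[j] for ei, xi in zip(e, X)) for j in range(len(w))]
    return 2.0 * sum(e), g

def grad_dist(w0, w, X, y):
    """Gradient of objective (b), D = E / s with s = 1 + ||w||^2."""
    e = residuals(w0, w, X, y)
    s = 1.0 + sum(wj * wj for wj in w)
    E = sum(ei * ei for ei in e)
    g = [2.0 * sum(ei * xi[j] for ei, xi in zip(e, X)) / s - 2.0 * w[j] * E / s ** 2
         for j in range(len(w))]
    return 2.0 * sum(e) / s, g

def gradient_descent(grad_fn, X, y, lr=0.1, iters=4000):
    """Batch gradient descent from zero weights. The step is divided by n, i.e. we
    descend the mean objective, which has the same minimizer. D is not convex, so
    for (b) this is a heuristic that relies on the zero start and a small step."""
    n, d = len(X), len(X[0])
    w0, w = 0.0, [0.0] * d
    for _ in range(iters):
        g0, g = grad_fn(w0, w, X, y)
        w0 -= lr * g0 / n
        w = [wj - lr * gj / n for wj, gj in zip(w, g)]
    return w0, w

def solve(M, b):
    """Solve M z = b by Gaussian elimination with partial pivoting."""
    n = len(M)
    A = [row[:] + [bi] for row, bi in zip(M, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        for r in range(c + 1, n):
            f = A[r][c] / A[c][c]
            for k in range(c, n + 1):
                A[r][k] -= f * A[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (A[r][n] - sum(A[r][k] * z[k] for k in range(r + 1, n))) / A[r][r]
    return z

def closed_form(X, y):
    """Normal equations (A^T A) beta = A^T y, with a leading column of ones in A."""
    A = [[1.0] + list(xi) for xi in X]
    p = len(A[0])
    AtA = [[sum(a[i] * a[j] for a in A) for j in range(p)] for i in range(p)]
    Aty = [sum(a[i] * yi for a, yi in zip(A, y)) for i in range(p)]
    beta = solve(AtA, Aty)
    return beta[0], beta[1:]

def r_squared(w0, w, X, y):
    """Training-set R^2 = 1 - SS_res / SS_tot."""
    ybar = sum(y) / len(y)
    ss_res = sum(e * e for e in residuals(w0, w, X, y))
    ss_tot = sum((yi - ybar) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot

def make_data(n, true_w0, true_w, noise, seed=0):
    """Hypothetical synthetic data: x ~ U(-1, 1)^d, y = f(x) + Gaussian noise on y only."""
    rng = random.Random(seed)
    X = [[rng.uniform(-1.0, 1.0) for _ in true_w] for _ in range(n)]
    y = [predict(true_w0, true_w, xi) + rng.gauss(0.0, noise) for xi in X]
    return X, y

if __name__ == "__main__":
    X, y = make_data(100, 1.0, [2.0, -3.0], noise=0.5)
    fits = {
        "closed form": closed_form(X, y),
        "GD, squared errors": gradient_descent(grad_sse, X, y),
        "GD, squared distances": gradient_descent(grad_dist, X, y),
    }
    for name, (w0, w) in fits.items():
        print(f"{name}: w0={w0:.3f} w={[round(wj, 3) for wj in w]} R2={r_squared(w0, w, X, y):.4f}")
```

The three datasets requested in c) are not included here; one option is calling the hypothetical `make_data` with different seeds, sizes, dimensions, or noise levels, or loading a UCI dataset into the same list format. When the noise is only in $y$, the distance-minimizing fit generally differs from the closed-form one. Because the closed-form solution minimizes the sum of squared errors, it attains the largest training-set $R^2$ of any linear fit, so a lower $R^2$ for the distance fit is expected rather than a bug.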
