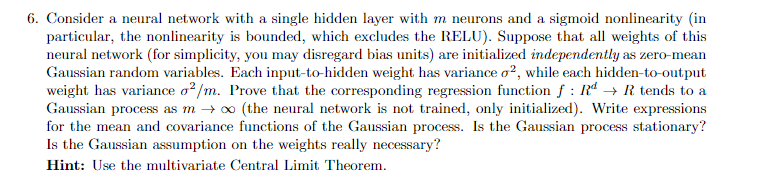

Question: Consider a neural network with a single hidden layer with m neurons and a sigmoid nonlinearity (in particular, the nonlinearity is bounded, which excludes the ReLU). Suppose that all weights of this neural network (for simplicity, you may disregard bias units) are initialized independently as zero-mean Gaussian random variables. Each input-to-hidden weight has variance [...], while each hidden-to-output weight has variance [...]. Prove that the corresponding regression function tends to a Gaussian process as the number of hidden neurons m tends to infinity (the neural network is not trained, only initialized). Write expressions for the mean and covariance functions of the Gaussian process. Is the Gaussian process stationary? Is the Gaussian assumption on the weights really necessary?

Hint: Use the multivariate Central Limit Theorem.
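
The setup can be explored numerically before attempting the proof. The following is a minimal sketch, not a solution: it draws many independent random networks and estimates the mean and covariance of their outputs at a few fixed inputs. The variance values are missing from the question text, so the scaling used here (input-to-hidden variance `sigma_w**2`, hidden-to-output variance `sigma_v**2 / m`) is an assumption, as are the function names `sample_output` and `empirical_moments` and the choice of two-dimensional inputs.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sample_output(xs, m, sigma_w, sigma_v, rng):
    """Draw one random network and return its outputs at every input in xs.

    ASSUMED scaling (not stated in the question text): input-to-hidden
    weights ~ N(0, sigma_w^2), hidden-to-output weights ~ N(0, sigma_v^2 / m).
    Bias units are disregarded, as the question allows.
    """
    d = len(xs[0])
    outs = [0.0] * len(xs)
    for _ in range(m):
        w = [rng.gauss(0.0, sigma_w) for _ in range(d)]
        v = rng.gauss(0.0, sigma_v / math.sqrt(m))
        for i, x in enumerate(xs):
            pre_activation = sum(wk * xk for wk, xk in zip(w, x))
            outs[i] += v * sigmoid(pre_activation)
    return outs


def empirical_moments(xs, m=100, trials=2000, sigma_w=1.0, sigma_v=1.0, seed=0):
    """Estimate the mean vector and covariance matrix of the outputs at xs."""
    rng = random.Random(seed)
    n = len(xs)
    samples = [sample_output(xs, m, sigma_w, sigma_v, rng) for _ in range(trials)]
    mean = [sum(s[i] for s in samples) / trials for i in range(n)]
    cov = [
        [
            sum((s[i] - mean[i]) * (s[j] - mean[j]) for s in samples) / (trials - 1)
            for j in range(n)
        ]
        for i in range(n)
    ]
    return mean, cov


if __name__ == "__main__":
    inputs = [(0.0, 0.0), (5.0, 0.0)]
    mean, cov = empirical_moments(inputs)
    print("empirical mean:", mean)
    print("empirical covariance:", cov)
```

Under the assumed scaling, the sigmoid equals 1/2 at the origin, so the output variance at x = 0 is exactly `sigma_v**2 / 4`, while the variance at an input far from the origin is different. Comparing the two diagonal entries of the estimated covariance is one way to probe the stationarity question empirically.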
