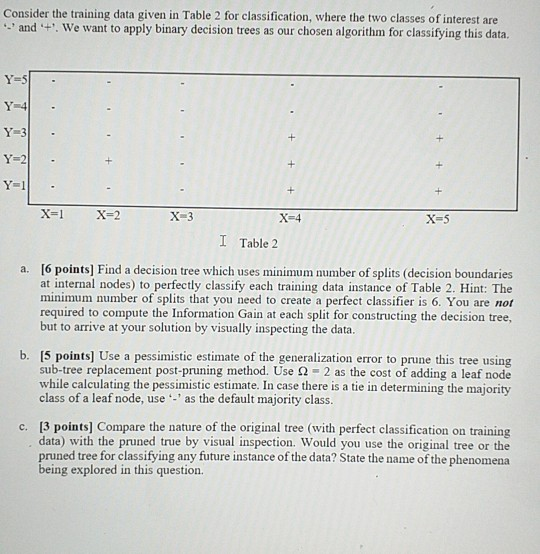


Question: Consider the training data given in Table 2 for classification, where the two classes of interest are and +. We want to apply binary decision trees as our chosen algorithm for classifying this data.

[Table 2: training instances plotted in the X–Y plane; recoverable axis markers: Y = 5, Y = 3, Y = 2, X = 1, X = 4, X = 5.]

a. [6 points] Find a decision tree that uses the minimum number of splits (decision boundaries at internal nodes) to perfectly classify every training instance in Table 2. Hint: the minimum number of splits needed for a perfect classifier is 6. You are not required to compute the information gain at each split; arrive at your solution by visually inspecting the data.

b. [5 points] Use a pessimistic estimate of the generalization error to prune this tree with the subtree-replacement post-pruning method. Use Ω = 2 as the cost of adding a leaf node when calculating the pessimistic estimate. If there is a tie in determining the majority class of a leaf node, use as the default majority class.

c. [3 points] Compare the nature of the original tree (which classifies the training data perfectly) with the pruned tree by visual inspection. Would you use the original tree or the pruned tree to classify future instances of the data? State the name of the phenomenon being explored in this question.
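For part (b), the pessimistic estimate can be written out as follows. This assumes the common textbook definition in which every leaf adds a fixed penalty Ω to the training-error count; the symbols k (number of leaves), e(t_i) (training errors at leaf t_i), e(T) (total training errors) and N (number of training instances) are introduced here and do not appear in the question itself.

$$
e_g(T) = \frac{e(T) + k\,\Omega}{N} = \frac{\sum_{i=1}^{k} e(t_i) + 2k}{N}, \qquad \Omega = 2
$$

Under subtree replacement, a subtree with k_s leaves and e_s training errors is compared with a single leaf labelled by the majority class of all instances reaching the subtree's root, which makes e_ℓ errors. Because N is the same on both sides it cancels, and (assuming ties are resolved in favour of the smaller tree) the subtree is replaced when

$$
e_\ell + 2 \le e_s + 2k_s
$$

For a split whose two children are both leaves (k_s = 2), this means the split survives only if it removes at least 3 training errors, i.e. e_ℓ − e_s ≥ 3.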
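The pruning procedure in part (b) can be sketched in Python. This is a minimal illustration under stated assumptions, not the expected answer: Table 2's points are not available in this text, so the tree in the demo is a made-up toy; `-` stands in for the class symbol lost from the question; `Node`, `majority`, `leaf_errors`, `subtree_cost`, `pessimistic_error` and `prune` are hypothetical helper names; and a subtree is collapsed when the leaf's pessimistic error is less than or equal to the subtree's (ties favour the smaller tree).

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from typing import Optional

OMEGA = 2.0  # per-leaf penalty from part (b)


@dataclass
class Node:
    """Hypothetical tree node; a node with no children is a leaf."""
    labels: list[str]                  # training labels that reach this node
    feature: Optional[str] = None      # split attribute, e.g. "X" or "Y"
    threshold: Optional[float] = None  # split value, e.g. X <= 4
    left: Optional[Node] = None
    right: Optional[Node] = None

    def is_leaf(self) -> bool:
        return self.left is None and self.right is None


def majority(labels: list[str], default: str) -> str:
    """Majority class; falls back to `default` on a tie (or no labels)."""
    counts = Counter(labels)
    if not counts:
        return default
    best = max(counts.values())
    winners = [c for c, n in counts.items() if n == best]
    return default if len(winners) > 1 else winners[0]


def leaf_errors(labels: list[str], default: str) -> int:
    """Training errors if this node were a leaf predicting its majority class."""
    label = majority(labels, default)
    return sum(1 for y in labels if y != label)


def subtree_cost(node: Node, default: str) -> tuple[int, int]:
    """Return (training errors, number of leaves) of the subtree at `node`."""
    if node.is_leaf():
        return leaf_errors(node.labels, default), 1
    e_l, k_l = subtree_cost(node.left, default)
    e_r, k_r = subtree_cost(node.right, default)
    return e_l + e_r, k_l + k_r


def pessimistic_error(root: Node, default: str, omega: float = OMEGA) -> float:
    """(e(T) + omega * k) / N for the whole tree rooted at `root`."""
    e, k = subtree_cost(root, default)
    return (e + omega * k) / len(root.labels)


def prune(node: Node, default: str, omega: float = OMEGA) -> Node:
    """Bottom-up subtree replacement using the pessimistic estimate."""
    if node.is_leaf():
        return node
    node.left = prune(node.left, default, omega)
    node.right = prune(node.right, default, omega)
    e_sub, k_sub = subtree_cost(node, default)
    e_leaf = leaf_errors(node.labels, default)
    # N is shared by both candidates, so comparing numerators is enough.
    # Assumption: on a tie we prefer the smaller tree and collapse the subtree.
    if e_leaf + omega <= e_sub + omega * k_sub:
        return Node(labels=node.labels)
    return node


if __name__ == "__main__":
    # Made-up toy tree (NOT Table 2); "-" stands in for the lost class symbol.
    tree = Node(
        labels=["+"] * 5 + ["-"] * 6, feature="X", threshold=4.0,
        left=Node(labels=["-"] + ["+"] * 5, feature="Y", threshold=2.0,
                  left=Node(labels=["-"]), right=Node(labels=["+"] * 5)),
        right=Node(labels=["-"] * 5),
    )
    print(pessimistic_error(tree, "-"))    # (0 + 2*3) / 11
    pruned = prune(tree, "-")
    print(pessimistic_error(pruned, "-"))  # (1 + 2*2) / 11
```

On this toy tree the inner Y-split is collapsed (the leaf scores 1 + 2 = 3 against 0 + 4 = 4 for the subtree), while the root X-split survives (7 > 5), so the pessimistic estimate drops from 6/11 to 5/11 even though the training error rises from 0 to 1.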
