Question:

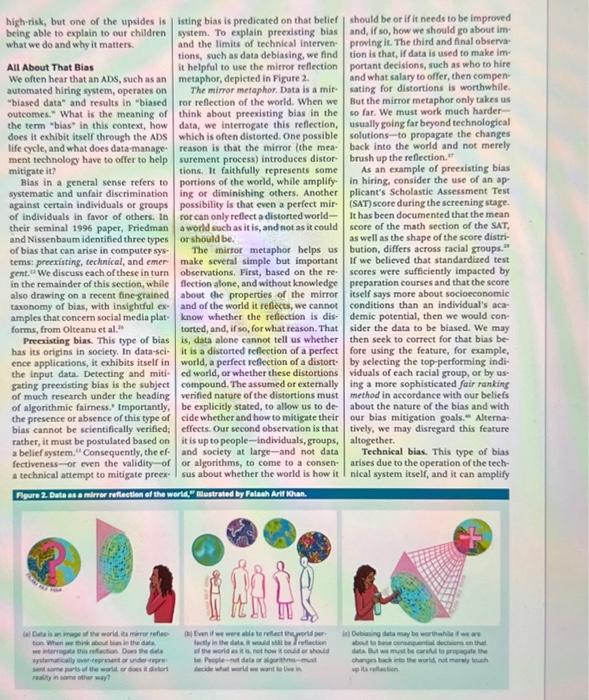

sourcing, screening, interviewing, and selection. (Figure 1 depicts a slightly reinterpreted version of that funnel.)

The popularity of automated hiring systems is due in no small part to our collective quest for efficiency. In 2019 alone, the global market for artificial intelligence (AI) in recruitment was valued at $580 million. Employers choose to use these systems to source and screen candidates faster, with less paperwork, and, in the post-COVID-19 world, as little in-person contact as is practical. Candidates are promised a more streamlined job-search experience, although they rarely have a say in whether they are screened by a machine.

The flip side of efficiency afforded by automation is that we rarely understand how these systems work and, indeed, whether they work. Is a resume screener identifying promising candidates or is it picking up irrelevant, or even discriminatory, patterns from historical data, limiting access to essential economic opportunity for entire segments of the population and potentially exposing an employer to legal liability? Is a job seeker participating in a fair competition if she is being systematically screened out, with no opportunity for human intervention and recourse, despite being well-qualified for the job?

If current adoption trends are any indication, automated hiring systems are poised to impact each one of us: as employees, employers, or both. What's

Key insights

- Responsible data management involves incorporating ethical and legal considerations across the life cycle of data collection, analysis, and use in all data-intensive systems, whether they involve machine learning and AI or not.
- Decisions during data collection and preparation profoundly impact the robustness, fairness, and interpretability of data-intensive systems. We must consider these earlier life cycle stages to improve data quality, control for bias, and allow humans to oversee the operation of these systems.
- Data alone is insufficient to distinguish between a distorted reflection of a perfect world, a perfect reflection of a distorted world, or a combination of both. The assumed or externally verified nature of the distortions must be explicitly stated to allow us to decide whether and how to mitigate their effects.

high-risk, but one of the upsides is being able to explain to our children what we do and why it matters.

All About That Bias

We often hear that an ADS, such as an automated hiring system, operates on "biased data" and results in "biased outcomes." What is the meaning of the term "bias" in this context, how does it exhibit itself through the ADS life cycle, and what does data-management technology have to offer to help mitigate it?

Bias in a general sense refers to systematic and unfair discrimination against certain individuals or groups of individuals in favor of others. In their seminal 1996 paper, Friedman and Nissenbaum identified three types of bias that can arise in computer systems: preexisting, technical, and emergent. We discuss each of these in turn in the remainder of this section, while also drawing on a recent fine-grained taxonomy of bias, with insightful examples that concern social media platforms, from Olteanu et al.

Preexisting bias. This type of bias has its origins in society. In data-science applications, it exhibits itself in the input data. Detecting and mitigating preexisting bias is the subject of much research under the heading of algorithmic fairness. Importantly, the presence or absence of this type of bias cannot be scientifically verified; rather, it must be postulated based on a belief system. Consequently, the effectiveness, or even the validity, of a technical attempt to mitigate preexisting bias is predicated on that belief system. To explain preexisting bias and the limits of technical interventions, such as data debiasing, we find it helpful to use the mirror reflection metaphor, depicted in Figure 2.
The mirror metaphor. Data is a mirror reflection of the world. When we think about preexisting bias in the data, we interrogate this reflection, which is often distorted. One possible reason is that the mirror (the measurement process) introduces distortions. It faithfully represents some portions of the world, while amplifying or diminishing others. Another possibility is that even a perfect mirror can only reflect a distorted world: a world such as it is, and not as it could or should be.

The mirror metaphor helps us make several simple but important observations. First, based on the reflection alone, and without knowledge about the properties of the mirror and of the world it reflects, we cannot know whether the reflection is distorted, and, if so, for what reason. That is, data alone cannot tell us whether it is a distorted reflection of a perfect world, a perfect reflection of a distorted world, or whether these distortions compound. The assumed or externally verified nature of the distortions must be explicitly stated, to allow us to decide whether and how to mitigate their effects. Our second observation is that it is up to people (individuals, groups, and society at large), and not data or algorithms, to come to a consensus about whether the world is how it should be or if it needs to be improved and, if so, how we should go about improving it. The third and final observation is that, if data is used to make important decisions, such as who to hire and what salary to offer, then compensating for distortions is worthwhile. But the mirror metaphor only takes us so far. We must work much harder, usually going far beyond technological solutions, to propagate the changes back into the world and not merely brush up the reflection.

As an example of preexisting bias in hiring, consider the use of an applicant's Scholastic Assessment Test (SAT) score during the screening stage.
It has been documented that the mean score of the math section of the SAT, as well as the shape of the score distribution, differs across racial groups. If we believed that standardized test scores were sufficiently impacted by preparation courses and that the score itself says more about socioeconomic conditions than an individual's academic potential, then we would consider the data to be biased. We may then seek to correct for that bias before using the feature, for example, by selecting the top-performing individuals of each racial group, or by using a more sophisticated fair ranking method in accordance with our beliefs about the nature of the bias and with our bias mitigation goals. Alternatively, we may disregard this feature altogether.

Technical bias. This type of bias arises due to the operation of the technical system itself, and it can amplify

1. What are the main point(s) of the article?
2. What ideas stood out to you and why?
3. What do you know about the topic and where does this existing knowledge come from?
4. Does this text reinforce and/or challenge any existing ideas or assumptions?
5. Did this text assist you in the understanding of the topic? If so, how?
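
As a side illustration of the SAT example in the excerpt, the simplest intervention it mentions (selecting the top-performing individuals of each group, rather than the top performers overall) might be sketched as below. This is a minimal sketch, not the article's method: the function name `top_k_per_group`, the `(name, group, score)` tuple layout, the group labels "A" and "B", and all scores are made-up assumptions for illustration only.

```python
from collections import defaultdict


def top_k_per_group(candidates, k):
    """Return the names of the k highest-scoring candidates within each group.

    `candidates` is an iterable of (name, group, score) tuples.
    The tuple layout and k are illustrative assumptions.
    """
    by_group = defaultdict(list)
    for name, group, score in candidates:
        by_group[group].append((name, score))

    selected = {}
    for group, members in by_group.items():
        # Rank only against members of the same group.
        ranked = sorted(members, key=lambda m: m[1], reverse=True)
        selected[group] = [name for name, _ in ranked[:k]]
    return selected


# Made-up applicants whose score distributions differ by group.
applicants = [
    ("a1", "A", 710), ("a2", "A", 690), ("a3", "A", 650),
    ("b1", "B", 640), ("b2", "B", 620), ("b3", "B", 600),
]

# Per-group selection: two candidates from each group.
result = top_k_per_group(applicants, k=2)

# For comparison: a single global cutoff selecting four candidates.
global_top4 = sorted(applicants, key=lambda c: c[2], reverse=True)[:4]
```

With these made-up numbers, the global cutoff admits three candidates from group A and one from group B, while the per-group rule admits two from each. Which of the two outcomes is preferable depends on the belief about where the score differences come from, which is exactly the point the excerpt makes.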
