Question: Could you please run the code below on Spark using Scala for the assignment below, and correct any mistakes? This was done on a MacBook Pro using the terminal. Also, please post a screenshot of the output for a thumbs up.

val tf = sc.textFile("desktop/spark/linkage/block_1.csv")

// The header line is the one that contains the column name id_1
def isHeader(line: String): Boolean = line.contains("id_1")

val noHeader = tf.filter(x => !isHeader(x))

// "?" marks a missing value and is mapped to NaN
def toDouble(s: String): Double = {
  if ("?".equals(s)) Double.NaN else s.toDouble
}

case class MatchData(id1: Int, id2: Int, scores:Array[Double], matched:Boolean)

def parse(line: String) = {
  val pieces = line.split(',')
  // First two fields are the record ids
  val id1 = pieces(0).toInt
  val id2 = pieces(1).toInt
  // Last field is the match flag
  val matched = pieces(11).toBoolean
  // Fields 2 to 10 are the nine scores
  val scores = pieces.slice(2, 11).map(toDouble)
  MatchData(id1, id2, scores, matched)
}
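The parsing logic can be checked without a cluster. The following is a minimal sketch in plain Scala, assuming a line in the same 12-column layout that the code expects. The name `sampleLine` and its values are illustrative and are not taken from block_1.csv.

```scala
// Plain-Scala check of the parsing helpers; no SparkContext is needed.
case class MatchData(id1: Int, id2: Int, scores: Array[Double], matched: Boolean)

// "?" marks a missing value and becomes NaN
def toDouble(s: String): Double =
  if ("?".equals(s)) Double.NaN else s.toDouble

def parse(line: String): MatchData = {
  val pieces = line.split(',')
  MatchData(
    pieces(0).toInt,
    pieces(1).toInt,
    pieces.slice(2, 11).map(toDouble),
    pieces(11).toBoolean)
}

// Hypothetical sample line (illustrative values only)
val sampleLine = "1,2,0.5,?,1,?,1,1,1,1,0,TRUE"
val md = parse(sampleLine)

println(md.scores.length)          // number of score fields
println(md.scores.count(_.isNaN))  // number of missing scores
println(md.matched)                // match flag
```

If a line has fewer than 12 fields, `pieces(11)` throws, so a quick check like this on one or two real lines is a cheap way to confirm the column layout before mapping over the whole RDD.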

val parsed = noHeader.map(parse(_))

import org.apache.spark.util.StatCounter

// In spark-shell, enter the class and its companion object together in :paste mode
class NAStatCounter extends Serializable {
  val stats: StatCounter = new StatCounter()
  var missing: Long = 0

  // Count NaN as missing; otherwise update the running statistics
  def add(x: Double): NAStatCounter = {
    if (java.lang.Double.isNaN(x)) {
      missing += 1
    } else {
      stats.merge(x)
    }
    this
  }

  def merge(other: NAStatCounter): NAStatCounter = {
    stats.merge(other.stats)
    missing += other.missing
    this
  }

  override def toString = {
    "stats: " + stats.toString + " NaN: " + missing
  }
}

object NAStatCounter extends Serializable {
  def apply(x: Double) = new NAStatCounter().add(x)
}

import org.apache.spark.rdd.RDD

def statsWithMissing(rdd: RDD[Array[Double]]): Array[NAStatCounter] = {
  // Wrap every value in its own counter
  val nasRDD = rdd.map(md => md.map(d => NAStatCounter(d)))
  // Merge the counters field by field
  nasRDD.reduce((n1, n2) => n1.zip(n2).map { case (a, b) => a.merge(b) })
}

val statsm = statsWithMissing(parsed.filter(_.matched).map(_.scores))

val statsn = statsWithMissing(parsed.filter(!_.matched).map(_.scores))

statsm.zip(statsn).map { case (m, n) => (m.missing + n.missing, m.stats.mean - n.stats.mean) }
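The two `filter` calls above each scan `parsed`, so the statistics take two passes over the data. Since the assignment asks for minimal passes, one option is to key every record by its `matched` flag and aggregate once. The sketch below shows that logic on a plain Scala collection. `Acc`, `seqOp`, `combOp`, and the sample records are hypothetical names and values introduced for illustration, and `Acc` is a simplified stand-in for `NAStatCounter` that tracks only the mean and the missing count.

```scala
// Hypothetical running mean with a missing-value count (stand-in for NAStatCounter)
final case class Acc(n: Long = 0L, sum: Double = 0.0, missing: Long = 0L) {
  def add(x: Double): Acc =
    if (x.isNaN) copy(missing = missing + 1) else copy(n = n + 1, sum = sum + x)
  def merge(o: Acc): Acc = Acc(n + o.n, sum + o.sum, missing + o.missing)
  def mean: Double = if (n == 0) Double.NaN else sum / n
}

// seqOp: fold one record's scores into the per-field accumulators
def seqOp(accs: Vector[Acc], scores: Array[Double]): Vector[Acc] =
  accs.zip(scores).map { case (a, x) => a.add(x) }

// combOp: merge two partial results field by field (needed once data is partitioned)
def combOp(a: Vector[Acc], b: Vector[Acc]): Vector[Acc] =
  a.zip(b).map { case (x, y) => x.merge(y) }

// Illustrative records: (matched, scores) with two score fields
val records = Seq(
  (true,  Array(1.0, Double.NaN)),
  (true,  Array(0.5, 1.0)),
  (false, Array(0.0, 0.0)))

val zero = Vector.fill(2)(Acc())

// One pass over the data, keyed by the matched flag
val byMatch: Map[Boolean, Vector[Acc]] =
  records.foldLeft(Map.empty[Boolean, Vector[Acc]]) { case (m, (k, s)) =>
    m.updated(k, seqOp(m.getOrElse(k, zero), s))
  }

// Per field: (total missing, mean(matched) - mean(unmatched))
val result = byMatch(true).zip(byMatch(false)).map { case (m, n) =>
  (m.missing + n.missing, m.mean - n.mean)
}
println(result)
```

In Spark, the same two functions could be handed to `aggregateByKey` on a pair RDD, along the lines of `parsed.map(md => (md.matched, md.scores)).aggregateByKey(zero)(seqOp, combOp)`, with `zero` sized to the nine score fields. That call is an untested suggestion here, not part of the posted code.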

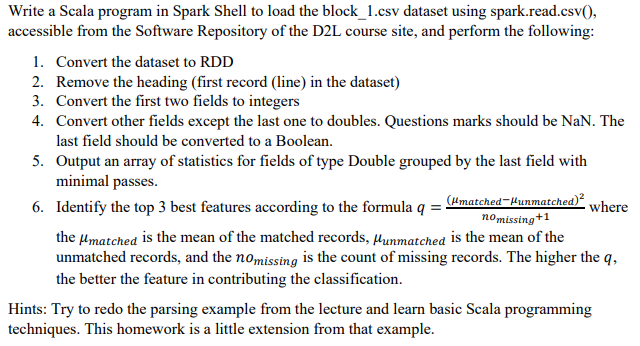

Write a Scala program in Spark Shell to load the block_1.csv dataset using spark.read.csv(), accessible from the Software Repository of the D2L course site, and perform the following:

1. Convert the dataset to an RDD.
2. Remove the heading (the first record (line) in the dataset).
3. Convert the first two fields to integers.
4. Convert the other fields, except the last one, to doubles. Question marks should be NaN. The last field should be converted to a Boolean.
5. Output an array of statistics for the fields of type Double, grouped by the last field, with minimal passes.

Here, matched is the mean of the matched records, unmatched is the mean of the unmatched records, and nomissing is the count of missing records. The higher the q, the better the feature contributes to the classification.

Hints: Try to redo the parsing example from the lecture and learn basic Scala programming techniques. This homework is a small extension of that example.
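The posted code loads the file with `sc.textFile`, while the assignment asks for `spark.read.csv()`. The following is a minimal sketch of steps 1 and 2 done that way. It assumes a spark-shell session, where `spark` is predefined, and reuses the file path from the question. The names `df`, `rows`, `header`, `noHeaderRows`, and `lines` are illustrative.

```scala
// Assumes spark-shell, where `spark` (a SparkSession) is predefined,
// and that the path matches the one used in the question.
val df = spark.read.csv("desktop/spark/linkage/block_1.csv") // columns are read as strings by default

// Step 1: convert the DataFrame to an RDD of Row
val rows = df.rdd

// Step 2: drop the heading, which is the first record
val header = rows.first()
val noHeaderRows = rows.filter(_ != header)

// Rejoin the fields so a parse(line: String) function can be reused for steps 3 and 4
val lines = noHeaderRows.map(_.mkString(","))
```

From here, `lines.map(parse)` would yield the same `MatchData` records as the `sc.textFile` version, provided the file has no empty fields (which the CSV reader would return as null).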
