DATA ANALYTICS

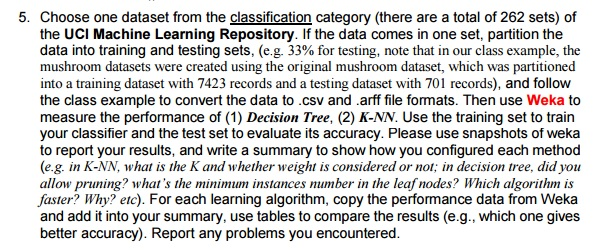

Choose one dataset from the classification category (262 sets in total) of the UCI Machine Learning Repository. If the data comes as a single set, partition it into training and testing sets (e.g. 33% for testing; note that in our class example, the mushroom datasets were created from the original mushroom dataset, which was partitioned into a training dataset with 7423 records and a testing dataset with 701 records), and follow the class example to convert the data to .csv and .arff file formats. Then use Weka to measure the performance of (1) Decision Tree and (2) K-NN. Use the training set to train each classifier and the test set to evaluate its accuracy.

Please use screenshots of Weka to report your results, and write a summary showing how you configured each method (e.g. in K-NN, what is K and is weighting used; in the decision tree, did you allow pruning, and what is the minimum number of instances in the leaf nodes? Which algorithm is faster, and why?). For each learning algorithm, copy the performance data from Weka into your summary, and use tables to compare the results (e.g. which one gives better accuracy). Report any problems you encountered.
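The partitioning and .arff conversion step could be sketched roughly as follows. This is only an illustration under stated assumptions, not the class example's procedure: it assumes every attribute is nominal (as in the mushroom data), that values contain no spaces or commas, and that `?` marks a missing value. The function names, the `toy` relation name, and the six sample rows are all hypothetical.

```python
import random


def split_rows(rows, test_fraction=0.33, seed=42):
    """Shuffle a copy of rows and split it into (train, test)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def nominal_domains(header, rows):
    """Collect the sorted set of values seen in each column ('?' = missing)."""
    return [
        sorted({row[i] for row in rows if row[i] != "?"})
        for i in range(len(header))
    ]


def to_arff(relation, header, domains, rows):
    """Render rows as ARFF text, treating every attribute as nominal."""
    lines = [f"@relation {relation}", ""]
    for name, values in zip(header, domains):
        lines.append(f"@attribute {name} {{{','.join(values)}}}")
    lines += ["", "@data"]
    lines += [",".join(row) for row in rows]
    return "\n".join(lines) + "\n"


# Hypothetical toy rows standing in for a real UCI dataset.
header = ["cap_shape", "odor", "class"]
rows = [
    ["x", "n", "e"], ["b", "f", "p"], ["x", "f", "p"],
    ["f", "n", "e"], ["b", "n", "e"], ["f", "f", "p"],
]

train, test = split_rows(rows)
# Build the attribute domains from the FULL dataset so that the training
# and testing files declare identical headers.
domains = nominal_domains(header, rows)
train_arff = to_arff("toy", header, domains, train)
test_arff = to_arff("toy", header, domains, test)
print(train_arff)
```

The domains are computed from the full dataset rather than from each partition because Weka expects the supplied test set to declare the same attributes as the training set; a value that appears in only one partition would otherwise produce mismatched headers. Writing `train_arff` and `test_arff` to files is left out here. Weka can also open a .csv file directly and save it as .arff.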
