Question: Decision Tree algorithm (4 points). a. Find entropy $H(s)$

Decision Tree algorithm (4 points)

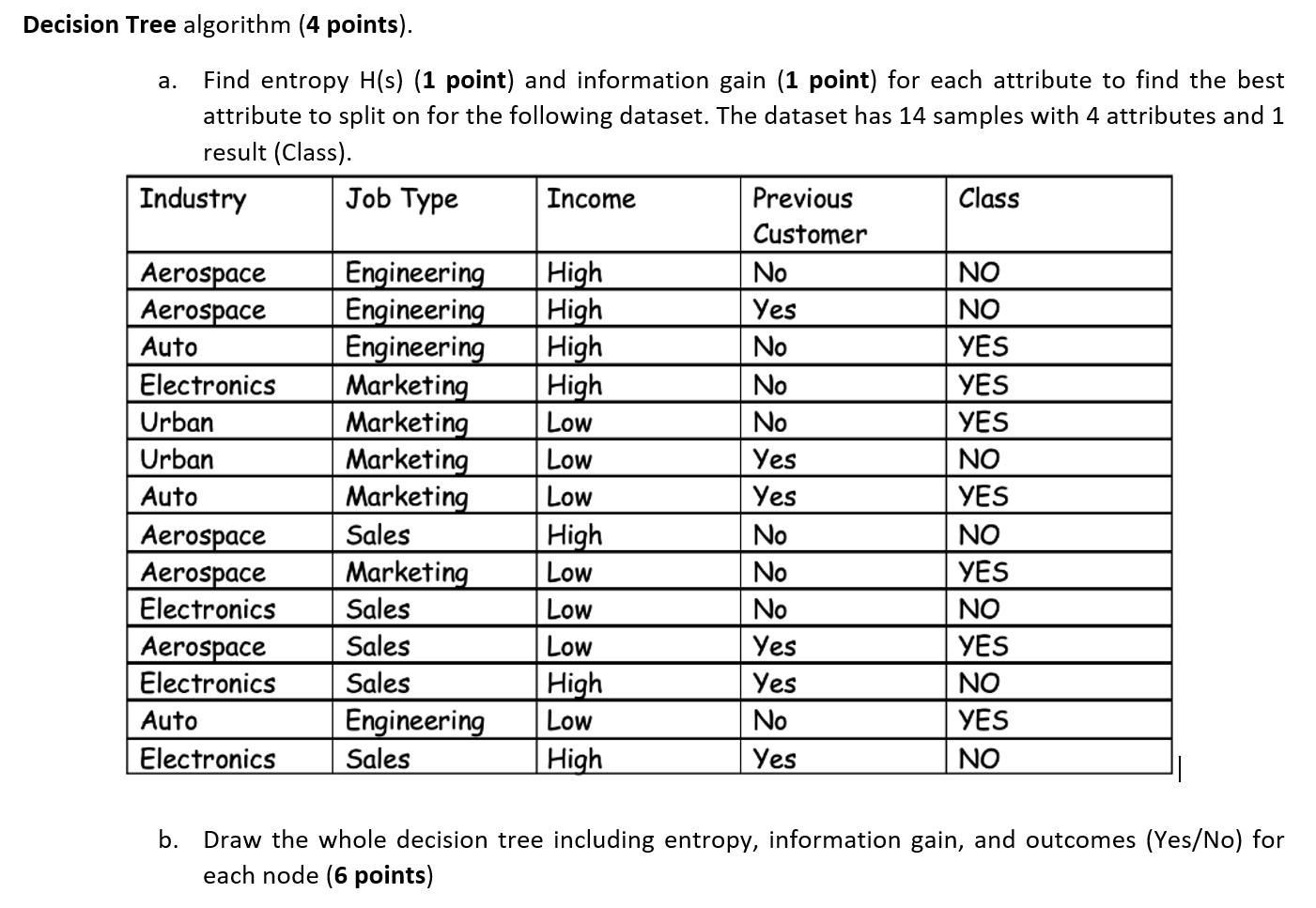

a. Find the entropy $H(s)$ and the information gain for each attribute to find the best attribute to split on for the following dataset. The dataset has 14 samples with 4 attributes and a result, Class.

| Industry | Job Type | Income | Previous Customer | Class |
|---|---|---|---|---|
| Aerospace | Engineering | High | No | NO |
| Aerospace | Engineering | High | Yes | NO |
| Auto | Engineering | High | No | YES |
| Electronics | Marketing | High | No | YES |
| Urban | Marketing | Low | No | YES |
| Urban | Marketing | Low | Yes | NO |
| Auto | Marketing | Low | Yes | YES |
| Aerospace | Sales | High | No | NO |
| Aerospace | Marketing | Low | No | YES |
| Electronics | Sales | Low | No | NO |
| Aerospace | Sales | Low | Yes | YES |
| Electronics | Sales | High | Yes | NO |
| Auto | Engineering | Low | No | YES |
| Electronics | Sales | High | Yes | NO |
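One way to check part (a) numerically is sketched below. It assumes the usual ID3-style definitions, which the question does not spell out: base-2 entropy $H(s) = -\sum_c p_c \log_2 p_c$ over the class proportions $p_c$, and information gain as $H(s)$ minus the size-weighted entropy of the subsets produced by splitting on an attribute. The names `ROWS`, `ATTRIBUTES`, `entropy`, and `information_gain` are illustrative and not part of the question.

```python
from collections import Counter
from math import log2

ATTRIBUTES = ["Industry", "Job Type", "Income", "Previous Customer"]

# Rows transcribed from the dataset table: four attribute values, then Class.
ROWS = [
    ("Aerospace", "Engineering", "High", "No", "NO"),
    ("Aerospace", "Engineering", "High", "Yes", "NO"),
    ("Auto", "Engineering", "High", "No", "YES"),
    ("Electronics", "Marketing", "High", "No", "YES"),
    ("Urban", "Marketing", "Low", "No", "YES"),
    ("Urban", "Marketing", "Low", "Yes", "NO"),
    ("Auto", "Marketing", "Low", "Yes", "YES"),
    ("Aerospace", "Sales", "High", "No", "NO"),
    ("Aerospace", "Marketing", "Low", "No", "YES"),
    ("Electronics", "Sales", "Low", "No", "NO"),
    ("Aerospace", "Sales", "Low", "Yes", "YES"),
    ("Electronics", "Sales", "High", "Yes", "NO"),
    ("Auto", "Engineering", "Low", "No", "YES"),
    ("Electronics", "Sales", "High", "Yes", "NO"),
]


def entropy(labels):
    """Base-2 Shannon entropy of a list of class labels."""
    total = len(labels)
    # p * log2(1/p) is the same as -p * log2(p), written to avoid a negative zero.
    return sum((n / total) * log2(total / n) for n in Counter(labels).values())


def information_gain(rows, col):
    """Parent entropy minus the size-weighted entropy of each subset."""
    subsets = {}
    for row in rows:
        subsets.setdefault(row[col], []).append(row[-1])
    remainder = sum(len(s) / len(rows) * entropy(s) for s in subsets.values())
    return entropy([row[-1] for row in rows]) - remainder


h_s = entropy([row[-1] for row in ROWS])
gains = {name: information_gain(ROWS, i) for i, name in enumerate(ATTRIBUTES)}
best = max(gains, key=gains.get)

print(f"H(s) = {h_s:.4f}")
for name, g in gains.items():
    print(f"Gain(s, {name}) = {g:.4f}")
print("Best attribute:", best)
```

Under these assumptions the class column holds 7 YES and 7 NO, so $H(s) = 1$, and Industry has the largest gain (about 0.279, against roughly 0.199 for Job Type, 0.137 for Income, and 0.061 for Previous Customer), which makes it the attribute to split on first.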

b. Draw the whole decision tree, including the entropy, information gain, and outcome (Yes/No) for each node.
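A rough sketch of how the tree for part (b) could be grown and printed follows. It assumes a plain ID3 procedure (greedy split on the highest information gain, stop when a subset is pure, majority vote if attributes run out); the question does not name a specific variant. The helpers `build` and `show` and the nested-dict node layout are hypothetical choices made for this illustration.

```python
from collections import Counter
from math import log2

ATTRIBUTES = ["Industry", "Job Type", "Income", "Previous Customer"]

# Rows transcribed from the dataset table: four attribute values, then Class.
ROWS = [
    ("Aerospace", "Engineering", "High", "No", "NO"),
    ("Aerospace", "Engineering", "High", "Yes", "NO"),
    ("Auto", "Engineering", "High", "No", "YES"),
    ("Electronics", "Marketing", "High", "No", "YES"),
    ("Urban", "Marketing", "Low", "No", "YES"),
    ("Urban", "Marketing", "Low", "Yes", "NO"),
    ("Auto", "Marketing", "Low", "Yes", "YES"),
    ("Aerospace", "Sales", "High", "No", "NO"),
    ("Aerospace", "Marketing", "Low", "No", "YES"),
    ("Electronics", "Sales", "Low", "No", "NO"),
    ("Aerospace", "Sales", "Low", "Yes", "YES"),
    ("Electronics", "Sales", "High", "Yes", "NO"),
    ("Auto", "Engineering", "Low", "No", "YES"),
    ("Electronics", "Sales", "High", "Yes", "NO"),
]


def entropy(labels):
    """Base-2 Shannon entropy of a list of class labels."""
    total = len(labels)
    return sum((n / total) * log2(total / n) for n in Counter(labels).values())


def gain(rows, col):
    """Information gain of splitting `rows` on attribute column `col`."""
    groups = {}
    for row in rows:
        groups.setdefault(row[col], []).append(row[-1])
    remainder = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return entropy([row[-1] for row in rows]) - remainder


def build(rows, cols):
    """Recursively grow an ID3-style tree as nested dicts."""
    labels = [row[-1] for row in rows]
    node = {"entropy": entropy(labels)}
    # Leaf: the subset is pure, or no attributes remain (majority class).
    if node["entropy"] == 0 or not cols:
        node["label"] = Counter(labels).most_common(1)[0][0]
        return node
    best = max(cols, key=lambda c: gain(rows, c))
    node.update(attr=ATTRIBUTES[best], gain=gain(rows, best), children={})
    remaining = [c for c in cols if c != best]
    for value in sorted({row[best] for row in rows}):
        subset = [row for row in rows if row[best] == value]
        node["children"][value] = build(subset, remaining)
    return node


def show(node, indent=""):
    """Print each node with its entropy, gain, and outcome."""
    if "label" in node:
        print(f"{indent}-> {node['label']} (H={node['entropy']:.3f})")
        return
    print(f"{indent}[{node['attr']}] H={node['entropy']:.3f}, gain={node['gain']:.3f}")
    for value, child in node["children"].items():
        print(f"{indent}  {value}:")
        show(child, indent + "    ")


tree = build(ROWS, list(range(len(ATTRIBUTES))))
show(tree)
```

With this data the sketch splits on Industry at the root. Auto is a pure YES leaf; Aerospace splits on Income (High gives NO, Low gives YES); Electronics splits on Job Type (Marketing gives YES, Sales gives NO); Urban splits on Previous Customer (No gives YES, Yes gives NO). Every second-level split yields pure leaves, so the tree has depth two.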
