Question: Problem 3.(20 points) In this problem, you will investigate building a decision tree for a binary classification problem. The training data is given in Table

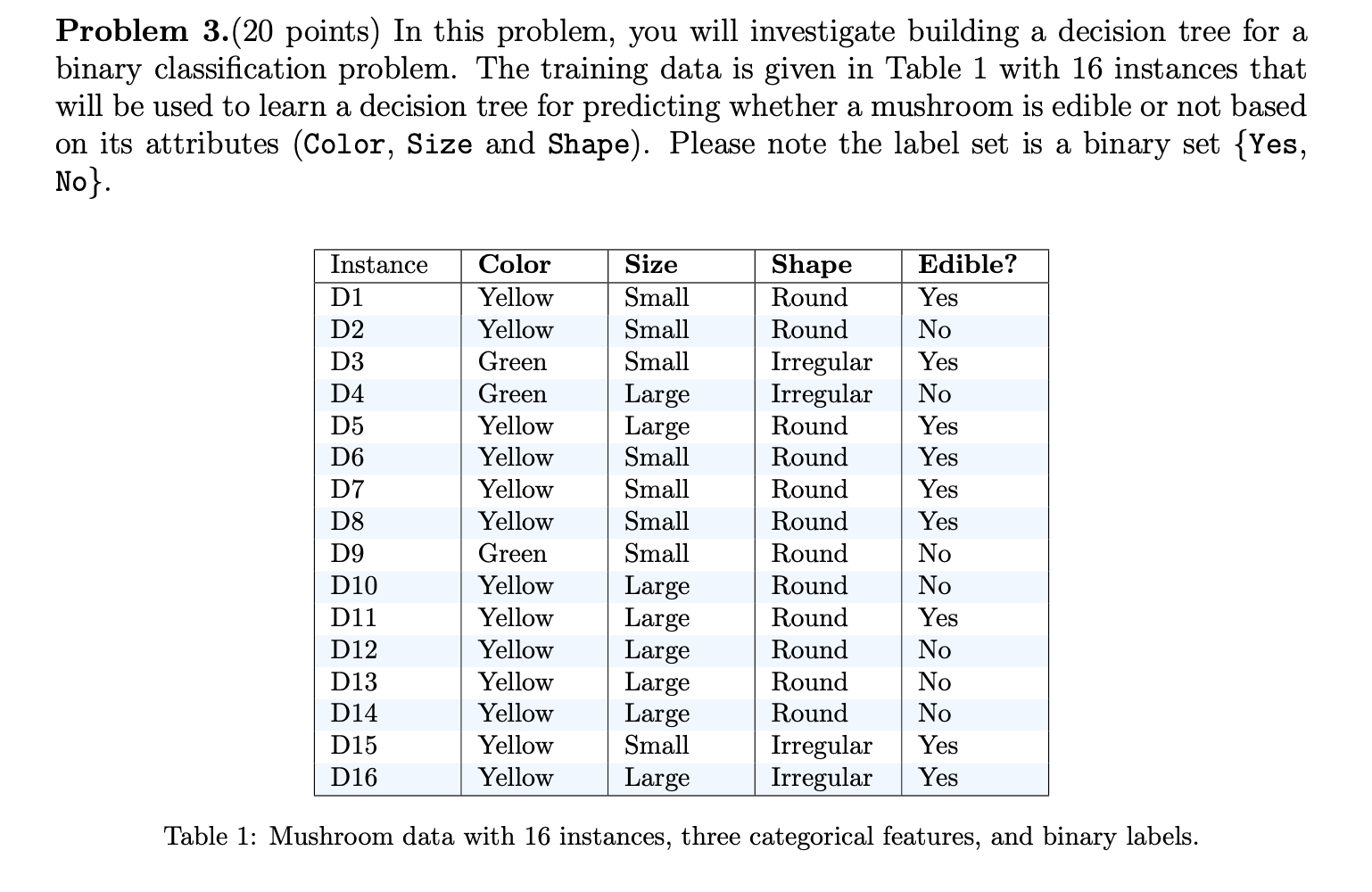

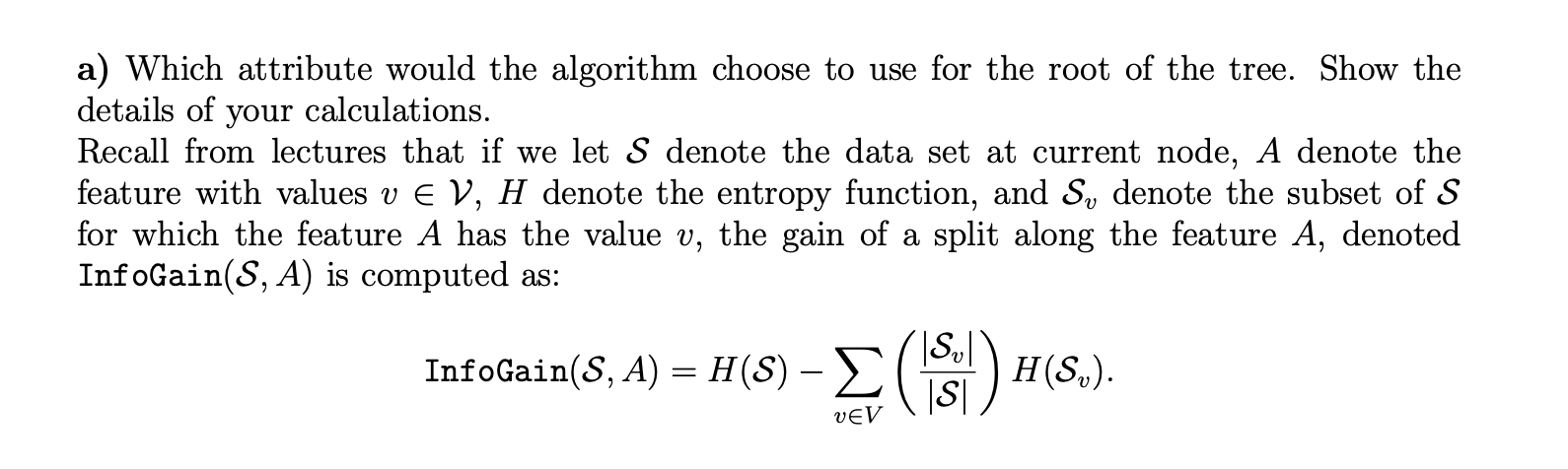

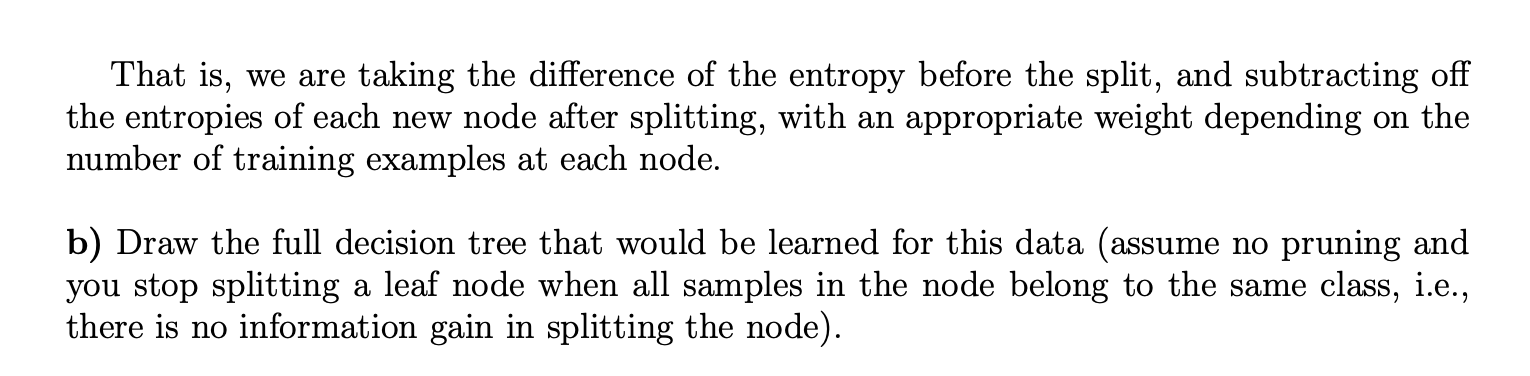

Problem 3. (20 points) In this problem, you will investigate building a decision tree for a binary classification problem. The training data is given in Table 1, with 16 instances that will be used to learn a decision tree for predicting whether a mushroom is edible or not based on its attributes (Color, Size and Shape). Please note the label set is a binary set {Yes, No}.

| Instance | Color | Size | Shape | Edible? |
|----------|--------|-------|-----------|---------|
| D1 | Yellow | Small | Round | Yes |
| D2 | Yellow | Small | Round | No |
| D3 | Green | Small | Irregular | Yes |
| D4 | Green | Large | Irregular | No |
| D5 | Yellow | Large | Round | Yes |
| D6 | Yellow | Small | Round | Yes |
| D7 | Yellow | Small | Round | Yes |
| D8 | Yellow | Small | Round | Yes |
| D9 | Green | Small | Round | No |
| D10 | Yellow | Large | Round | No |
| D11 | Yellow | Large | Round | Yes |
| D12 | Yellow | Large | Round | No |
| D13 | Yellow | Large | Round | No |
| D14 | Yellow | Large | Round | No |
| D15 | Yellow | Small | Irregular | Yes |
| D16 | Yellow | Large | Irregular | Yes |

Table 1: Mushroom data with 16 instances, three categorical features, and binary labels.

a) Which attribute would the algorithm choose to use for the root of the tree? Show the details of your calculations. Recall from lectures that if we let $S$ denote the data set at the current node, $A$ denote the feature with values $v \in V$, $H$ denote the entropy function, and $S_v$ denote the subset of $S$ for which the feature $A$ has the value $v$, the gain of a split along the feature $A$, denoted $\mathrm{InfoGain}(S, A)$, is computed as:

$$\mathrm{InfoGain}(S, A) = H(S) - \sum_{v \in V} \frac{|S_v|}{|S|} H(S_v)$$

That is, we take the entropy before the split and subtract the entropies of each new node after splitting, with an appropriate weight depending on the number of training examples at each node.

b) Draw the full decision tree that would be learned for this data (assume no pruning and you stop splitting a leaf node when all samples in the node belong to the same class, i.e., there is no information gain in splitting the node).
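The information-gain formula in part a) can be checked numerically. The following is a minimal sketch, not the official solution: it assumes the rows of Table 1 as transcribed above (with the two unlabeled rows taken to be D4 and D6), and the names `DATA`, `FEATURES`, `entropy`, and `info_gain` are illustrative choices, not part of the original problem.

```python
from collections import Counter
from math import log2

# Rows of Table 1 as (Color, Size, Shape, Edible?).
# Assumption: the two rows without an instance ID are D4 and D6.
DATA = [
    ("Yellow", "Small", "Round", "Yes"),      # D1
    ("Yellow", "Small", "Round", "No"),       # D2
    ("Green", "Small", "Irregular", "Yes"),   # D3
    ("Green", "Large", "Irregular", "No"),    # D4
    ("Yellow", "Large", "Round", "Yes"),      # D5
    ("Yellow", "Small", "Round", "Yes"),      # D6
    ("Yellow", "Small", "Round", "Yes"),      # D7
    ("Yellow", "Small", "Round", "Yes"),      # D8
    ("Green", "Small", "Round", "No"),        # D9
    ("Yellow", "Large", "Round", "No"),       # D10
    ("Yellow", "Large", "Round", "Yes"),      # D11
    ("Yellow", "Large", "Round", "No"),       # D12
    ("Yellow", "Large", "Round", "No"),       # D13
    ("Yellow", "Large", "Round", "No"),       # D14
    ("Yellow", "Small", "Irregular", "Yes"),  # D15
    ("Yellow", "Large", "Irregular", "Yes"),  # D16
]

# Column index of each feature; the label is always the last column.
FEATURES = {"Color": 0, "Size": 1, "Shape": 2}


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def info_gain(rows, col):
    """H(S) minus the size-weighted entropies of the subsets S_v for column col."""
    groups = {}
    for row in rows:
        groups.setdefault(row[col], []).append(row[-1])
    weighted = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return entropy([row[-1] for row in rows]) - weighted


gains = {name: info_gain(DATA, col) for name, col in FEATURES.items()}
root = max(gains, key=gains.get)

for name, gain in gains.items():
    print(f"InfoGain(S, {name}) = {gain:.4f}")
print("Attribute with the highest gain:", root)
```

Under these assumptions, Size has the largest gain (about 0.106 bits, against roughly 0.036 for both Color and Shape), so it would be chosen for the root. Applying the same `info_gain` function to the Small and Large subsets is one way to continue toward the tree asked for in part b).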

Step by Step Solution

There are 3 Steps involved in it

To solve the problem, we'll follow these steps: a) Calculate Information Gain for Each Attribute. Step 1: Calculate the Entropy for the Whole Dataset S. The ...
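As a hedged illustration of Step 1 (a worked sketch, not the text of the truncated answer): counting the labels in Table 1 gives 9 instances labeled Yes and 7 labeled No, assuming the table is transcribed correctly. The entropy of the whole dataset is then:

$$H(S) = -\frac{9}{16}\log_2\frac{9}{16} - \frac{7}{16}\log_2\frac{7}{16} \approx 0.989 \text{ bits}$$

This is the $H(S)$ term that each attribute's weighted subset entropy is subtracted from when computing $\mathrm{InfoGain}(S, A)$.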
