
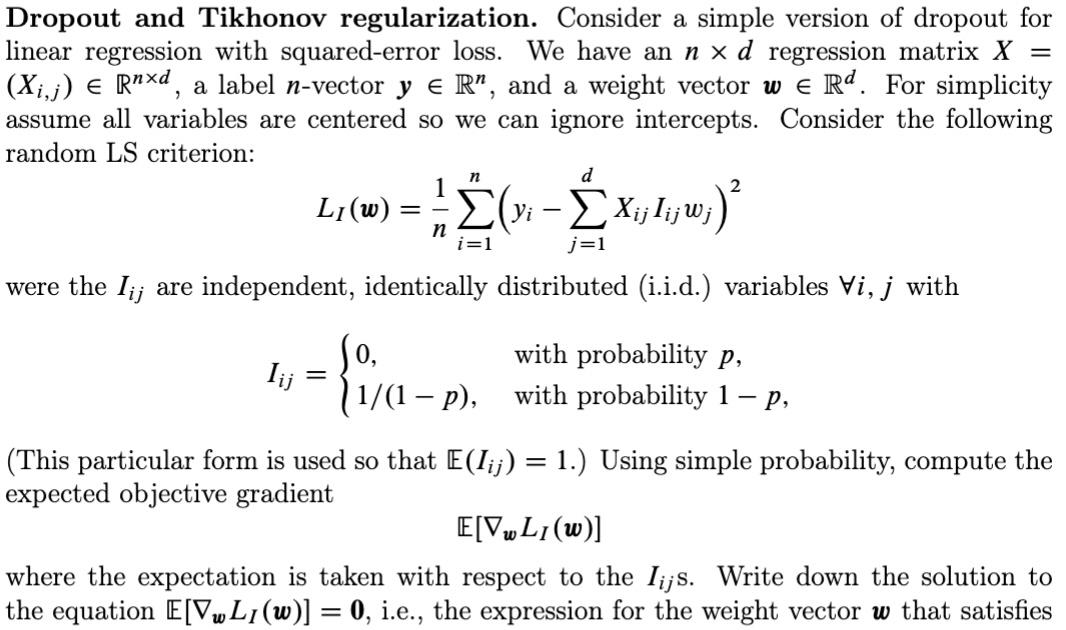

Dropout and Tikhonov regularization. Consider a simple version of dropout for linear regression with squared-error loss. We have an $n \times d$ regression matrix $X = (X_{ij}) \in \mathbb{R}^{n \times d}$, a label $n$-vector $y \in \mathbb{R}^n$, and a weight vector $w \in \mathbb{R}^d$. For simplicity, assume all variables are centered so we can ignore intercepts. Consider the following random least-squares (LS) criterion:

$$L_I(w) = \frac{1}{n} \sum_{i=1}^{n} \Big( y_i - \sum_{j=1}^{d} X_{ij} I_{ij} w_j \Big)^2$$

where the $I_{ij}$ are independent, identically distributed (i.i.d.) variables for all $i, j$ with

$$I_{ij} = \begin{cases} 0 & \text{with probability } p \\ 1/(1-p) & \text{with probability } 1-p \end{cases}$$

(This particular form is used so that $E(I_{ij}) = 1$.)

Using simple probability, compute the expected objective gradient $E[\nabla_w L_I(w)]$, where the expectation is taken with respect to the $I_{ij}$'s. Write down the solution to the equation $E[\nabla_w L_I(w)] = 0$, i.e., the expression for the weight vector $w$ that satisfies this equation.
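One way to sanity-check an answer to this question is numerically. The sketch below is not part of the original question. It assumes the usual derivation: since $\mathrm{Var}(I_{ij}) = p/(1-p)$, the expected loss is the ordinary least-squares loss plus a column-weighted ridge penalty, which suggests the candidate solution $w = \big(X^\top X + \tfrac{p}{1-p}\,\mathrm{diag}(X^\top X)\big)^{-1} X^\top y$. The function names `dropout_ridge_solution` and `exact_expected_gradient` are hypothetical helpers introduced here for illustration. For a tiny problem, the expectation can be computed exactly by enumerating all $2^{nd}$ dropout masks.

```python
import itertools
import numpy as np


def dropout_ridge_solution(X, y, p):
    """Candidate closed form (an assumption, see the lead-in):
    w = (X^T X + p/(1-p) * diag(X^T X))^{-1} X^T y."""
    G = X.T @ X
    D = np.diag(np.diag(G))  # keep only the diagonal of X^T X
    return np.linalg.solve(G + (p / (1.0 - p)) * D, X.T @ y)


def exact_expected_gradient(X, y, w, p):
    """Average the gradient of L_I(w) over every dropout mask.

    Only feasible for tiny n*d, since there are 2**(n*d) masks.
    """
    n, d = X.shape
    grad = np.zeros(d)
    for bits in itertools.product([0, 1], repeat=n * d):
        keep = np.array(bits, dtype=float).reshape(n, d)
        I = keep / (1.0 - p)  # I_ij is 0 or 1/(1-p)
        # Probability of this mask: (1-p) per kept entry, p per dropped entry.
        prob = np.prod(np.where(keep == 1.0, 1.0 - p, p))
        Z = X * I  # masked design matrix
        resid = y - Z @ w
        grad += prob * (-2.0 / n) * (Z.T @ resid)
    return grad


# Small centered example (columns of X and the vector y each sum to zero).
X = np.array([[1.0, 2.0], [-1.0, 0.5], [0.0, -2.5]])
y = np.array([1.0, -2.0, 1.0])
p = 0.3

w_star = dropout_ridge_solution(X, y, p)
print("candidate w:", w_star)
print("expected gradient at w:", exact_expected_gradient(X, y, w_star, p))
```

If the candidate formula is right, the printed expected gradient should be zero up to floating-point error. Setting `p = 0` removes the penalty term, so the formula should reduce to the ordinary least-squares solution.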
