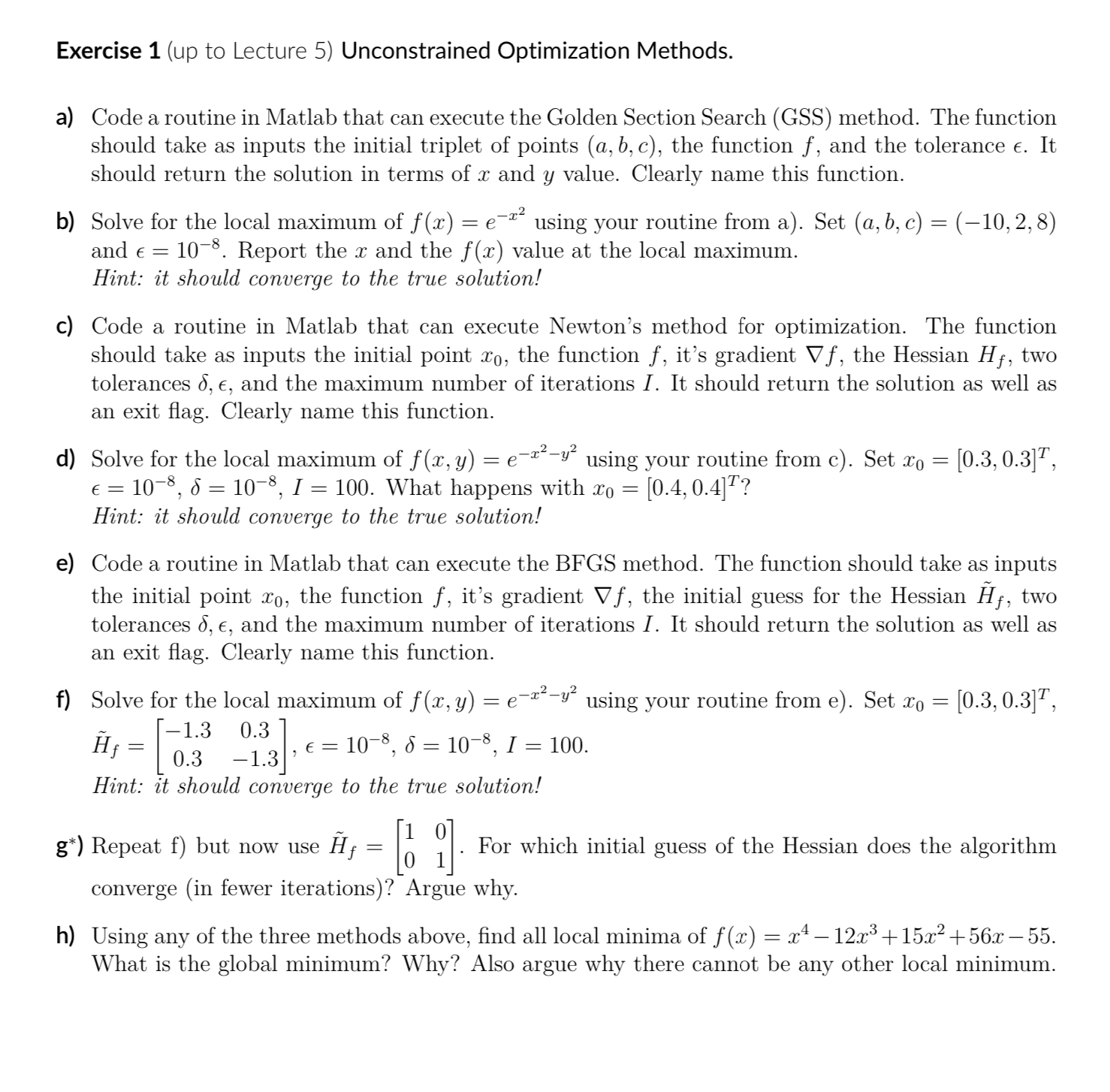

Question: Exercise 1 (up to Lecture 5): Unconstrained Optimization Methods

a) Code a routine in Matlab that can execute the Golden Section Search (GSS) method. The function should take as inputs the initial triplet of points, the function, and the tolerance. It should return the solution in terms of both the optimizing point and the function value. Clearly name this function.
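The exercise asks for Matlab; to illustrate the logic only, here is a minimal Python sketch of golden-section search for a maximum. It assumes the initial triplet a < b < c brackets a maximum (f(b) is at least f(a) and f(c)) and treats the tolerance as an absolute bound on the bracket width c - a. Each iteration probes the larger sub-interval at the golden fraction r = 2 - φ ≈ 0.382 and keeps the three points that still bracket the best value found. The name `golden_section_max` and its argument order are hypothetical.

```python
import math
from typing import Callable, Tuple

# Golden-section fraction r = 2 - phi (about 0.381966), phi = golden ratio.
GOLDEN_R = 2.0 - (1.0 + math.sqrt(5.0)) / 2.0


def golden_section_max(
    f: Callable[[float], float],
    a: float,
    b: float,
    c: float,
    tol: float = 1e-8,
    max_iter: int = 10_000,
) -> Tuple[float, float]:
    """Hypothetical GSS routine: local maximum of f inside the triplet a < b < c.

    Assumes f(b) >= f(a) and f(b) >= f(c), so a maximum lies in [a, c].
    Returns (x_star, f(x_star)).
    """
    fb = f(b)
    if not (a < b < c) or fb < f(a) or fb < f(c):
        raise ValueError("(a, b, c) does not bracket a maximum")
    for _ in range(max_iter):
        if c - a <= tol:  # absolute tolerance on the bracket width
            break
        # Probe the larger sub-interval at the golden fraction.
        if c - b > b - a:
            x = b + GOLDEN_R * (c - b)
        else:
            x = b - GOLDEN_R * (b - a)
        fx = f(x)
        if fx > fb:
            # x is the new best point; old b becomes the outer end on its side.
            if x > b:
                a, b, fb = b, x, fx
            else:
                c, b, fb = b, x, fx
        else:
            # b stays best; x becomes the new outer end on its side.
            if x > b:
                c = x
            else:
                a = x
    return b, fb


# Usage on an illustrative function (not the exercise's): maximum at x = 2.
x_star, f_star = golden_section_max(lambda x: -(x - 2.0) ** 2, 0.0, 1.0, 5.0)
```

To locate a minimum instead, the same routine can be applied to -f, negating the returned value afterwards.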

b) Solve for the local maximum of … using your routine from a). Set …, and report the maximizer and the function value at the local maximum.

Hint: it should converge to the true solution!

c) Code a routine in Matlab that can execute Newton's method for optimization. The function should take as inputs the initial point, the function, its gradient gradf, the Hessian, two tolerances, and the maximum number of iterations I. It should return the solution as well as an exit flag. Clearly name this function.
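As an illustration of the iteration (in Python rather than the Matlab the exercise requires), Newton's method for optimization solves `H(x_k) s_k = -gradf(x_k)` and sets `x_{k+1} = x_k + s_k`. Because it only seeks a point where the gradient vanishes, the same update serves maxima and minima; a negative definite Hessian at the returned point confirms a strict local maximum. The name `newton_optimize`, the reading of the two tolerances as a gradient-norm tolerance and a step-length tolerance, and the exit-flag codes are all assumptions, not taken from the exercise.

```python
from typing import Callable, Tuple

import numpy as np


def newton_optimize(
    x0,
    f: Callable[[np.ndarray], float],
    gradf: Callable[[np.ndarray], np.ndarray],
    hessf: Callable[[np.ndarray], np.ndarray],
    tol_grad: float = 1e-8,
    tol_step: float = 1e-10,
    max_iter: int = 100,
) -> Tuple[np.ndarray, float, int]:
    """Hypothetical Newton routine for optimization.

    Exit flags (assumed convention):
       1  gradient norm below tol_grad (stationary point found)
       2  step length below tol_step
       0  max_iter reached without convergence
      -1  Hessian singular, Newton step undefined
    """
    x = np.atleast_1d(np.asarray(x0, dtype=float))
    for _ in range(max_iter):
        g = np.atleast_1d(np.asarray(gradf(x), dtype=float))
        if np.linalg.norm(g) < tol_grad:
            return x, f(x), 1
        H = np.atleast_2d(np.asarray(hessf(x), dtype=float))
        try:
            # Newton step solves H(x) s = -grad f(x); no explicit inverse needed.
            s = np.linalg.solve(H, -g)
        except np.linalg.LinAlgError:
            return x, f(x), -1
        x = x + s
        if np.linalg.norm(s) < tol_step:
            return x, f(x), 2
    return x, f(x), 0


# Usage on an illustrative concave quadratic (not the exercise's function),
# maximized at (1, -3); a single Newton step lands on the maximizer.
x_hat, f_hat, flag = newton_optimize(
    [0.0, 0.0],
    lambda x: -(x[0] - 1.0) ** 2 - 2.0 * (x[1] + 3.0) ** 2,
    lambda x: np.array([-2.0 * (x[0] - 1.0), -4.0 * (x[1] + 3.0)]),
    lambda x: np.array([[-2.0, 0.0], [0.0, -4.0]]),
)
```

Solving the linear system with `np.linalg.solve` instead of forming the inverse Hessian is cheaper and numerically safer; the Matlab analogue is the backslash operator.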

d) Solve for the local maximum of … using your routine from c). Set …. What happens with …?

Hint: it should converge to the true solution!

e) Code a routine in Matlab that can execute the BFGS method. The function should take as inputs the initial point, the function, its gradient gradf, the initial guess for the Hessian, two tolerances, and the maximum number of iterations I. It should return the solution as well as an exit flag. Clearly name this function.
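BFGS replaces the exact Hessian with an approximation B_k, starting from the supplied initial guess and refined after each step from `s_k = x_{k+1} - x_k` and `y_k = gradf(x_{k+1}) - gradf(x_k)` via `B_{k+1} = B_k - (B_k s_k)(B_k s_k)^T / (s_k^T B_k s_k) + y_k y_k^T / (y_k^T s_k)`. The Python sketch below (the exercise asks for Matlab) is minimal and rests on several assumptions: it takes full unit steps with no line search, so convergence is only local; it reads the two tolerances as a gradient-norm and a step-length tolerance; its exit-flag codes are a hypothetical convention; and it returns the iteration count as an extra output, which the exercise does not request, so that runs with different initial Hessian guesses can be compared. The names `bfgs_optimize`, `f_demo`, and `grad_demo` are hypothetical.

```python
from typing import Callable, Tuple

import numpy as np


def bfgs_optimize(
    x0,
    f: Callable[[np.ndarray], float],
    gradf: Callable[[np.ndarray], np.ndarray],
    H0,
    tol_grad: float = 1e-8,
    tol_step: float = 1e-10,
    max_iter: int = 200,
) -> Tuple[np.ndarray, float, int, int]:
    """Hypothetical BFGS routine with full (unit) steps and no line search.

    H0 is the initial Hessian guess (negative definite when seeking a maximum).
    Returns (x, f(x), exit_flag, iterations); flags follow an assumed convention:
    1 = gradient below tol_grad, 2 = step below tol_step,
    0 = max_iter reached, -1 = Hessian approximation singular.
    """
    x = np.atleast_1d(np.asarray(x0, dtype=float))
    B = np.atleast_2d(np.asarray(H0, dtype=float)).copy()
    g = np.atleast_1d(np.asarray(gradf(x), dtype=float))
    for k in range(max_iter):
        if np.linalg.norm(g) < tol_grad:
            return x, f(x), 1, k
        try:
            s = np.linalg.solve(B, -g)  # quasi-Newton step: B s = -grad f
        except np.linalg.LinAlgError:
            return x, f(x), -1, k
        x_new = x + s
        g_new = np.atleast_1d(np.asarray(gradf(x_new), dtype=float))
        y = g_new - g
        Bs = B @ s
        sBs = float(s @ Bs)
        ys = float(y @ s)
        # BFGS update of the Hessian approximation; skipped when a denominator
        # is numerically zero, since the update is then undefined.
        if abs(sBs) > 1e-14 and abs(ys) > 1e-14:
            B = B - np.outer(Bs, Bs) / sBs + np.outer(y, y) / ys
        x, g = x_new, g_new
        if np.linalg.norm(s) < tol_step:
            return x, f(x), 2, k + 1
    return x, f(x), 0, max_iter


# Usage on an illustrative concave quadratic (not the exercise's function)
# whose exact Hessian is -2I and whose maximizer is (1, -3).
def f_demo(x):
    return -(x[0] - 1.0) ** 2 - (x[1] + 3.0) ** 2


def grad_demo(x):
    return np.array([-2.0 * (x[0] - 1.0), -2.0 * (x[1] + 3.0)])


res_identity = bfgs_optimize([0.0, 0.0], f_demo, grad_demo, -np.eye(2))
res_exact = bfgs_optimize([0.0, 0.0], f_demo, grad_demo, -2.0 * np.eye(2))
```

On this quadratic, the run started from -2I (the exact Hessian) stops after one iteration, because its first step is an exact Newton step, while the run started from -I needs two.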

f) Solve for the local maximum of … using your routine from e). Set the initial Hessian guess to ….

Hint: it should converge to the true solution!

g) Repeat f), but now set the initial Hessian guess to …. For which initial guess of the Hessian does the algorithm converge in fewer iterations? Argue why.

h) Using any of the three methods above, find all local minima of …. What is the global minimum? Why? Also argue why there cannot be any other local minimum.
