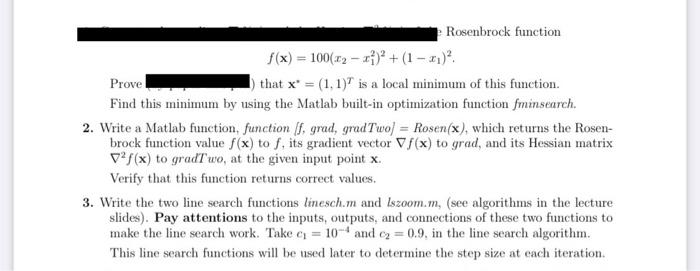

Question: Please use MATLAB to code the functions, show the output, and explain. Thank you.

Homework 3, assigned February 5, due February 19.

In this homework, we solve an unconstrained optimization problem by using the steepest descent method and Newton-type methods, all combined with line search.

Rosenbrock function: $f(x) = 100(x_2 - x_1^2)^2 + (1 - x_1)^2$

1. Prove that $x^* = (1, 1)^T$ is a local minimum of this function. Find this minimum by using the MATLAB built-in optimization function `fminsearch`.
2. Write a MATLAB function, `function [f, grad, gradTwo] = Rosen(x)`, which returns the Rosenbrock function value $f(x)$ to `f`, its gradient vector $\nabla f(x)$ to `grad`, and its Hessian matrix $\nabla^2 f(x)$ to `gradTwo`, at the given input point `x`. Verify that this function returns correct values.
3. Write the two line search functions `linesch.m` and `lszoom.m` (see the algorithms in the lecture slides). Pay attention to the inputs, outputs, and connections of these two functions to make the line search work. Take $c_1 = 10^{-\ldots}$ [exponent missing] and $c_2 = 0.9$ in the line search algorithm. These line search functions will be used later to determine the step size at each iteration.

Step by Step Solution
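
For the proof in part 1, one route is the second-order sufficient condition: a point with zero gradient and positive definite Hessian is a strict local minimizer. The derivation below is a sketch that assumes the standard Rosenbrock form $f(x) = 100(x_2 - x_1^2)^2 + (1 - x_1)^2$.

```latex
\begin{align*}
\nabla f(x) &=
\begin{pmatrix}
-400\,x_1 (x_2 - x_1^2) - 2(1 - x_1) \\
200\,(x_2 - x_1^2)
\end{pmatrix},
&
\nabla^2 f(x) &=
\begin{pmatrix}
1200\,x_1^2 - 400\,x_2 + 2 & -400\,x_1 \\
-400\,x_1 & 200
\end{pmatrix}.
\\[6pt]
\nabla f(1,1) &= \begin{pmatrix} 0 \\ 0 \end{pmatrix},
&
\nabla^2 f(1,1) &= \begin{pmatrix} 802 & -400 \\ -400 & 200 \end{pmatrix}.
\end{align*}

% Leading principal minors of the Hessian at (1,1):
\[
802 > 0, \qquad \det \nabla^2 f(1,1) = 802 \cdot 200 - (-400)^2 = 400 > 0 .
\]
```

Both leading principal minors are positive, so the Hessian at $(1,1)^T$ is positive definite and the point is a strict local minimizer. Since $f$ is a sum of squares and $f(1,1) = 0$, it is in fact the global minimum as well.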

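Parts 1 and 2 can be sketched in a single script. The code below is one possible implementation, not the course's reference solution: the test point `x0 = [-1.2; 1]`, the step `h`, and the `fminsearch` tolerances are assumptions chosen for illustration. `Rosen` is written here as a local function at the end of the script; for the assignment it would be saved in its own file `Rosen.m`.

```matlab
% Check Rosen at the claimed minimizer x* = (1,1)'.
xstar = [1; 1];
[f, g, H] = Rosen(xstar);
fprintf('f(x*) = %g, ||grad f(x*)|| = %g\n', f, norm(g));
fprintf('eig(H(x*)) = [%g, %g]\n', eig(H));   % both should be positive

% Verify grad and gradTwo against central finite differences.
x0 = [-1.2; 1];      % ASSUMPTION: common test point, not given in the assignment
h  = 1e-6;           % ASSUMPTION: finite-difference step
[~, g0, H0] = Rosen(x0);
gfd = zeros(2, 1);
Hfd = zeros(2, 2);
for i = 1:2
    e = zeros(2, 1);
    e(i) = h;
    [fp, gp] = Rosen(x0 + e);
    [fm, gm] = Rosen(x0 - e);
    gfd(i)    = (fp - fm) / (2 * h);   % i-th partial derivative of f
    Hfd(:, i) = (gp - gm) / (2 * h);   % i-th column of the Hessian
end
fprintf('gradient error = %g\n', norm(g0 - gfd));
fprintf('Hessian error  = %g\n', norm(H0 - Hfd));

% Derivative-free minimization with the built-in fminsearch (Nelder-Mead).
opts = optimset('TolX', 1e-8, 'TolFun', 1e-8, ...
                'MaxIter', 2000, 'MaxFunEvals', 2000);
[xmin, fval] = fminsearch(@(x) Rosen(x), x0, opts);
fprintf('fminsearch: x = (%.6f, %.6f), f = %g\n', xmin(1), xmin(2), fval);

function [f, grad, gradTwo] = Rosen(x)
    % Rosenbrock value, gradient, and Hessian at a 2-vector x.
    r = x(2) - x(1)^2;
    f = 100 * r^2 + (1 - x(1))^2;
    grad = [-400 * x(1) * r - 2 * (1 - x(1)); 200 * r];
    gradTwo = [1200 * x(1)^2 - 400 * x(2) + 2, -400 * x(1); ...
               -400 * x(1),                    200];
end
```

At `x* = (1,1)'` the function value and gradient are exactly zero and both Hessian eigenvalues are positive, which matches the second-order condition. Small finite-difference errors indicate that `grad` and `gradTwo` agree with the function value, and `fminsearch` should report a point close to `(1, 1)`.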

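For part 3, the lecture slides are not available here, so the sketch below assumes they follow the usual strong Wolfe line search with a bracketing phase (`linesch`) and a zoom phase (`lszoom`), as in Nocedal and Wright's Algorithms 3.5 and 3.6. The function signatures, the bisection step inside `lszoom`, the cap `alphaMax = 10`, and the value `c1 = 1e-4` are all assumptions. The short driver uses a Newton direction with a steepest descent fallback only to exercise the line search.

```matlab
c1 = 1e-4;           % ASSUMPTION: the exponent is missing in the assignment text
c2 = 0.9;            % given in the assignment
x = [-1.2; 1];       % ASSUMPTION: starting point
maxIter = 200;
for k = 0:maxIter
    [f, g, H] = Rosen(x);
    if norm(g) < 1e-6 || k == maxIter, break; end
    p = -H \ g;                      % Newton direction
    if g' * p >= 0, p = -g; end      % fall back to steepest descent
    alpha = linesch(@Rosen, x, p, c1, c2, 10);
    x = x + alpha * p;
end
fprintf('iterations = %d, x = (%.8f, %.8f), f = %.3e\n', k, x(1), x(2), f);

function alpha = linesch(fun, x, p, c1, c2, alphaMax)
    % Bracketing phase: grow the step until an interval containing a
    % strong Wolfe point is found, then hand it to lszoom.
    [phi0, g0] = fun(x);
    dphi0 = g0' * p;                 % slope at alpha = 0 (negative for descent)
    aPrev = 0;  phiPrev = phi0;
    a = min(1, alphaMax);
    for i = 1:50
        [phi, g] = fun(x + a * p);
        if phi > phi0 + c1 * a * dphi0 || (i > 1 && phi >= phiPrev)
            alpha = lszoom(fun, x, p, aPrev, a, phi0, dphi0, c1, c2);
            return;
        end
        dphi = g' * p;
        if abs(dphi) <= -c2 * dphi0  % curvature condition holds
            alpha = a;
            return;
        end
        if dphi >= 0
            alpha = lszoom(fun, x, p, a, aPrev, phi0, dphi0, c1, c2);
            return;
        end
        if a >= alphaMax, break; end
        aPrev = a;  phiPrev = phi;
        a = min(2 * a, alphaMax);
    end
    alpha = a;
end

function alpha = lszoom(fun, x, p, aLo, aHi, phi0, dphi0, c1, c2)
    % Zoom phase: shrink [aLo, aHi] by bisection until a trial step
    % satisfies both strong Wolfe conditions.
    phiLo = fun(x + aLo * p);
    for j = 1:50
        a = 0.5 * (aLo + aHi);
        [phi, g] = fun(x + a * p);
        if phi > phi0 + c1 * a * dphi0 || phi >= phiLo
            aHi = a;
        else
            dphi = g' * p;
            if abs(dphi) <= -c2 * dphi0
                alpha = a;
                return;
            end
            if dphi * (aHi - aLo) >= 0, aHi = aLo; end
            aLo = a;  phiLo = phi;
        end
    end
    alpha = aLo;     % best step with sufficient decrease found so far
end

function [f, grad, gradTwo] = Rosen(x)
    % Rosenbrock value, gradient, and Hessian at a 2-vector x.
    r = x(2) - x(1)^2;
    f = 100 * r^2 + (1 - x(1))^2;
    grad = [-400 * x(1) * r - 2 * (1 - x(1)); 200 * r];
    gradTwo = [1200 * x(1)^2 - 400 * x(2) + 2, -400 * x(1); -400 * x(1), 200];
end
```

Passing `phi0` and `dphi0` from `linesch` into `lszoom` is the connection the assignment asks about: both functions must test the Wolfe conditions against the same values at `alpha = 0`. Replacing `p = -H \ g` with `p = -g` gives the pure steepest descent variant, which needs a much larger `maxIter` on this function.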