Question:

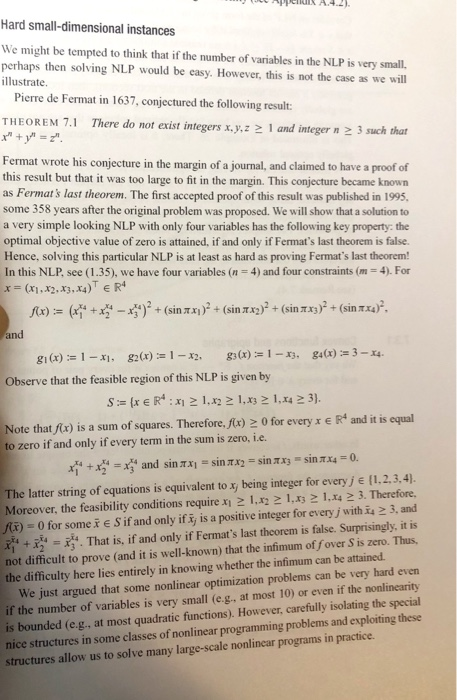

Exercise 2 (20 marks). Consider the following graph, which represents a road network. Each vertex represents a location, and each edge represents a road running between the two locations. There are 100 cars that want to travel from location $s$ to location $t$. There are 5 possible routes that each car can take, denoted by $P_1, P_2, P_3, P_4, P_5$ as indicated below. For each edge $e$, the time it takes to travel on this edge depends on the number of cars that use this edge. If $c$ cars use edge $e$, the travel time on $e$ for each car is $t(e,c) = w_e \cdot (c/40)^2$, where $w_e$ is a constant for each edge $e$. The value $w_e$ for each edge is labeled in the first graph. Write a nonlinear program that minimizes the total time that the 100 cars will take to go from $s$ to $t$. (Note: You may want to read 7.2.2 from the textbook, or lecture 5 from the slides on Learn.)

Hard small-dimensional instances

We might be tempted to think that if the number of variables in an NLP is very small, then solving the NLP would be easy. However, this is not the case, as we will illustrate. In 1637, Pierre de Fermat conjectured the following result:

THEOREM 7.1 There do not exist integers $x, y, z \ge 1$ and an integer $n \ge 3$ such that $x^n + y^n = z^n$.

Fermat wrote his conjecture in the margin of his copy of Diophantus's Arithmetica and claimed to have a proof of this result that was too large to fit in the margin. This conjecture became known as Fermat's Last Theorem. The first accepted proof of this result was published in 1995, some 358 years after the original problem was proposed.

We will show that a very simple-looking NLP with only four variables has the following key property: its optimal objective value of zero is attained if and only if Fermat's Last Theorem is false. Hence, solving this particular NLP is at least as hard as proving Fermat's Last Theorem! In this NLP, see (1.35), we have four variables ($n = 4$) and four constraints ($m = 4$).
For $x = (x_1, x_2, x_3, x_4)^\top \in \mathbb{R}^4$, let

$$f(x) = \left(x_1^{x_4} + x_2^{x_4} - x_3^{x_4}\right)^2 + (\sin \pi x_1)^2 + (\sin \pi x_2)^2 + (\sin \pi x_3)^2 + (\sin \pi x_4)^2$$

and $g_1(x) = 1 - x_1$, $g_2(x) = 1 - x_2$, $g_3(x) = 1 - x_3$, $g_4(x) = 3 - x_4$. Observe that the feasible region of this NLP is given by $S := \{x \in \mathbb{R}^4 : x_1 \ge 1, x_2 \ge 1, x_3 \ge 1, x_4 \ge 3\}$.

Note that $f(x)$ is a sum of squares. Therefore, $f(x) \ge 0$ for every $x \in \mathbb{R}^4$, and it is equal to zero if and only if every term in the sum is zero, i.e., $x_1^{x_4} + x_2^{x_4} = x_3^{x_4}$ and $\sin \pi x_1 = \sin \pi x_2 = \sin \pi x_3 = \sin \pi x_4 = 0$. The latter string of equations is equivalent to $x_j$ being an integer for every $j \in \{1, 2, 3, 4\}$. Moreover, the feasibility conditions require $x_1 \ge 1$, $x_2 \ge 1$, $x_3 \ge 1$, $x_4 \ge 3$. Therefore, $f(\bar{x}) = 0$ for some $\bar{x} \in S$ if and only if $\bar{x}_j$ is a positive integer for every $j$, with $\bar{x}_4 \ge 3$, and $\bar{x}_1^{\bar{x}_4} + \bar{x}_2^{\bar{x}_4} = \bar{x}_3^{\bar{x}_4}$. That is, if and only if Fermat's Last Theorem is false. Surprisingly, it is not difficult to prove (and it is well known) that the infimum of $f$ over $S$ is zero. Thus, the difficulty here lies entirely in knowing whether the infimum can be attained.

We just argued that some nonlinear optimization problems can be very hard even if the number of variables is very small (e.g., at most 10) or even if the nonlinearity is bounded (e.g., at most quadratic functions). However, carefully isolating the special structure in some classes of nonlinear programming problems and exploiting that structure allows us to solve many large-scale nonlinear programs in practice.
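The Python sketch below makes the equivalence between zeros of $f$ and counterexamples to Fermat's Last Theorem concrete. The function names `f`, `feasible` and `integer_zeros` are illustrative only and do not come from the textbook. The sketch evaluates $f$, checks membership in $S$, and searches a bounded box of integer points for zeros of the first square.

The point $(3, 4, 5, 2)$ shows why the constraint $x_4 \ge 3$ matters. There $f$ vanishes up to rounding, because $3^2 + 4^2 = 5^2$, but the point lies outside $S$. A bounded search can only find counterexamples, never rule them out. In floating point, $\sin(\pi k)$ is also only approximately zero, so this is an illustration, not a substitute for the argument above.

```python
import math


def f(x):
    """Objective of the four-variable NLP: a sum of five squares."""
    x1, x2, x3, x4 = x
    # First square vanishes exactly when x1^x4 + x2^x4 = x3^x4.
    fermat_term = (x1 ** x4 + x2 ** x4 - x3 ** x4) ** 2
    # Remaining squares vanish exactly when every x_j is an integer.
    integrality_terms = sum(math.sin(math.pi * xj) ** 2 for xj in x)
    return fermat_term + integrality_terms


def feasible(x):
    """True iff g_j(x) <= 0 for j = 1..4, i.e. x1, x2, x3 >= 1 and x4 >= 3."""
    x1, x2, x3, x4 = x
    return x1 >= 1 and x2 >= 1 and x3 >= 1 and x4 >= 3


def integer_zeros(limit, max_n, min_n=3):
    """Return points (a, b, c, n) with 1 <= a <= b <= limit, c <= limit and
    min_n <= n <= max_n such that a^n + b^n = c^n (exact integer arithmetic)."""
    hits = []
    for n in range(min_n, max_n + 1):
        # Map each n-th power back to its root for constant-time lookups.
        roots = {c ** n: c for c in range(1, limit + 1)}
        for a in range(1, limit + 1):
            for b in range(a, limit + 1):
                c = roots.get(a ** n + b ** n)
                if c is not None:
                    hits.append((a, b, c, n))
    return hits


if __name__ == "__main__":
    print(f((3, 4, 5, 2)), feasible((3, 4, 5, 2)))  # tiny value, False
    print(f((1, 1, 1, 3)), feasible((1, 1, 1, 3)))  # about 1.0, True
    print(integer_zeros(50, 6))
```

Consistent with Fermat's Last Theorem, `integer_zeros(50, 6)` returns an empty list. Relaxing the exponent bound to `min_n=2` immediately finds Pythagorean triples such as `(3, 4, 5, 2)`.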

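For Exercise 2, one natural modelling choice (a sketch, not an official solution) is as follows:

- Let $x_i$ be the number of cars using route $P_i$.
- Let $c_e = \sum_{i : e \in P_i} x_i$ be the number of cars on edge $e$.

Each of the $c_e$ cars on edge $e$ spends $t(e, c_e)$ there, so the total time is $\sum_e c_e \, t(e, c_e)$. Assuming the travel-time formula reads $t(e,c) = w_e (c/40)^2$, this equals $\frac{1}{1600} \sum_e w_e c_e^3$. It is minimized subject to $x_1 + x_2 + x_3 + x_4 + x_5 = 100$ and $x_i \ge 0$, plus integrality if cars are treated as indivisible.

The Python below evaluates that objective and enumerates integer splits, but only on a small hypothetical network. The edge names, the weights in `WEIGHTS` and the three routes in `ROUTES` are made up, because the real graph and its $w_e$ values appear only in the figure.

```python
# Hypothetical network, NOT the one in the exercise figure:
# the edge names, weights w_e and routes below are made up for illustration.
WEIGHTS = {"sa": 1, "at": 2, "sb": 2, "bt": 1, "ab": 1}
ROUTES = {
    "R1": ["sa", "at"],
    "R2": ["sb", "bt"],
    "R3": ["sa", "ab", "bt"],
}
NUM_CARS = 100


def edge_loads(flows, routes):
    """c_e = total number of cars whose chosen route uses edge e."""
    loads = {}
    for route, cars in flows.items():
        for e in routes[route]:
            loads[e] = loads.get(e, 0) + cars
    return loads


def total_time(flows, routes=ROUTES, weights=WEIGHTS):
    """Sum over edges of c_e * t(e, c_e), assuming t(e, c) = w_e * (c / 40) ** 2."""
    loads = edge_loads(flows, routes)
    return sum(weights[e] * c * (c / 40) ** 2 for e, c in loads.items())


def compositions(total, parts):
    """Yield every tuple of `parts` non-negative integers summing to `total`."""
    if parts == 1:
        yield (total,)
        return
    for first in range(total + 1):
        for rest in compositions(total - first, parts - 1):
            yield (first,) + rest


def brute_force(num_cars=NUM_CARS, routes=ROUTES, weights=WEIGHTS):
    """Exhaustively search integer route splits; return (best_time, flows)."""
    names = list(routes)
    best = None
    for split in compositions(num_cars, len(names)):
        flows = dict(zip(names, split))
        value = total_time(flows, routes, weights)
        if best is None or value < best[0]:
            best = (value, flows)
    return best


if __name__ == "__main__":
    print(total_time({"R1": 100, "R2": 0, "R3": 0}))  # 1875.0
    print(brute_force())
```

Enumeration is only viable here because the toy network has three routes. With 100 cars and 5 routes there are already about 4.6 million integer splits, so the real instance is better handed to an NLP solver. Since $c^3$ is convex for $c \ge 0$ and each $c_e$ is linear in $x$, the continuous relaxation of this model is a convex program.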