Question: Exercises. We can explore the connection between exponential families and the softmax in some more depth. Compute the second derivative of the cross-entropy loss for the softmax.

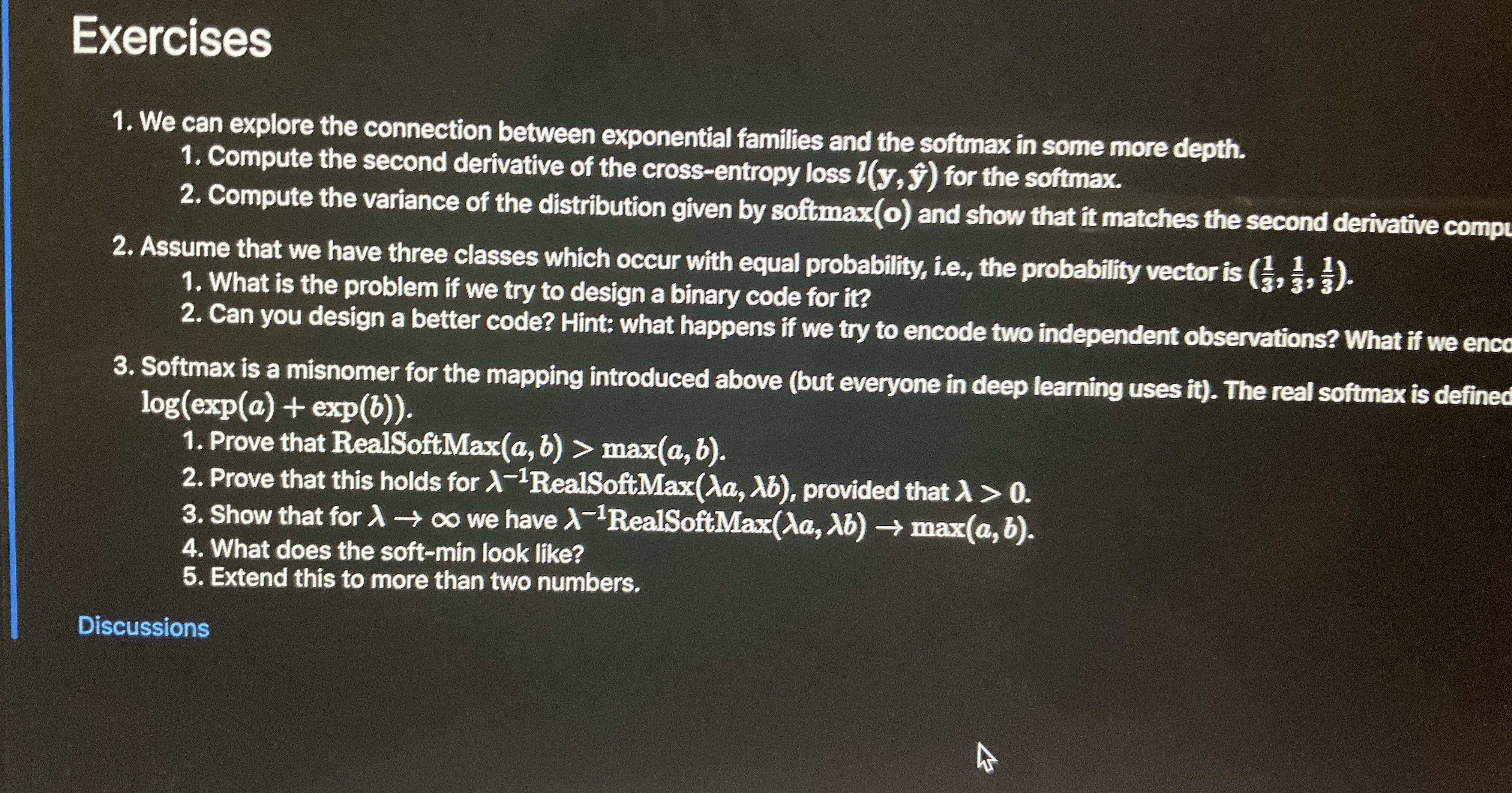

Exercises

We can explore the connection between exponential families and the softmax in some more depth.

Compute the second derivative of the cross-entropy loss $l(\mathbf{y}, \hat{\mathbf{y}})$ for the softmax.

Compute the variance of the distribution given by $\mathrm{softmax}(\mathbf{o})$ and show that it matches the second derivative computed above.
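A quick numerical check can help confirm a hand derivation here. The sketch below is only an illustration, not a proof: it assumes the loss is $-\sum_j y_j \log \mathrm{softmax}(\mathbf{o})_j$ with a label vector $\mathbf{y}$ that sums to 1, takes derivatives with respect to the logits $\mathbf{o}$, and compares a finite-difference Hessian with the covariance matrix $\mathrm{diag}(\mathbf{p}) - \mathbf{p}\mathbf{p}^\top$ of a one-hot random variable drawn from $\mathbf{p} = \mathrm{softmax}(\mathbf{o})$. The function names are hypothetical helpers introduced for this example.

```python
import math

def softmax(o):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(o)
    exps = [math.exp(v - m) for v in o]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(o, y):
    # Assumed loss: l(y, softmax(o)) = -sum_j y_j * log softmax(o)_j
    p = softmax(o)
    return -sum(yj * math.log(pj) for yj, pj in zip(y, p))

def numerical_hessian(o, y, eps=1e-3):
    # Central finite differences for d^2 l / (do_i do_j).
    n = len(o)
    hessian = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            def f(di, dj):
                shifted = list(o)
                shifted[i] += di
                shifted[j] += dj
                return cross_entropy(shifted, y)
            hessian[i][j] = (
                f(eps, eps) - f(eps, -eps) - f(-eps, eps) + f(-eps, -eps)
            ) / (4 * eps * eps)
    return hessian

def softmax_covariance(o):
    # Covariance of a one-hot vector sampled from p: diag(p) - p p^T.
    p = softmax(o)
    n = len(p)
    return [
        [(p[i] if i == j else 0.0) - p[i] * p[j] for j in range(n)]
        for i in range(n)
    ]

if __name__ == "__main__":
    o = [1.0, -0.5, 2.0]   # arbitrary example logits
    y = [0.0, 0.0, 1.0]    # one-hot label (sums to 1)
    for h_row, c_row in zip(numerical_hessian(o, y), softmax_covariance(o)):
        print([round(v, 5) for v in h_row], [round(v, 5) for v in c_row])
```

The two matrices should agree up to finite-difference error, and the result does not depend on which one-hot label is chosen, since the label only enters the loss linearly in the logits.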

Assume that we have three classes which occur with equal probability, i.e., the probability vector is $(\frac{1}{3}, \frac{1}{3}, \frac{1}{3})$.

What is the problem if we try to design a binary code for it?

Can you design a better code? Hint: what happens if we try to encode two independent observations? What if we encode $n$ observations jointly?
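To get a feel for the hint, the following sketch compares the entropy of three equally likely classes, $\log_2 3$ bits, with the cost per observation of a fixed-length binary code that encodes a block of observations jointly. Restricting attention to fixed-length block codes is an assumption made for this illustration (variable-length codes are another option), and `bits_per_symbol` is a hypothetical helper name.

```python
import math

def bits_per_symbol(n, num_classes=3):
    # A block of n independent observations has num_classes**n outcomes.
    outcomes = num_classes ** n
    # Smallest k such that 2**k >= outcomes, i.e. the fixed-length code size.
    block_bits = (outcomes - 1).bit_length()
    return block_bits / n

if __name__ == "__main__":
    entropy = math.log2(3)  # lower bound in bits per observation
    print(f"entropy: {entropy:.4f} bits")
    for n in (1, 2, 3, 5, 10, 50):
        print(f"n={n:3d}: {bits_per_symbol(n):.4f} bits per observation")
```

Encoding a single observation costs 2 bits although only about 1.585 bits of information are present. Longer blocks bring the cost per observation closer to the entropy without ever reaching it, since $3^n$ is never a power of two.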

Softmax is a misnomer for the mapping introduced above (but everyone in deep learning uses it). The real softmax is defined as $\mathrm{RealSoftMax}(a, b) = \log(\exp(a) + \exp(b))$.

Prove that $\mathrm{RealSoftMax}(a, b) > \max(a, b)$.

Prove that this holds for $\lambda^{-1} \mathrm{RealSoftMax}(\lambda a, \lambda b)$, provided that $\lambda > 0$.

Show that for $\lambda \to \infty$ we have $\lambda^{-1} \mathrm{RealSoftMax}(\lambda a, \lambda b) \to \max(a, b)$.
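The behaviour asked about here can be explored numerically before attempting a proof. The sketch below assumes the definition $\mathrm{RealSoftMax}(a, b) = \log(\exp(a) + \exp(b))$ and a scaled variant $\lambda^{-1}\mathrm{RealSoftMax}(\lambda a, \lambda b)$ with $\lambda > 0$; both function names are hypothetical.

```python
import math

def real_softmax(a, b):
    # log(exp(a) + exp(b)), computed stably by factoring out the maximum.
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))

def scaled_real_softmax(a, b, lam):
    # Assumed scaled form: RealSoftMax(lam * a, lam * b) / lam for lam > 0.
    if lam <= 0:
        raise ValueError("lam must be positive")
    return real_softmax(lam * a, lam * b) / lam

if __name__ == "__main__":
    a, b = 1.0, 2.5
    for lam in (0.5, 1.0, 2.0, 10.0, 100.0):
        value = scaled_real_softmax(a, b, lam)
        print(f"lam={lam:6.1f}: value={value:.8f}, gap={value - max(a, b):.3e}")
```

Writing the value as $\max(a, b) + \lambda^{-1}\log(1 + \exp(-\lambda |a - b|))$ shows why the gap is positive and at most $\lambda^{-1}\log 2$. For very large $\lambda$ the gap underflows to zero in floating point, so the strict inequality can only be observed numerically for moderate $\lambda$.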

What does the softmin look like?

Extend this to more than two numbers.
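One possible way to approach the last two questions is sketched below. It assumes that the natural extension to several numbers is the log-sum-exp $\log \sum_i \exp(x_i)$ and that a soft minimum can be obtained by negating the inputs and the output. These are assumptions made for illustration rather than definitions taken from the exercise, and the function names are hypothetical.

```python
import math

def real_softmax_n(values, lam=1.0):
    # Scaled log-sum-exp: log(sum_i exp(lam * x_i)) / lam, computed stably.
    scaled = [lam * v for v in values]
    m = max(scaled)
    return (m + math.log(sum(math.exp(s - m) for s in scaled))) / lam

def real_softmin_n(values, lam=1.0):
    # Soft minimum via negation: -RealSoftMax(-x_1, ..., -x_n).
    return -real_softmax_n([-v for v in values], lam)

if __name__ == "__main__":
    xs = [0.3, -1.2, 2.0]
    for lam in (1.0, 10.0, 100.0):
        print(
            f"lam={lam:6.1f}: "
            f"softmax={real_softmax_n(xs, lam):.6f} (max={max(xs)}), "
            f"softmin={real_softmin_n(xs, lam):.6f} (min={min(xs)})"
        )
```

Under these assumptions the soft maximum of $n$ numbers exceeds the true maximum by at most $\lambda^{-1}\log n$, and the soft minimum falls below the true minimum by at most the same amount.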
