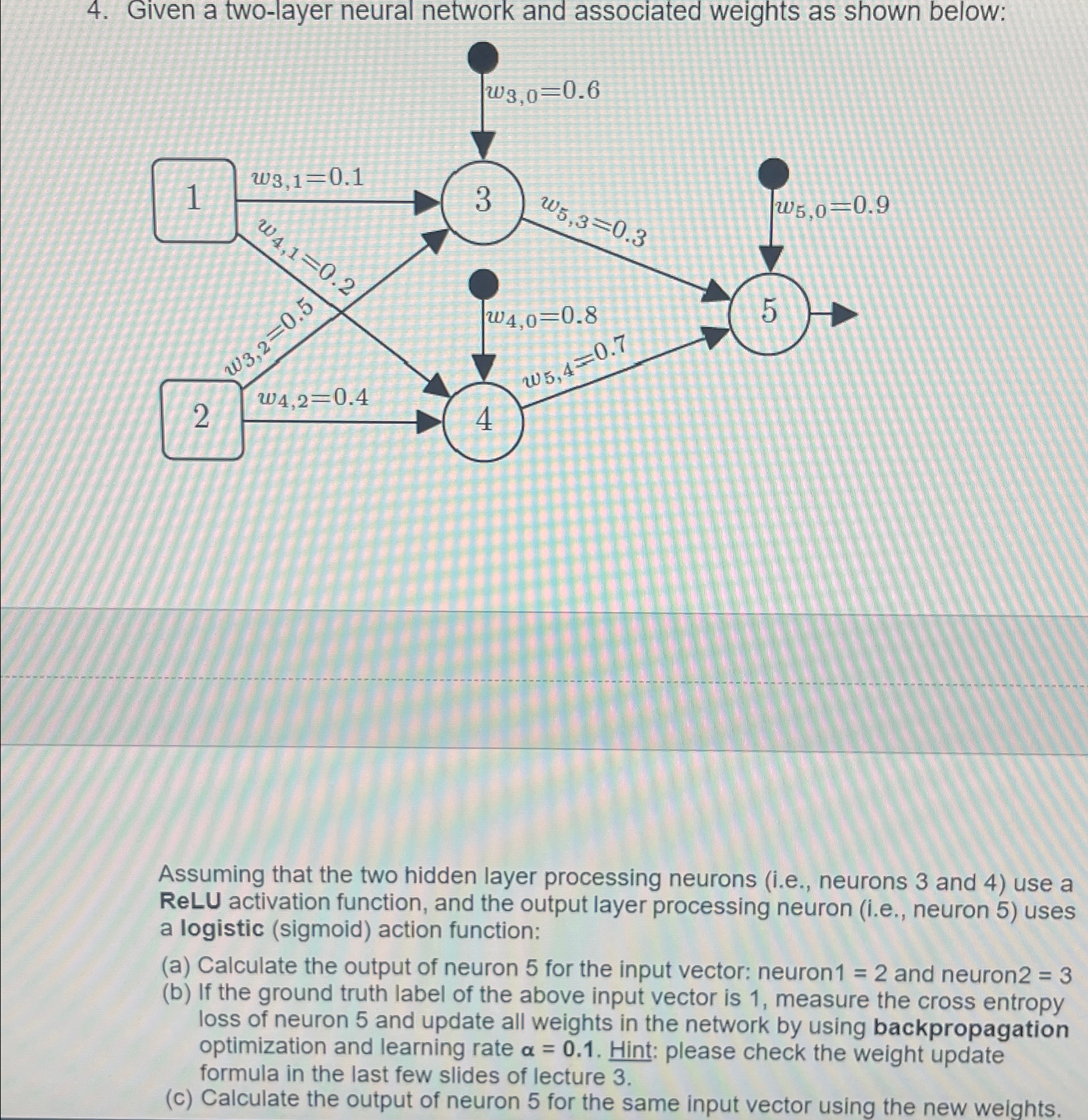


Given a two-layer neural network and associated weights as shown below:

Assume that the two hidden-layer processing neurons (i.e., neurons and) use a ReLU activation function, and that the output-layer processing neuron (i.e., neuron) uses a logistic sigmoid activation function.
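The neuron labels and weight values from the figure are not recoverable from this text, so the following uses hypothetical notation: inputs $x_1, x_2$, input-to-hidden weights $w_{ij}$ (from input $i$ to hidden neuron $j$), and hidden-to-output weights $v_j$. It also assumes there are no bias terms. Under those assumptions, the forward computation and the activation derivatives that backpropagation needs would be:

```latex
\begin{aligned}
z_j &= w_{1j}\,x_1 + w_{2j}\,x_2, \qquad h_j = \mathrm{ReLU}(z_j) = \max(0, z_j), \qquad j \in \{1, 2\} \\
z_o &= v_1 h_1 + v_2 h_2, \qquad \hat{y} = \sigma(z_o) = \frac{1}{1 + e^{-z_o}} \\
\mathrm{ReLU}'(z) &= \begin{cases} 1, & z > 0 \\ 0, & z \le 0 \end{cases}, \qquad \sigma'(z) = \sigma(z)\,\bigl(1 - \sigma(z)\bigr)
\end{aligned}
```

ReLU is not differentiable at $z = 0$; taking the derivative there as 0 is a common convention, not something the question specifies.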

a) Calculate the output of neuron for the input vector: neuron and neuron.

b) If the ground-truth label of the above input vector is, measure the cross-entropy loss of neuron and update all weights in the network using backpropagation with learning rate. Hint: please check the weight-update formula in the last few slides of lecture.

c) Calculate the output of neuron for the same input vector using the new weights.
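Because the figure with the actual weights, inputs, label, and learning rate is not reproduced here, the following is only a minimal sketch of the procedure for parts a) to c). It assumes no bias terms, plain gradient descent ($w \leftarrow w - \eta\,\partial L/\partial w$) as the update rule (the lecture's formula may differ), and binary cross-entropy for the sigmoid output. All values (`x`, `W`, `v`, `y`, `lr`) and helper names (`forward`, `bce_loss`, `backprop_step`) are hypothetical placeholders; substitute the numbers from the figure before relying on anything it prints.

```python
import math

# Hypothetical placeholder values: the real ones are in the figure, which is not reproduced here.
x = [1.0, 2.0]            # input vector [x1, x2]
W = [[0.5, -0.3],         # W[i][j]: weight from input i to hidden neuron j
     [0.2, 0.4]]
v = [0.7, -0.6]           # v[j]: weight from hidden neuron j to the output neuron
y = 1                     # ground-truth label (0 or 1)
lr = 0.1                  # learning rate (eta)


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W, v):
    """Return hidden pre-activations, hidden outputs, and the sigmoid output (no biases assumed)."""
    z_hidden = [x[0] * W[0][j] + x[1] * W[1][j] for j in range(2)]
    h = [relu(z) for z in z_hidden]
    y_hat = sigmoid(v[0] * h[0] + v[1] * h[1])
    return z_hidden, h, y_hat


def bce_loss(y_hat, y):
    """Binary cross-entropy for a single sigmoid output."""
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))


def backprop_step(x, y, W, v, lr):
    """One gradient-descent step, w <- w - lr * dL/dw, for every weight."""
    z_hidden, h, y_hat = forward(x, W, v)
    delta_out = y_hat - y                          # dL/dz_out for sigmoid + cross-entropy
    grad_v = [delta_out * h[j] for j in range(2)]  # dL/dv_j = delta_out * h_j
    # dL/dz_j uses the OLD output weights v; ReLU'(z) is taken as 0 for z <= 0.
    delta_hidden = [delta_out * v[j] * (1.0 if z_hidden[j] > 0 else 0.0) for j in range(2)]
    grad_W = [[delta_hidden[j] * x[i] for j in range(2)] for i in range(2)]
    new_W = [[W[i][j] - lr * grad_W[i][j] for j in range(2)] for i in range(2)]
    new_v = [v[j] - lr * grad_v[j] for j in range(2)]
    return new_W, new_v, grad_W, grad_v


# a) Output with the original weights.
_, _, y_hat_a = forward(x, W, v)
loss_a = bce_loss(y_hat_a, y)

# b) Cross-entropy loss and one backpropagation update.
new_W, new_v, grad_W, grad_v = backprop_step(x, y, W, v, lr)

# c) Output for the same input with the updated weights.
_, _, y_hat_c = forward(x, new_W, new_v)
loss_c = bce_loss(y_hat_c, y)

print(f"a) output = {y_hat_a:.4f}, loss = {loss_a:.4f}")
print(f"b) new W = {new_W}, new v = {new_v}")
print(f"c) output = {y_hat_c:.4f}, loss = {loss_c:.4f}")
```

One design point worth noting: all gradients are computed from the same forward pass, so the hidden-layer deltas use the output weights from before the update. Updating `v` first and then reusing it for the hidden-layer gradients would no longer be a single backpropagation step.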
