Question: Q1: Consider an RBF network with four input units, two neurons with Gaussian functions in its hidden layer, and one output unit with a linear activation function

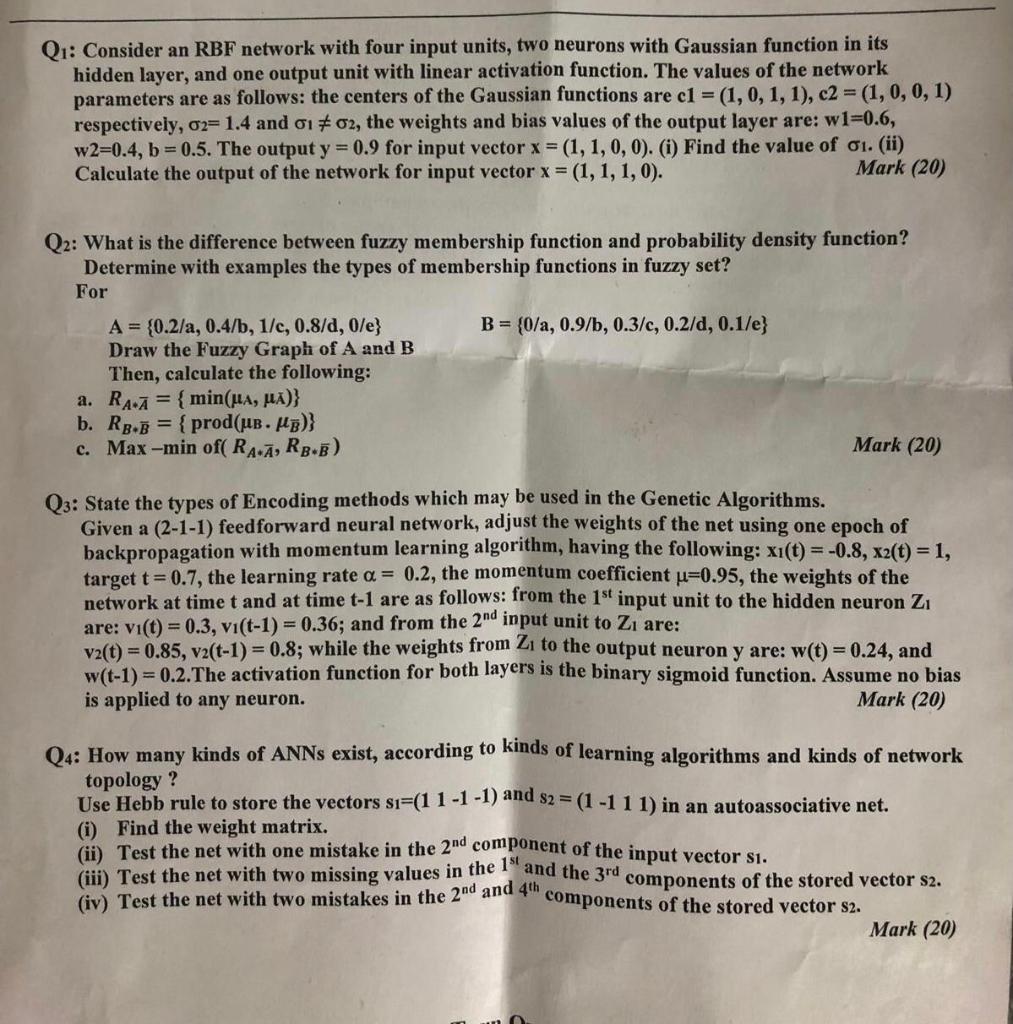

Q1: Consider an RBF network with four input units, two hidden-layer neurons with Gaussian activation functions, and one output unit with a linear activation function. The network parameters are as follows: the centers of the Gaussian functions are c1 = (1, 0, 1, 1) and c2 = (1, 0, 0, 1), respectively; σ2 = 1.4 and σ1 ≠ σ2; the weights and bias of the output layer are w1 = 0.6, w2 = 0.4, b = 0.5. The output is y = 0.9 for the input vector x = (1, 1, 0, 0).
(i) Find the value of σ1.
(ii) Calculate the output of the network for the input vector x = (1, 1, 1, 0).
Mark (20)

Q2: What is the difference between a fuzzy membership function and a probability density function? Describe, with examples, the types of membership functions used in fuzzy sets. For
B = {0/a, 0.9/b, 0.3/c, 0.2/d, 0.1/e}
A = {0.2/a, 0.4/b, 1/c, 0.8/d, 0/e}
draw the fuzzy graphs of A and B. Then calculate the following:
a. R_A×A = {min(μA, μA)}
b. R_B×B = {prod(μB, μB)}
c. Max-min composition of (R_A×A, R_B×B)
Mark (20)

Q3: State the types of encoding methods that may be used in genetic algorithms. Given a (2-1-1) feedforward neural network, adjust the weights of the net using one epoch of the backpropagation-with-momentum learning algorithm, given the following: x1(t) = -0.8, x2(t) = 1, target t = 0.7, learning rate α = 0.2, momentum coefficient μ = 0.95. The weights of the network at time t and at time t-1 are as follows: from the 1st input unit to the hidden neuron Z, v1(t) = 0.3 and v1(t-1) = 0.36; from the 2nd input unit to Z, v2(t) = 0.85 and v2(t-1) = 0.8; from Z to the output neuron y, w(t) = 0.24 and w(t-1) = 0.2. The activation function for both layers is the binary sigmoid function. Assume no bias is applied to any neuron.
Mark (20)

Q4: What kinds of ANNs exist, classified by learning algorithm and by network topology? Use the Hebb rule to store the vectors s1 = (1, 1, -1, -1) and s2 = (1, -1, 1, 1) in an autoassociative net.
(i) Find the weight matrix.
(ii) Test the net with one mistake in the 2nd component of the input vector s1.
(iii) Test the net with two missing values in the 1st and the 3rd components of the stored vector s2.
(iv) Test the net with two mistakes in the 2nd and 4th components of the stored vector s2.
Mark (20)
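The Hebb-rule storage and recall tests in Q4 can be sketched in a few lines of Python. This is an illustrative sketch under several assumptions that the question does not state: the stored vectors are read as s1 = (1, 1, -1, -1) and s2 = (1, -1, 1, 1), the diagonal of the weight matrix is kept (not zeroed), a missing component is entered as 0, and the output activation is a sign function that leaves a net input of 0 at 0. The helper names `hebb_weights` and `recall` are hypothetical.

```python
def hebb_weights(patterns):
    """Sum of outer products s^T s over all stored bipolar patterns."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                w[i][j] += p[i] * p[j]
    return w


def sign(v):
    """Assumed activation: +1 for v > 0, -1 for v < 0, 0 for v == 0."""
    return (v > 0) - (v < 0)


def recall(w, x):
    """Compute net input x.W for each unit and apply the sign activation."""
    n = len(x)
    net = [sum(x[i] * w[i][j] for i in range(n)) for j in range(n)]
    return [sign(v) for v in net]


s1 = [1, 1, -1, -1]
s2 = [1, -1, 1, 1]
W = hebb_weights([s1, s2])
print("W =", W)

# Test inputs for parts (ii) to (iv); 0 marks a missing component (assumption).
tests = {
    "(ii) s1 with 2nd component flipped": [1, -1, -1, -1],
    "(iii) s2 with 1st and 3rd components missing": [0, -1, 0, 1],
    "(iv) s2 with 2nd and 4th components flipped": [1, 1, 1, -1],
}
for label, x in tests.items():
    print(label, x, "->", recall(W, x))
```

Under these assumptions, test (ii) recovers s1, test (iii) restores every component except the first (the first unit has no nonzero weights to the other units), and test (iv) responds with s1 rather than s2. A different convention, such as zeroing the diagonal or mapping a net input of 0 to -1, may change the details.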

Step by Step Solution
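For Q1, one way to check the arithmetic is the short Python sketch below. It assumes the Gaussian hidden units have the form φ(x) = exp(-||x - c||² / (2σ²)); the question does not state the exact form, and a different convention (for example, dividing by σ² only) would give different numbers. The function names are hypothetical.

```python
import math


def sq_dist(x, c):
    """Squared Euclidean distance between input x and center c."""
    return sum((a - b) ** 2 for a, b in zip(x, c))


def gaussian(x, c, sigma):
    """Assumed Gaussian form: exp(-||x - c||^2 / (2 * sigma^2))."""
    return math.exp(-sq_dist(x, c) / (2.0 * sigma ** 2))


def rbf_output(x, centers, sigmas, weights, bias):
    """Linear output unit: bias plus weighted sum of hidden activations."""
    return bias + sum(
        w * gaussian(x, c, s) for w, c, s in zip(weights, centers, sigmas)
    )


c1, c2 = (1, 0, 1, 1), (1, 0, 0, 1)
w1, w2, b = 0.6, 0.4, 0.5
sigma2 = 1.4

# (i) Solve y = w1*phi1 + w2*phi2 + b for phi1, then invert the Gaussian.
x_a = (1, 1, 0, 0)
y_a = 0.9
phi1 = (y_a - b - w2 * gaussian(x_a, c2, sigma2)) / w1
sigma1 = math.sqrt(-sq_dist(x_a, c1) / (2.0 * math.log(phi1)))

# (ii) Forward pass for the second input with the recovered sigma1.
x_b = (1, 1, 1, 0)
y_b = rbf_output(x_b, (c1, c2), (sigma1, sigma2), (w1, w2), b)

print(f"sigma1 = {sigma1:.4f}")
print(f"y(x_b) = {y_b:.4f}")
```

With the assumed Gaussian form, this gives σ1 ≈ 1.065 and an output of about 0.934 for x = (1, 1, 1, 0).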
