Question: Hello, please modify this code: import pyspark sc = pyspark.SparkContext ( ) def NASDAQ ( line ) : try: fields = line.split ( ' ,

Hello, please modify this code:

import pyspark

sc pyspark.SparkContext

def NASDAQline:

try:

fields line.split

if lenfields:

return False

#intfields:

return True

except:

return False

def COMPANYLISTline:

try:

fields line.splitt

if lenfields or IPOyear in line and "Symbol" in line:

return False

return True

except:

return False

#Load files and clean

nasdaq sctextFilehomekiniveraBigDataPartialinputNASDAQsamplecsv

companylist sctextFilehomekiniveraBigDataPartialinputcompanylisttsv

nasdaq nasdaq.filterNASDAQ

companylist companylist.filterCOMPANYLIST

nasdaq nasdaq.maplambda l: lsplitlsplit: intlsplit #symbol,daten

companylist companylist.maplambda l: lsplitt lsplitt #symbol,sector

joinedrdd nasdaq.joincompanylist #symbol,datensector

#printjoinedrddtake

features joinedrddmaplambda row: row row row #sectordate n

# Reduce by key Year Sector by adding the number of operations

sectorcounts features.reduceByKeylambda x y: x y#sectoryearn

#printsectorcounts.take

# Find the sector with the highest number of operations for each year

maxsectorperyear sectorcounts.maplambda x: xxx #yearnsector

result maxsectorperyear.reduceByKeylambda x y: x if x y else y #yearmajor nsector

#printresulttake

#order x year

result result.sortByKey

# Convert the RDD to a format suitable for saving as text

maxsectorperyearformatted result.maplambda x: xformatx x

# Save the RDD as a text file

maxsectorperyearformatted maxsectorperyearformatted.coalesce

maxsectorperyearformatted.saveAsTextFileout"

This was the statement given for that exercise: RDD manipulation using transformation and action operations and performance optimization using RDD are evaluated. The execution time is also evaluated

This point takes into account the Nasdaq and companylist datasets. Remember the data format is: For NASDAQ: exchange, stock symbol, date, stock opening price, stock high price, stock low price, stock closing price, stock volume

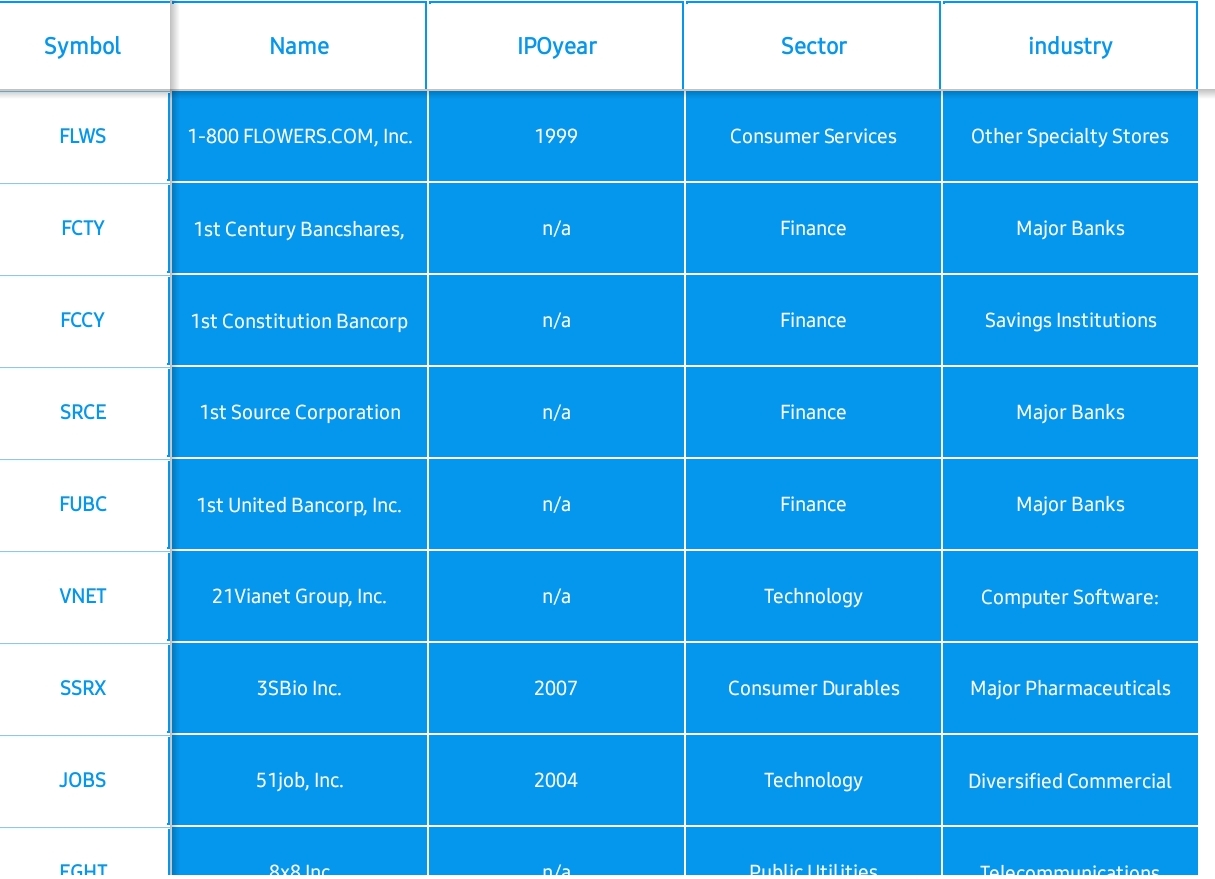

and adjusted closing price of the stock. For companylist: Symbol, Name, initial public offering year IPOyear and industry sector.

Calculate, for each year of the DataSet given for point which sector had the greatest number of operations. The output must mention the year, the name of the sector and the overall value of operations. The result should look like:

Finance,

Pharma,

Finance,

Deliverable : spark script where RDD is used to solve the problem, with the

name topsectorperyear.py the lines must be explained within the script

code fundamentals

Deliverable : Output file with the results, with the name out.txt

Now we have to solve this statement of a Big Data exercise and data frames cannot be used.

Calculate, for each company and business sector, which company grew the most per year, also listing the percentage of growth. The results should be in a format similar to:

Finance,ABCD,

Finance,VFER,

Deliverable : spark script where RDD is used to solve the problem, with the

name topcompanypersector.py The fundamental lines of BigDataspark code must be explained within the script

Deliverable : Output file with the results, with the name out.txt

This must be done in a Linux virtual machine. The data companylist.tsv has headers Name, IPOyear Sector, and industry. In IPOyear some are with na and others with dates of years. The data in NADASQsample.csv has no statements.

tableSymbolName,IPOyear,Sector,industryFLWS FLOWERS.COM, Inc.,Consumer Services,Other Specialty StoresFCTYst Century Bancshares,,naFinance,Major BanksFCCYst Constitution Bancorp,naFinance,Savings InstitutionsSRCEst Source Corporation,naFinance,Major BanksFUBCst United Bancorp, Inc.,Finance,Major BanksVNETVianet Group, Inc.,Technology,Computer Software:SSRXSBio Inc.,Consumer Durables,Major PharmaceuticalsJOBSjob, Inc.,Technology,Diversified CommercialFGHTDublic lltiliti,

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock