Question: Hi, I need help with this please. An Uber driver only works in two main cities: City A and City B. The driver may choose

Hi, I need help with this please.

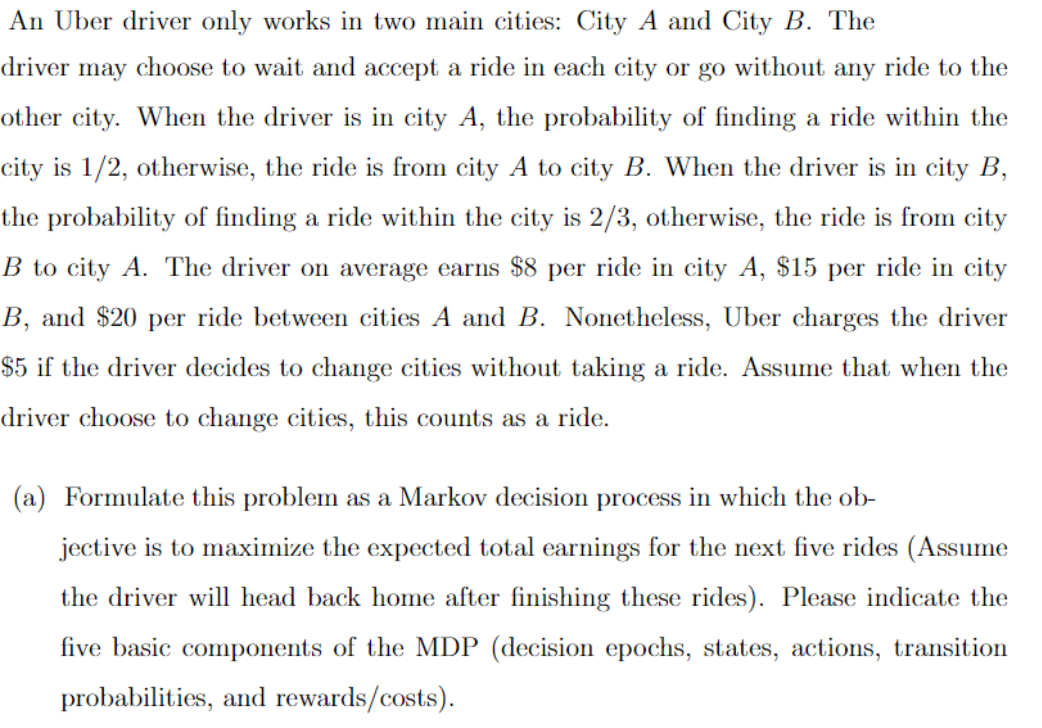

An Uber driver only works in two main cities: City A and City B. The driver may choose to wait and accept a ride in each city or go without any ride to the other city. When the driver is in city A, the probability of finding a ride within the city is 1/2; otherwise, the ride is from city A to city B. When the driver is in city B, the probability of finding a ride within the city is 2/3; otherwise, the ride is from city B to city A. The driver on average earns $8 per ride in city A, $15 per ride in city B, and $20 per ride between cities A and B. Nonetheless, Uber charges the driver $5 if the driver decides to change cities without taking a ride. Assume that when the driver chooses to change cities, this counts as a ride.

(a) Formulate this problem as a Markov decision process in which the objective is to maximize the expected total earnings for the next five rides. (Assume the driver will head back home after finishing these rides.) Please indicate the five basic components of the MDP: decision epochs, states, actions, transition probabilities, and rewards/costs.
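One possible reading of the five components, offered as a sketch rather than a verified answer: the decision epochs are the five rides (t = 1, ..., 5), the states are the driver's current city (A or B), and the actions are to wait for a ride or to move to the other city without one. The names `STATES`, `ACTIONS`, `TRANSITIONS`, and `backward_induction` below are illustrative and do not come from the question. The sketch also assumes that a reward is earned on the transition itself, that moving without a ride is deterministic and costs $5, and that nothing is earned after the fifth ride.

```python
from fractions import Fraction as F

# Assumed MDP components (one reading of the problem statement).
STATES = ("A", "B")          # city the driver is currently in
ACTIONS = ("wait", "move")   # wait for a ride, or change cities without one
HORIZON = 5                  # decision epochs t = 1, ..., 5 (one per ride)

# TRANSITIONS[state][action] -> list of (probability, next_state, reward)
TRANSITIONS = {
    "A": {
        "wait": [(F(1, 2), "A", 8), (F(1, 2), "B", 20)],
        "move": [(F(1), "B", -5)],   # $5 charge, counts as a ride
    },
    "B": {
        "wait": [(F(2, 3), "B", 15), (F(1, 3), "A", 20)],
        "move": [(F(1), "A", -5)],   # $5 charge, counts as a ride
    },
}


def backward_induction(horizon=HORIZON):
    """Finite-horizon dynamic programming with zero terminal value."""
    V = {horizon + 1: {s: F(0) for s in STATES}}  # no earnings after the last ride
    policy = {}
    for t in range(horizon, 0, -1):
        V[t], policy[t] = {}, {}
        for s in STATES:
            # Expected immediate reward plus expected value-to-go, per action.
            q = {
                a: sum(p * (r + V[t + 1][s2]) for p, s2, r in TRANSITIONS[s][a])
                for a in ACTIONS
            }
            best = max(q, key=q.get)
            V[t][s], policy[t][s] = q[best], best
    return V, policy


V, policy = backward_induction()
for t in range(1, HORIZON + 1):
    print(t, {s: (policy[t][s], float(V[t][s])) for s in STATES})
```

Exact fractions are used so the probabilities 1/2, 2/3, and 1/3 are not rounded. Under these assumptions the expected one-step reward for waiting is $14 in city A and $50/3 in city B, both well above the $5 charge for moving, so waiting comes out ahead at every epoch in this sketch.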
