Question: https://archive.ics.uci.edu/ml/datasets/Real+estate+valuation+data+set Ridge regression is a modified version of linear regression that penalizes the coefficients for being large. It accomplishes this by adding a so-called L2

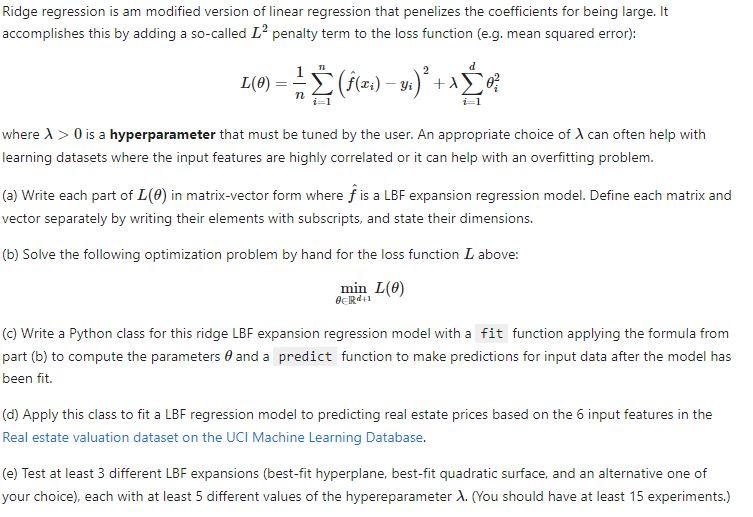

Ridge regression is a modified version of linear regression that penalizes the coefficients for being large. It accomplishes this by adding a so-called L2 penalty term to the loss function (e.g. mean squared error):

$$L(\theta) = \frac{1}{n}\sum_{i=1}^{n}\left(\hat{f}(x_i) - y_i\right)^2 + \lambda\sum_{i=1}^{d}\theta_i^2$$

where $\lambda > 0$ is a hyperparameter that must be tuned by the user. An appropriate choice of $\lambda$ can often help with learning datasets where the input features are highly correlated, or it can help with an overfitting problem.

(a) Write each part of $L(\theta)$ in matrix-vector form, where $\hat{f}$ is an LBF expansion regression model. Define each matrix and vector separately by writing their elements with subscripts, and state their dimensions.

(b) Solve the following optimization problem by hand for the loss function $L$ above:

$$\min_{\theta \in \mathbb{R}^{d+1}} L(\theta)$$

(c) Write a Python class for this ridge LBF expansion regression model, with a function that applies the formula from part (b) to compute the parameters and a function that makes predictions for input data after the model has been fit.

(d) Apply this class to fit an LBF regression model that predicts real estate prices from the 6 input features in the Real estate valuation dataset on the UCI Machine Learning Repository.

(e) Test at least 3 different LBF expansions (best-fit hyperplane, best-fit quadratic surface, and an alternative one of your choice), each with at least 5 different values of the hyperparameter $\lambda$. (You should have at least 15 experiments.)
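As a starting point for parts (b) and (c), here is a minimal sketch, not a verified solution. It assumes that "LBF" means linear basis function expansion, that the coefficient vector is called $\theta$ and the penalty weight $\lambda$, and that the intercept $\theta_0$ is left unpenalized because the penalty sum starts at $i = 1$. Under those assumptions, writing the loss as $\frac{1}{n}\lVert\Phi\theta - y\rVert^2 + \lambda\,\theta^\top D\,\theta$ with $D = \mathrm{diag}(0, 1, \dots, 1)$ and setting the gradient to zero gives $(\Phi^\top\Phi + n\lambda D)\,\theta = \Phi^\top y$. The class name `RidgeLBFRegression`, the `basis` argument, and the helper `quadratic_basis` are hypothetical names chosen for illustration.

```python
import numpy as np


class RidgeLBFRegression:
    """Ridge regression on a linear basis function (LBF) expansion.

    Minimizes (1/n) * ||Phi @ theta - y||^2 + lam * sum(theta[1:] ** 2),
    leaving the intercept theta[0] unpenalized (an assumption, see lead-in).
    """

    def __init__(self, lam=1.0, basis=None):
        self.lam = lam
        # basis maps an (n, p) input array to an (n, d) feature array without
        # the constant column; the default identity gives a hyperplane fit.
        self.basis = basis if basis is not None else (lambda X: X)
        self.theta = None

    def _design(self, X):
        # Build the (n, d + 1) design matrix Phi with a leading column of ones.
        X = np.asarray(X, dtype=float)
        return np.column_stack([np.ones(len(X)), self.basis(X)])

    def fit(self, X, y):
        Phi = self._design(X)
        y = np.asarray(y, dtype=float)
        n, m = Phi.shape
        # D is the identity with a zero in the intercept position.
        D = np.eye(m)
        D[0, 0] = 0.0
        # Solve the normal equations (Phi^T Phi + n * lam * D) theta = Phi^T y.
        self.theta = np.linalg.solve(Phi.T @ Phi + n * self.lam * D, Phi.T @ y)
        return self

    def predict(self, X):
        if self.theta is None:
            raise RuntimeError("call fit before predict")
        return self._design(X) @ self.theta


def quadratic_basis(X):
    """Linear terms plus all products x_j * x_k with j <= k."""
    X = np.asarray(X, dtype=float)
    p = X.shape[1]
    cross = [X[:, j] * X[:, k] for j in range(p) for k in range(j, p)]
    return np.column_stack([X] + cross)
```

For part (e), one could loop over a few basis functions (for example `None` for the hyperplane and `quadratic_basis` for the quadratic surface) and several values of `lam`, fitting on a training split and comparing held-out error. Because the penalty acts on raw coefficient sizes, standardizing the 6 input features before expansion is likely to make the values of `lam` more comparable across features.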
