Question: I need a little help for my projecte. Objective: Comparative study of Dimensionality Reduction Techniques and their Impact on Regression and Visualization. Dataset: The dataset

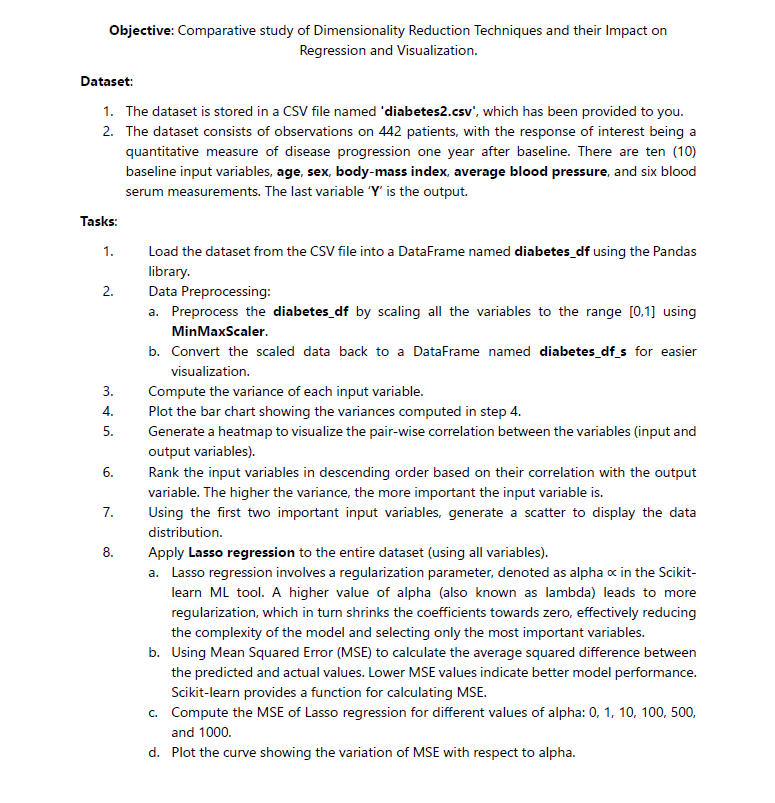

I need a little help with my project. Objective: Comparative study of Dimensionality Reduction Techniques and their Impact on Regression and Visualization.

Dataset:

The dataset is stored in a CSV file named 'diabetes.csv', which has been provided to you.

The dataset consists of observations on patients, with the response of interest being a quantitative measure of disease progression one year after baseline. There are ten baseline input variables: age, sex, body mass index, average blood pressure, and six blood serum measurements. The last variable, Y, is the output.

Task:

Load the dataset from the CSV file into a DataFrame named diabetesdf using the pandas library.
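A minimal loading sketch, assuming `diabetes.csv` is comma-separated, sits in the working directory and has a header row listing the ten inputs followed by the output column. The function name `load_diabetes_csv` and the 11-column sanity check are illustrative additions, not part of the assignment; pass `sep=...` to `pd.read_csv` if the file uses another delimiter.

```python
import pandas as pd


def load_diabetes_csv(path: str = "diabetes.csv") -> pd.DataFrame:
    """Read the diabetes CSV (assumes a header row and the output as the last column)."""
    df = pd.read_csv(path)
    # The task describes ten input variables plus one output column.
    if df.shape[1] != 11:
        raise ValueError(f"expected 11 columns (10 inputs + output), got {df.shape[1]}")
    return df
```

Usage: `diabetesdf = load_diabetes_csv("diabetes.csv")`, then `diabetesdf.head()` to inspect the first rows.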

Data Preprocessing:

a) Preprocess diabetesdf by scaling all the variables to the range … using MinMaxScaler.

b) Convert the scaled data back to a DataFrame named diabetesdfs for easier visualization.
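The scaling step could look like the sketch below. The helper name `scale_minmax` is hypothetical, and the target range is an assumption: the task's range is missing from this copy, so the sketch uses `MinMaxScaler`'s default of (0, 1). The output column is scaled along with the inputs, as the task asks, so any MSE computed later is in scaled units.

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler


def scale_minmax(df: pd.DataFrame, feature_range=(0, 1)) -> pd.DataFrame:
    """Scale every column (inputs and output) with MinMaxScaler; return a DataFrame."""
    scaler = MinMaxScaler(feature_range=feature_range)
    scaled = scaler.fit_transform(df)  # NumPy array, one column per original column
    # Wrap the array back into a DataFrame with the original column names and index.
    return pd.DataFrame(scaled, columns=df.columns, index=df.index)
```

Usage: `diabetesdfs = scale_minmax(diabetesdf)`.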

Compute the variance of each input variable.

Plot a bar chart showing the variances computed in the previous step.
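A sketch of the variance computation and its bar chart. So that it runs on its own, it rebuilds `diabetesdfs` from scikit-learn's bundled copy of the diabetes data (`sklearn.datasets.load_diabetes`); treating that copy as identical to `diabetes.csv`, and renaming its `target` column to `Y`, are assumptions, so replace those two lines with your own `diabetesdfs`. Note that `DataFrame.var()` returns the sample variance (ddof=1).

```python
import matplotlib.pyplot as plt
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.preprocessing import MinMaxScaler

# Stand-in for diabetesdfs: scikit-learn's bundled diabetes data (assumed to match
# diabetes.csv), with "target" renamed to "Y" and every column MinMax-scaled.
raw = load_diabetes(as_frame=True).frame.rename(columns={"target": "Y"})
diabetesdfs = pd.DataFrame(MinMaxScaler().fit_transform(raw), columns=raw.columns)

# Variance of each input variable: every column except the last one (the output).
variances = diabetesdfs.iloc[:, :-1].var()
print(variances.sort_values(ascending=False))

# Bar chart of the variances.
ax = variances.plot(kind="bar")
ax.set_xlabel("Input variable")
ax.set_ylabel("Variance (MinMax-scaled data)")
ax.set_title("Variance of each input variable")
plt.tight_layout()
plt.show()
```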

Generate a heatmap to visualize the pairwise correlations between the variables (input and output).
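The heatmap can be drawn with plain matplotlib, as sketched below. As a stand-in for the CSV, the block again rebuilds `diabetesdfs` from scikit-learn's bundled diabetes data with `target` renamed to `Y` (an assumption). MinMax scaling is a positive affine map per column, so it leaves Pearson correlations unchanged: the scaled and unscaled frames give the same matrix. If seaborn is installed, `seaborn.heatmap(corr, annot=True)` is a shorter alternative.

```python
import matplotlib.pyplot as plt
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.preprocessing import MinMaxScaler

# Stand-in for diabetesdfs (bundled diabetes data, assumed to match the CSV).
raw = load_diabetes(as_frame=True).frame.rename(columns={"target": "Y"})
diabetesdfs = pd.DataFrame(MinMaxScaler().fit_transform(raw), columns=raw.columns)

corr = diabetesdfs.corr()  # Pearson correlation of every pair, inputs and output
labels = corr.columns

fig, ax = plt.subplots(figsize=(8, 7))
im = ax.imshow(corr.to_numpy(), cmap="coolwarm", vmin=-1, vmax=1)
ax.set_xticks(range(len(labels)))
ax.set_xticklabels(labels, rotation=45, ha="right")
ax.set_yticks(range(len(labels)))
ax.set_yticklabels(labels)
# Print each coefficient inside its cell.
for i in range(len(labels)):
    for j in range(len(labels)):
        ax.text(j, i, f"{corr.iat[i, j]:.2f}", ha="center", va="center", fontsize=7)
fig.colorbar(im, ax=ax, label="Pearson correlation")
ax.set_title("Pairwise correlation of input and output variables")
fig.tight_layout()
plt.show()
```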

Rank the input variables in descending order of their correlation with the output variable. The higher the correlation, the more important the input variable is.
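One way to produce the ranking, again on scikit-learn's bundled diabetes data as a stand-in for `diabetesdfs` (an assumption). The task says "descending order of correlation"; this sketch sorts by the absolute correlation so that a strongly negative variable would also rank as important. That is a judgment call: use `corr_with_y.sort_values(ascending=False)` to rank by the signed value instead.

```python
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.preprocessing import MinMaxScaler

# Stand-in for diabetesdfs (bundled diabetes data, assumed to match the CSV).
raw = load_diabetes(as_frame=True).frame.rename(columns={"target": "Y"})
diabetesdfs = pd.DataFrame(MinMaxScaler().fit_transform(raw), columns=raw.columns)

target = diabetesdfs.columns[-1]  # the output is the last column
corr_with_y = diabetesdfs.corr()[target].drop(target)

# Sort by |correlation| (assumption) but keep the signed values for display.
order = corr_with_y.abs().sort_values(ascending=False).index
ranking = corr_with_y.loc[order]
print(ranking)

top_two = ranking.index[:2].tolist()
print("Two most important inputs:", top_two)
```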

Using the two most important input variables, generate a scatter plot to display the data distribution.
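A scatter sketch for the two top-ranked inputs. It recomputes the absolute-correlation ranking so the block stands alone, and uses scikit-learn's bundled diabetes data as a stand-in for `diabetesdfs` (an assumption). Coloring the points by `Y` is an added choice, not part of the task.

```python
import matplotlib.pyplot as plt
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.preprocessing import MinMaxScaler

# Stand-in for diabetesdfs (bundled diabetes data, assumed to match the CSV).
raw = load_diabetes(as_frame=True).frame.rename(columns={"target": "Y"})
diabetesdfs = pd.DataFrame(MinMaxScaler().fit_transform(raw), columns=raw.columns)

# The two inputs with the largest |correlation| with the output.
corr_with_y = diabetesdfs.corr()["Y"].drop("Y")
top_two = corr_with_y.abs().sort_values(ascending=False).index[:2].tolist()
x_name, y_name = top_two

fig, ax = plt.subplots(figsize=(6, 5))
points = ax.scatter(diabetesdfs[x_name], diabetesdfs[y_name],
                    c=diabetesdfs["Y"], cmap="viridis", s=15)
fig.colorbar(points, ax=ax, label="Y (scaled disease progression)")
ax.set_xlabel(f"{x_name} (scaled)")
ax.set_ylabel(f"{y_name} (scaled)")
ax.set_title("Data distribution over the two most correlated inputs")
fig.tight_layout()
plt.show()
```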

Apply Lasso regression to the entire dataset, using all the input variables.

a) Lasso regression involves a regularization parameter, denoted alpha in the scikit-learn ML library. A higher value of alpha (also known as lambda) leads to more regularization, which shrinks the coefficients towards zero and can set some of them exactly to zero, reducing the complexity of the model and keeping only the most important variables.

b) Use the Mean Squared Error (MSE), the average squared difference between the predicted and actual values, to evaluate the model. Lower MSE values indicate better model performance. scikit-learn provides a function for calculating MSE.

c) Compute the MSE of Lasso regression for different values of alpha (… and …).

d) Plot the curve showing how the MSE varies with alpha.

e) Display the best MSE and the corresponding alpha value.

f) Plot the evolution of the Lasso coefficients against alpha to observe how they shrink as alpha varies.
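A sketch of steps (c) to (f). scikit-learn's `Lasso` minimizes `(1 / (2 * n_samples)) * ||y - Xw||^2_2 + alpha * ||w||_1`, so a larger alpha drives more coefficients to exactly zero. Two assumptions: the alpha grid below is hypothetical, because the task's own values are missing from this copy; and the MSE is estimated with 5-fold cross-validation (`cross_val_score` with `scoring="neg_mean_squared_error"`) rather than on the rows the model was fitted on. The reason is that the in-sample MSE, `mean_squared_error(y, model.predict(X))`, does not decrease as alpha grows (up to solver tolerance), so it would always pick the smallest alpha. The coefficient paths come from refits on all rows, and the data is scikit-learn's bundled diabetes copy standing in for `diabetesdfs`.

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.linear_model import Lasso
from sklearn.model_selection import KFold, cross_val_score
from sklearn.preprocessing import MinMaxScaler

# Stand-in for diabetesdfs (bundled diabetes data, assumed to match the CSV).
raw = load_diabetes(as_frame=True).frame.rename(columns={"target": "Y"})
diabetesdfs = pd.DataFrame(MinMaxScaler().fit_transform(raw), columns=raw.columns)
X = diabetesdfs.iloc[:, :-1]   # all ten inputs
y = diabetesdfs.iloc[:, -1]    # output Y

# Hypothetical log-spaced alpha grid; replace with the assignment's values.
alphas = np.logspace(-4, 0, 30)
cv = KFold(n_splits=5, shuffle=True, random_state=0)

mse_values, coefs = [], []
for alpha in alphas:
    model = Lasso(alpha=alpha, max_iter=10_000)
    # (c) cross_val_score returns the negated MSE of each fold: flip the sign, average.
    scores = cross_val_score(model, X, y, cv=cv, scoring="neg_mean_squared_error")
    mse_values.append(-scores.mean())
    # Refit on every row to record the coefficients for the shrinkage plot.
    coefs.append(model.fit(X, y).coef_)
mse_values = np.array(mse_values)
coefs = np.array(coefs)  # shape (n_alphas, n_inputs)

# (e) Best (lowest) cross-validated MSE and its alpha.
best = int(np.argmin(mse_values))
print(f"Best CV MSE = {mse_values[best]:.5f} at alpha = {alphas[best]:.5g}")

fig, (ax_mse, ax_coef) = plt.subplots(1, 2, figsize=(12, 4))
# (d) MSE as a function of alpha.
ax_mse.semilogx(alphas, mse_values, marker="o")
ax_mse.axvline(alphas[best], color="grey", linestyle="--")
ax_mse.set(xlabel="alpha", ylabel="5-fold CV MSE", title="MSE vs alpha")
# (f) Coefficient paths: each line is one input's coefficient as alpha grows.
for j, name in enumerate(X.columns):
    ax_coef.semilogx(alphas, coefs[:, j], label=name)
ax_coef.axhline(0, color="black", linewidth=0.5)
ax_coef.set(xlabel="alpha", ylabel="coefficient", title="Lasso coefficient paths")
ax_coef.legend(fontsize=7, ncol=2)
fig.tight_layout()
plt.show()
```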

Reduce the data dimensionality using PCA (Principal Component Analysis).

a) Use PC1 and PC2 to visualize the data as a scatter plot.

b) Plot the loadings to examine how the variables contribute to PC1 and PC2.

c) Perform ordinary linear regression using PC1 only.

d) Plot the regression line on the scatter plot.

e) Perform ordinary linear regression using PC1 and PC2.

f) Plot the regression plane on the scatter plot.

g) Using a bar chart, calculate and display the MSE for both cases (c and e).
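A PCA sketch covering (a) to (g), on scikit-learn's bundled diabetes data as a stand-in for `diabetesdfs` (an assumption). Further assumptions: PCA is fitted on the ten inputs only, so `Y` stays the regression target; the MSE is computed on the same rows the regression was fitted on, since the task does not mention a train/test split; and the regression "hyperline" for two predictors is drawn as a plane in a 3D plot.

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.decomposition import PCA
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.preprocessing import MinMaxScaler

# Stand-in for diabetesdfs (bundled diabetes data, assumed to match the CSV).
raw = load_diabetes(as_frame=True).frame.rename(columns={"target": "Y"})
diabetesdfs = pd.DataFrame(MinMaxScaler().fit_transform(raw), columns=raw.columns)
X = diabetesdfs.iloc[:, :-1]            # ten inputs only; Y is not fed to PCA
y = diabetesdfs.iloc[:, -1].to_numpy()

# (a) Project the inputs onto the first two principal components.
pca = PCA(n_components=2)
pcs = pca.fit_transform(X)

# (b) Loadings: row k of pca.components_ holds the weight of each input in PC(k+1).
loadings = pd.DataFrame(pca.components_.T, index=X.columns, columns=["PC1", "PC2"])

# (c) OLS on PC1 only; (e) OLS on PC1 and PC2. MSE is computed in-sample.
reg1 = LinearRegression().fit(pcs[:, :1], y)
reg2 = LinearRegression().fit(pcs, y)
mse = pd.Series({
    "PC1": mean_squared_error(y, reg1.predict(pcs[:, :1])),
    "PC1 + PC2": mean_squared_error(y, reg2.predict(pcs)),
})
print(pca.explained_variance_ratio_, mse, sep="\n")

fig = plt.figure(figsize=(15, 9))
ax = fig.add_subplot(2, 3, 1)
pts = ax.scatter(pcs[:, 0], pcs[:, 1], c=y, s=12)
fig.colorbar(pts, ax=ax, label="Y (scaled)")
ax.set(xlabel="PC1", ylabel="PC2", title="(a) Data in PC1-PC2 space")

loadings.plot(kind="bar", ax=fig.add_subplot(2, 3, 2), title="(b) Loadings")

# (d) Regression line of Y on PC1 over the PC1-Y scatter.
ax = fig.add_subplot(2, 3, 3)
line_x = np.linspace(pcs[:, 0].min(), pcs[:, 0].max(), 100).reshape(-1, 1)
ax.scatter(pcs[:, 0], y, s=12)
ax.plot(line_x, reg1.predict(line_x), color="red")
ax.set(xlabel="PC1", ylabel="Y (scaled)", title="(d) Y ~ PC1")

# (f) Regression plane of Y on PC1 and PC2 over a 3D scatter.
ax = fig.add_subplot(2, 3, 4, projection="3d")
g1, g2 = np.meshgrid(np.linspace(pcs[:, 0].min(), pcs[:, 0].max(), 20),
                     np.linspace(pcs[:, 1].min(), pcs[:, 1].max(), 20))
plane = reg2.predict(np.column_stack([g1.ravel(), g2.ravel()])).reshape(g1.shape)
ax.scatter(pcs[:, 0], pcs[:, 1], y, s=8)
ax.plot_surface(g1, g2, plane, color="red", alpha=0.4)
ax.set(xlabel="PC1", ylabel="PC2", zlabel="Y (scaled)", title="(f) Y ~ PC1 + PC2")

# (g) Bar chart of the two MSE values.
mse.plot(kind="bar", ax=fig.add_subplot(2, 3, 5), title="(g) In-sample MSE", rot=0)
fig.tight_layout()
plt.show()
```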

Reduce the data dimensionality with t-SNE.

a) Use the 1st and 2nd t-SNE dimensions to visualize the data as a scatter plot, with different perplexity values (… and …).

b) Perform ordinary linear regression using only the 1st t-SNE dimension.

c) Plot the regression line on the scatter plot.

d) Perform ordinary linear regression using the 1st and 2nd t-SNE dimensions.

e) Plot the regression plane on the scatter plot.

f) Using a bar chart, calculate and display the MSE for both cases (b and d).
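A t-SNE sketch for (a) to (f), on scikit-learn's bundled diabetes data as a stand-in for `diabetesdfs` (an assumption). The perplexities 5, 30 and 50 are hypothetical placeholders for the task's missing values, and using the perplexity-30 embedding for the regressions is an arbitrary choice. scikit-learn's `TSNE` offers only `fit_transform`, with no `transform` for new points, so these in-sample regressions describe the embedded sample only, and the results change with `random_state`.

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression
from sklearn.manifold import TSNE
from sklearn.metrics import mean_squared_error
from sklearn.preprocessing import MinMaxScaler

# Stand-in for diabetesdfs (bundled diabetes data, assumed to match the CSV).
raw = load_diabetes(as_frame=True).frame.rename(columns={"target": "Y"})
diabetesdfs = pd.DataFrame(MinMaxScaler().fit_transform(raw), columns=raw.columns)
X = diabetesdfs.iloc[:, :-1].to_numpy()
y = diabetesdfs.iloc[:, -1].to_numpy()

# (a) Hypothetical perplexity values; substitute the ones your assignment lists.
perplexities = [5, 30, 50]
embeddings = {p: TSNE(n_components=2, perplexity=p, random_state=0).fit_transform(X)
              for p in perplexities}
fig, axes = plt.subplots(1, len(perplexities), figsize=(5 * len(perplexities), 4))
for ax, p in zip(axes, perplexities):
    ax.scatter(embeddings[p][:, 0], embeddings[p][:, 1], c=y, s=12)
    ax.set(xlabel="t-SNE 1", ylabel="t-SNE 2", title=f"perplexity = {p}")
fig.tight_layout()

# (b) and (d) on one embedding; perplexity 30 is an arbitrary choice here.
emb = embeddings[30]
reg1 = LinearRegression().fit(emb[:, :1], y)   # (b) 1st dimension only
reg2 = LinearRegression().fit(emb, y)          # (d) 1st and 2nd dimensions
mse = pd.Series({
    "t-SNE 1": mean_squared_error(y, reg1.predict(emb[:, :1])),
    "t-SNE 1 + 2": mean_squared_error(y, reg2.predict(emb)),
})
print(mse)

fig = plt.figure(figsize=(15, 4))
ax = fig.add_subplot(1, 3, 1)                  # (c) regression line
line_x = np.linspace(emb[:, 0].min(), emb[:, 0].max(), 100).reshape(-1, 1)
ax.scatter(emb[:, 0], y, s=12)
ax.plot(line_x, reg1.predict(line_x), color="red")
ax.set(xlabel="t-SNE 1", ylabel="Y (scaled)", title="(c) Y ~ t-SNE 1")
ax = fig.add_subplot(1, 3, 2, projection="3d")  # (e) regression plane
g1, g2 = np.meshgrid(np.linspace(emb[:, 0].min(), emb[:, 0].max(), 20),
                     np.linspace(emb[:, 1].min(), emb[:, 1].max(), 20))
plane = reg2.predict(np.column_stack([g1.ravel(), g2.ravel()])).reshape(g1.shape)
ax.scatter(emb[:, 0], emb[:, 1], y, s=8)
ax.plot_surface(g1, g2, plane, color="red", alpha=0.4)
ax.set(xlabel="t-SNE 1", ylabel="t-SNE 2", zlabel="Y (scaled)", title="(e) Y ~ t-SNE 1 + 2")
mse.plot(kind="bar", ax=fig.add_subplot(1, 3, 3), title="(f) In-sample MSE", rot=0)
fig.tight_layout()
plt.show()
```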

Reduce the data dimensionality with UMAP.

a) Use the 1st and 2nd UMAP dimensions to visualize the data as a scatter plot, with different values of n_neighbors, the number of neighbors (… and …).

b) Perform ordinary linear regression using only the 1st UMAP dimension.

c) Plot the regression line on the scatter plot.

d) Perform ordinary linear regression using the 1st and 2nd UMAP dimensions.

e) Plot the regression plane on the scatter plot.

f) Using a bar chart, calculate and display the MSE for both cases (b and d).

g) Provide a comparative table of linear regression applied to the PCA, t-SNE, and UMAP data, using the first three dimensions of each dimensionality reduction method.
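A sketch for the UMAP steps and the comparative table (g), on scikit-learn's bundled diabetes data as a stand-in for `diabetesdfs` (an assumption). It assumes the `umap-learn` package, imported as `umap`; if that package is missing, the block skips the UMAP parts and still builds the PCA and t-SNE rows of the table. The `n_neighbors` values 5, 15 and 50 are hypothetical placeholders, and using n_neighbors=15 (umap-learn's default) and perplexity=30 (scikit-learn's default) for the table are arbitrary choices. For (g), each method produces a three-component embedding and `Y` is regressed on its first 1, 2 and 3 columns, with in-sample MSE. Unlike PCA, the first two columns of a 3D t-SNE or UMAP embedding are not the same as a separate 2D embedding of the same data.

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.decomposition import PCA
from sklearn.linear_model import LinearRegression
from sklearn.manifold import TSNE
from sklearn.metrics import mean_squared_error
from sklearn.preprocessing import MinMaxScaler

try:
    import umap  # provided by the umap-learn package
except ImportError:  # the PCA and t-SNE rows of the table still work without it
    umap = None

# Stand-in for diabetesdfs (bundled diabetes data, assumed to match the CSV).
raw = load_diabetes(as_frame=True).frame.rename(columns={"target": "Y"})
diabetesdfs = pd.DataFrame(MinMaxScaler().fit_transform(raw), columns=raw.columns)
X = diabetesdfs.iloc[:, :-1].to_numpy()
y = diabetesdfs.iloc[:, -1].to_numpy()


def regression_panels(emb, y, name):
    """(b)-(f): OLS on dim 1 and on dims 1-2, line and plane plots, MSE bars."""
    reg1 = LinearRegression().fit(emb[:, :1], y)
    reg2 = LinearRegression().fit(emb[:, :2], y)
    mse = pd.Series({
        f"{name} 1": mean_squared_error(y, reg1.predict(emb[:, :1])),
        f"{name} 1 + 2": mean_squared_error(y, reg2.predict(emb[:, :2])),
    })
    fig = plt.figure(figsize=(15, 4))
    ax = fig.add_subplot(1, 3, 1)
    xs = np.linspace(emb[:, 0].min(), emb[:, 0].max(), 100).reshape(-1, 1)
    ax.scatter(emb[:, 0], y, s=12)
    ax.plot(xs, reg1.predict(xs), color="red")
    ax.set(xlabel=f"{name} 1", ylabel="Y (scaled)", title="Regression line")
    ax = fig.add_subplot(1, 3, 2, projection="3d")
    g1, g2 = np.meshgrid(np.linspace(emb[:, 0].min(), emb[:, 0].max(), 20),
                         np.linspace(emb[:, 1].min(), emb[:, 1].max(), 20))
    plane = reg2.predict(np.column_stack([g1.ravel(), g2.ravel()])).reshape(g1.shape)
    ax.scatter(emb[:, 0], emb[:, 1], y, s=8)
    ax.plot_surface(g1, g2, plane, color="red", alpha=0.4)
    ax.set(xlabel=f"{name} 1", ylabel=f"{name} 2", zlabel="Y (scaled)", title="Regression plane")
    mse.plot(kind="bar", ax=fig.add_subplot(1, 3, 3), title="In-sample MSE", rot=0)
    fig.tight_layout()
    return mse


def mse_by_dims(emb, y, dims=(1, 2, 3)):
    """In-sample MSE of linear regression on the first k columns of an embedding."""
    scores = {}
    for k in dims:
        Z = emb[:, :k]
        scores[f"{k} dim" if k == 1 else f"{k} dims"] = mean_squared_error(
            y, LinearRegression().fit(Z, y).predict(Z))
    return scores


if umap is not None:
    # (a) Hypothetical n_neighbors values; substitute the assignment's own.
    neighbors = [5, 15, 50]
    fig, axes = plt.subplots(1, len(neighbors), figsize=(5 * len(neighbors), 4))
    for ax, k in zip(axes, neighbors):
        emb2 = umap.UMAP(n_neighbors=k, n_components=2, random_state=0).fit_transform(X)
        ax.scatter(emb2[:, 0], emb2[:, 1], c=y, s=12)
        ax.set(xlabel="UMAP 1", ylabel="UMAP 2", title=f"n_neighbors = {k}")
    fig.tight_layout()
    # (b)-(f) on a 2-D UMAP embedding with n_neighbors=15 (an arbitrary choice).
    emb_umap = umap.UMAP(n_neighbors=15, n_components=2, random_state=0).fit_transform(X)
    print(regression_panels(emb_umap, y, "UMAP"))
else:
    print("umap-learn is not installed; skipping the UMAP panels")

# (g) Three-component embedding per method; regress Y on its first 1, 2 and 3 columns.
embeddings = {
    "PCA": PCA(n_components=3).fit_transform(X),
    "t-SNE": TSNE(n_components=3, perplexity=30, random_state=0).fit_transform(X),
}
if umap is not None:
    embeddings["UMAP"] = umap.UMAP(n_neighbors=15, n_components=3, random_state=0).fit_transform(X)

table = pd.DataFrame({name: mse_by_dims(emb, y) for name, emb in embeddings.items()}).T
print(table.round(5))
plt.show()
```

Reading the table: within a row, a drop in MSE shows that the extra dimension carries information the linear model can use; down a column, lower values indicate which embedding preserves more linearly usable information about Y.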
