Question: I need help coding this kernel SVM in Python for ML

I need help coding this kernel SVM in python for ML. Chegg answers aren't working:

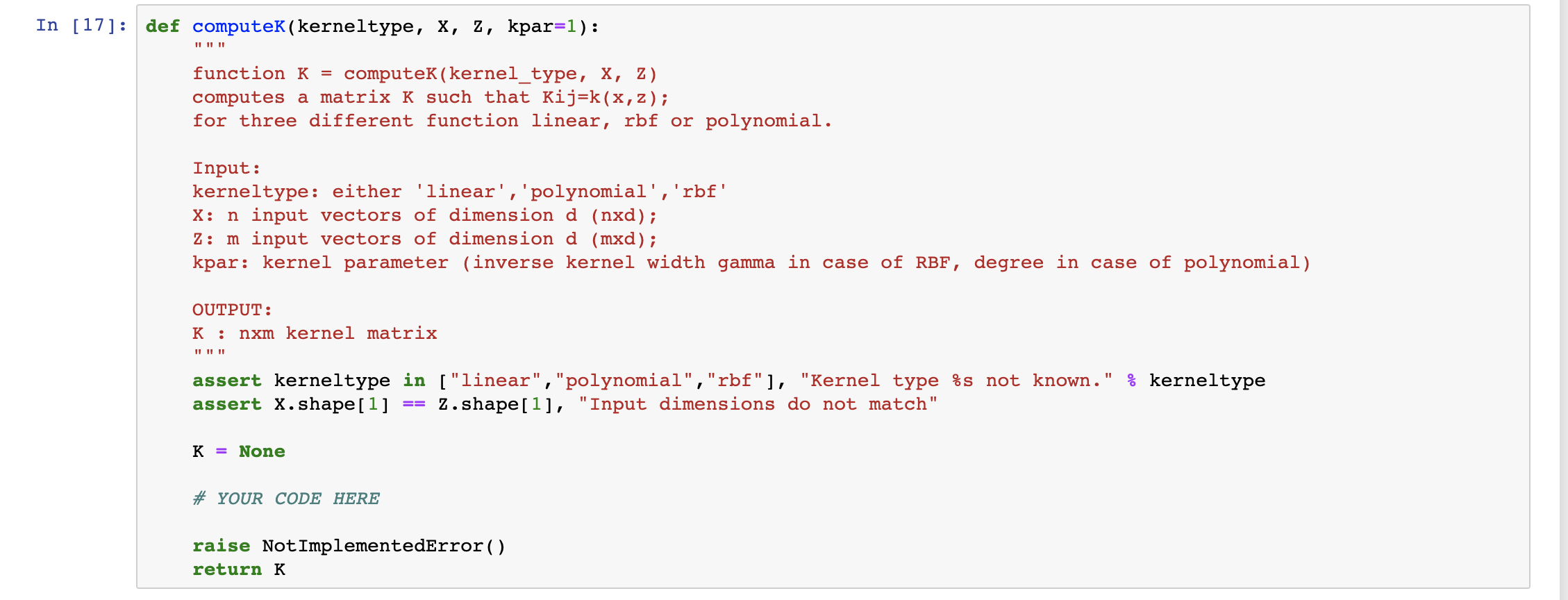

    def computeK(kerneltype, X, Z, kpar=1):
        """
        function K = computeK(kernel_type, X, Z)
        computes a matrix K such that Kij=k(x,z);
        for three different function linear, rbf or polynomial.

        Input:
        kerneltype: either 'linear','polynomial','rbf'
        X: n input vectors of dimension d (nxd);
        Z: m input vectors of dimension d (mxd);
        kpar: kernel parameter (inverse kernel width gamma in case of RBF, degree in case of polynomial)

        OUTPUT:
        K : nxm kernel matrix
        """
        assert kerneltype in ["linear","polynomial","rbf"], "Kernel type %s not known." % kerneltype
        assert X.shape[1] == Z.shape[1], "Input dimensions do not match"

        K = None

        # YOUR CODE HERE
        if kerneltype == "linear":
            K = X.T @ Z
        elif kerneltype == "polynomial":
            K = ((X.T @ Z) + 1)**(kpar)
        elif kerneltype == "rbf":
            K = np.exp(np.dot(-1, np.dot(l2distance(X, Z), kpar)))
        return K
        raise NotImplementedError()
        return K


I get errors on tests 1, 4, 5, and 6 with the code above. Please help:

    # These tests test whether your computeK() is implemented correctly

    xTr_test, yTr_test = generate_data(100)
    xTr_test2, yTr_test2 = generate_data(50)
    n, d = xTr_test.shape

    # Checks whether computeK compute the kernel matrix with the right dimension
    def computeK_test1():
        s1 = (computeK('rbf', xTr_test, xTr_test2, kpar=1).shape == (100, 50))
        s2 = (computeK('polynomial', xTr_test, xTr_test2, kpar=1).shape == (100, 50))
        s3 = (computeK('linear', xTr_test, xTr_test2, kpar=1).shape == (100, 50))
        return (s1 and s2 and s3)

    # Checks whether the kernel matrix is symmetric
    def computeK_test2():
        k_rbf = computeK('rbf', xTr_test, xTr_test, kpar=1)
        s1 = np.allclose(k_rbf, k_rbf.T)
        k_poly = computeK('polynomial', xTr_test, xTr_test, kpar=1)
        s2 = np.allclose(k_poly, k_poly.T)
        k_linear = computeK('linear', xTr_test, xTr_test, kpar=1)
        s3 = np.allclose(k_linear, k_linear.T)
        return (s1 and s2 and s3)

    # Checks whether the kernel matrix is positive semi-definite
    def computeK_test3():
        k_rbf = computeK('rbf', xTr_test2, xTr_test2, kpar=1)
        eigen_rbf = np.linalg.eigvals(k_rbf)
        eigen_rbf[np.isclose(eigen_rbf, 0)] = 0
        s1 = np.all(eigen_rbf >= 0)
        k_poly = computeK('polynomial', xTr_test2, xTr_test2, kpar=1)
        eigen_poly = np.linalg.eigvals(k_poly)
        eigen_poly[np.isclose(eigen_poly, 0)] = 0
        s2 = np.all(eigen_poly >= 0)
        k_linear = computeK('linear', xTr_test2, xTr_test2, kpar=1)
        eigen_linear = np.linalg.eigvals(k_linear)
        eigen_linear[np.isclose(eigen_linear, 0)] = 0
        s3 = np.all(eigen_linear >= 0)
        return (s1 and s2 and s3)

    # Checks whether computeK compute the right kernel matrix with rbf kernel
    def computeK_test4():
        k = computeK('rbf', xTr_test, xTr_test2, kpar=1)
        k2 = computeK_grader('rbf', xTr_test, xTr_test2, kpar=1)
        return np.linalg.norm(k - k2)

    # Checks whether computeK compute the right kernel matrix with polynomial kernel
    def computeK_test5():
        k = computeK('polynomial', xTr_test, xTr_test2, kpar=1)
        k2 = computeK_grader('polynomial', xTr_test, xTr_test2, kpar=1)
        return np.linalg.norm(k - k2)

    # Checks whether computeK compute the right kernel matrix with linear kernel
    def computeK_test6():
        k = computeK('linear', xTr_test, xTr_test2, kpar=1)
        k2 = computeK_grader('linear', xTr_test, xTr_test2, kpar=1)
        return np.linalg.norm(k - k2)

    runtest(computeK_test1, 'computeK_test1')
    runtest(computeK_test2, 'computeK_test2')
    runtest(computeK_test3, 'computeK_test3')
    runtest(computeK_test4, 'computeK_test4')
    runtest(computeK_test5, 'computeK_test5')
    runtest(computeK_test6, 'computeK_test6')
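A likely reason tests 1, 4, 5, and 6 fail while tests 2 and 3 pass is the transpose in the posted code. With X of shape (n, d) and Z of shape (m, d), `X.T @ Z` multiplies a (d, n) matrix by an (m, d) matrix, which is only defined when n equals m. The sketch below illustrates this with random stand-in data; `generate_data` is not shown in the question, so the arrays and the dimension d = 2 are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-ins for xTr_test and xTr_test2; d = 2 is an assumption.
X = rng.standard_normal((100, 2))
Z = rng.standard_normal((50, 2))

# X.T @ Z is (d, n) @ (m, d): the inner dimensions 100 and 50 do not match.
try:
    X.T @ Z
    transposed_product_failed = False
except ValueError:
    transposed_product_failed = True

# When both arguments are the same matrix (tests 2 and 3), X.T @ X is a
# valid (d, d) matrix that is symmetric and PSD, so those tests can pass
# even though the result is not the (n, n) kernel matrix.
wrong_shape = (X.T @ X).shape

# X @ Z.T is (n, d) @ (d, m), which has the expected (n, m) shape.
right_shape = (X @ Z.T).shape

print(transposed_product_failed, wrong_shape, right_shape)
```

This suggests replacing `X.T @ Z` with `X @ Z.T` in the linear and polynomial branches. Test 4 may additionally depend on whether `l2distance` returns squared or unsquared distances, which the question does not show.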

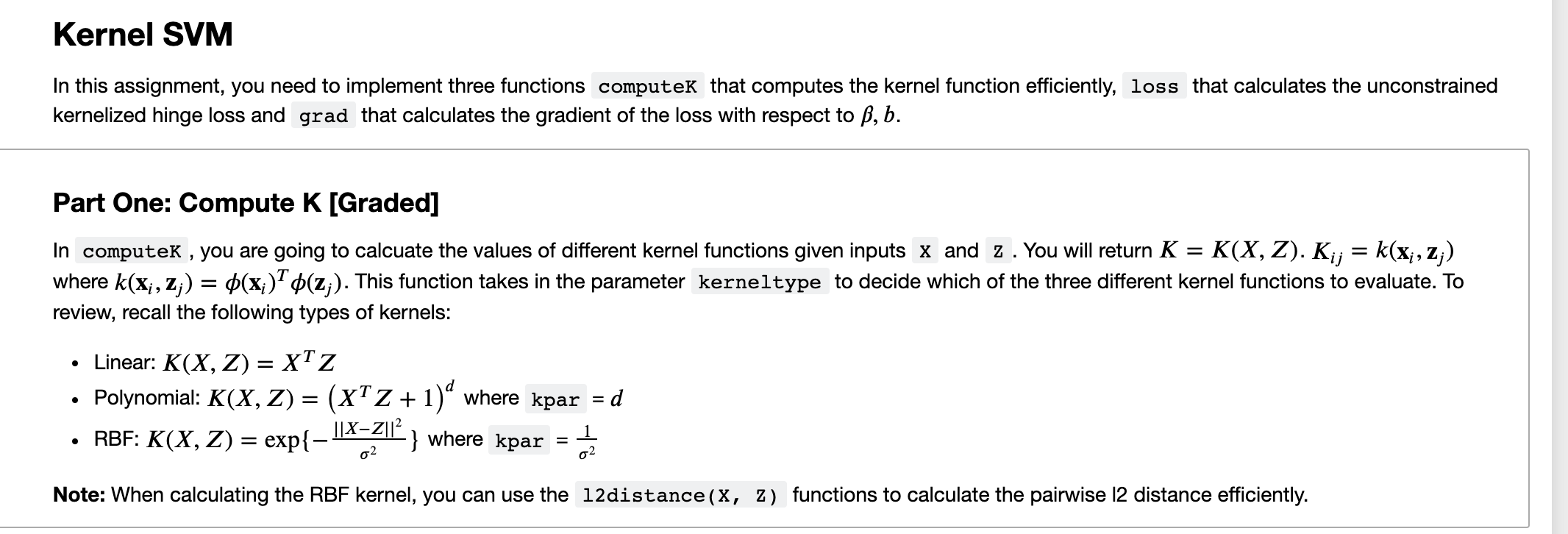

Kernel SVM

In this assignment, you need to implement three functions: computeK, which computes the kernel function efficiently; loss, which calculates the unconstrained kernelized hinge loss; and grad, which calculates the gradient of the loss with respect to $\beta$, $b$.

Part One: Compute K [Graded]

In computeK, you are going to calculate the values of different kernel functions given inputs X and Z. You will return $K = K(X, Z)$, where $K_{ij} = k(x_i, z_j)$ and $k(x_i, z_j) = \phi(x_i)^\top \phi(z_j)$. This function takes in the parameter kerneltype to decide which of the three different kernel functions to evaluate. To review, recall the following types of kernels:

Linear: $K(X, Z) = X^\top Z$

Polynomial: $K(X, Z) = (X^\top Z + 1)^d$, where $d$ = kpar

RBF: $K(X, Z) = \exp\left\{-\frac{\|X - Z\|^2}{\sigma^2}\right\}$, where $\frac{1}{\sigma^2}$ = kpar

Note: When calculating the RBF kernel, you can use the l2distance(X, Z) function to calculate the pairwise l2 distance efficiently.

    def computeK(kerneltype, X, Z, kpar=1):
        """
        function K = computeK(kernel_type, X, Z)
        computes a matrix K such that Kij=k(x,z);
        for three different function linear, rbf or polynomial.

        Input:
        kerneltype: either 'linear','polynomial','rbf'
        X: n input vectors of dimension d (nxd);
        Z: m input vectors of dimension d (mxd);
        kpar: kernel parameter (inverse kernel width gamma in case of RBF, degree in case of polynomial)

        OUTPUT:
        K : nxm kernel matrix
        """
        assert kerneltype in ["linear", "polynomial", "rbf"], "Kernel type %s not known." % kerneltype
        assert X.shape[1] == Z.shape[1], "Input dimensions do not match"

        K = None

        # YOUR CODE HERE
        raise NotImplementedError()

        return K
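The three kernel definitions in the assignment text can be sketched as follows. This is a minimal sketch under stated assumptions, not the grader's reference implementation. The `l2distance` defined here is a hypothetical stand-in for the course helper, whose source is not shown; it is assumed to return unsquared Euclidean distances, which is why the RBF branch squares the result. Since the rows of X and Z are the input vectors, the inner products between all pairs are computed as `X @ Z.T`.

```python
import numpy as np


def l2distance(X, Z):
    """Hypothetical stand-in for the course helper (an assumption):
    pairwise Euclidean distances, not squared, between the rows of
    X (n x d) and Z (m x d). Returns an n x m matrix."""
    sq = (
        np.sum(X**2, axis=1)[:, None]
        + np.sum(Z**2, axis=1)[None, :]
        - 2 * X @ Z.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    return np.sqrt(np.maximum(sq, 0))


def computeK(kerneltype, X, Z, kpar=1):
    assert kerneltype in ["linear", "polynomial", "rbf"], \
        "Kernel type %s not known." % kerneltype
    assert X.shape[1] == Z.shape[1], "Input dimensions do not match"

    if kerneltype == "linear":
        # (n, d) @ (d, m) gives the n x m matrix of inner products.
        K = X @ Z.T
    elif kerneltype == "polynomial":
        # kpar is the polynomial degree.
        K = (X @ Z.T + 1) ** kpar
    else:  # "rbf"
        # kpar is the inverse kernel width; the distance is squared here
        # on the assumption that l2distance returns unsquared distances.
        K = np.exp(-kpar * l2distance(X, Z) ** 2)
    return K


# Usage with random stand-in data (the real generate_data is not shown).
rng = np.random.default_rng(0)
X_demo = rng.standard_normal((100, 2))
Z_demo = rng.standard_normal((50, 2))
for kt in ["linear", "polynomial", "rbf"]:
    print(kt, computeK(kt, X_demo, Z_demo, kpar=1).shape)
```

If the course's `l2distance` already returns squared distances, the `** 2` in the RBF branch should be dropped.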
