Question: Implement the ID3 decision tree learning algorithm that we discussed in class. To simplify the implementation, your system only needs to handle binary classification tasks

Implement the ID3 decision tree learning algorithm that we discussed in class. To simplify the implementation, your system only needs to handle binary classification tasks (i.e. each instance will have a class value of 0 or 1). In addition, you may assume that all attributes are binary-valued (i.e. the only possible attribute values are 0 and 1) and that there are no missing values in the training or test data. Some sample training files (train.dat, train2.dat) and test files (test.dat, test2.dat) are available from the assignment page of the course website. In these files, only lines containing non-space characters are relevant. The first relevant line holds the attribute names. Each following relevant line defines a single example. Each column holds an examples value for the attribute named at the head of the column. The last column (labeled class) holds the class label for the examples. In all of the following experiments, you should use this last class attribute to train the tree and to determine whether a tree classifies an example correctly. When building a decision tree, if you reach a leaf node but still have examples that belong to different classes (i.e., the node is not pure), then choose the most frequent class (among the instances at the leaf node). If you reach a leaf node in the decision tree and have no examples left or the examples are equally split among multiple classes, then choose the class that is most frequent in the entire training set. Do not implement pruning. A word on tie breaking: when choosing attributes using information again, if two or more attributes achieve the highest information gain value, break ties by choosing the earliest one in the list of attributes (assuming that the attributes in the first line of a training file are read in a left-to-right manner).

Your program should be able to handle any binary classification task with any number of binary-valued attributes. Consequently, both the number and names of the attributes, as well as the number of training and test instances, should be determined at runtime. In other words, these values should not be hard-coded in your program.

Your program should allow only three arguments to be specified in the command line in- vocation of your program: a training file, a test file, and the maximum number of training instances to consider. There should be no graphical user interface (GUI) of any kind. Any program that does not conform to the above specification will receive no credit.

Use logarithm base 2 when computing entropy and define 0 log2 0 to be 0.

In the input files, only lines containing non-space characters are relevant, as mentioned pre- viously. In particular, empty lines may appear anywhere in an input file, including the be- ginning and the end of the file. Care should be taken to skip over these empty lines.

Input files may be specified with absolute or relative addresses.

Your compiled code should be invocated as either:

python main.py

Where your decision tree will be built with all instances found in the specified training file if maxTrainingInstances 0.

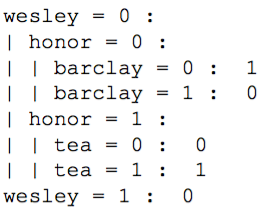

Build a decision tree using the training instances and print to stdout the tree in the same format as the example tree shown below.

According to this tree, if wesley = 0 and honor = 0 and barclay = 0, then the class value of the corresponding instance should be 1. In other words, the value appearing before a colon is an attribute value, and the value appearing after a colon is a class value.

Use the learned decision tree to classify the training instances. Print to stdout the accuracy of the tree. (In this case, the tree has been trained and tested on the same data set.) The accuracy should be computed as the percentage of examples that were correctly classified. For example, if 86 of 90 examples are classified correctly, then the accuracy of the decision tree would be 95.6%. (Note that the accuracy on the training instances will be 100% if and only if the training instances are consistent.)

Accuracy on training set (90 instances): 95.6%

Use the learned decision tree to classify the test instances. Print to stdout the accuracy of

the tree. (In this case, the decision tree has been trained and tested on different data sets.)

Accuracy on test set (10 instances): 60.0%

Now, we want to investigate how the amount of training data affects the accuracy of the resulting decision tree. Plot a learning curve (i.e., a graph of the accuracy of your algorithm on the test set against different training set sizes) by re-training your learning algorithm on train.dat using training set sizes of 100, 200, 300, . . ., 800. Briefly comment on the shape of the curve. Does it exhibit the usual properties of a learning curve? (We suggest that you plot the graph using Excel, but if you choose to draw the graph by hand, you need to scan it so that you can submit it online. We will not accept hardcopy submissions.)

wesley- 0: honor0 barclay = 1 : I honor = 1 tea = 0 1 1 tea = 1 Wesley = wesley- 0: honor0 barclay = 1 : I honor = 1 tea = 0 1 1 tea = 1 Wesley =

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts