Question: In class, we introduced the weight decay regularizer, which adds a loss term $\lambda \|w\|_2^2$, where $w$ is a vector comprising all of the parameters of

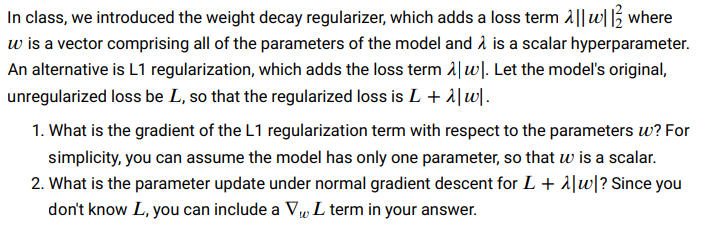

In class, we introduced the weight decay regularizer, which adds a loss term $\lambda \|w\|_2^2$, where $w$ is a vector comprising all of the parameters of the model and $\lambda$ is a scalar hyperparameter. An alternative is L1 regularization, which adds the loss term $\lambda \|w\|_1$. Let the model's original, unregularized loss be $L$, so that the regularized loss is $L + \lambda \|w\|_1$.

1. What is the gradient of the L1 regularization term with respect to the parameters $w$? For simplicity, you can assume the model has only one parameter, so that $w$ is a scalar.
2. What is the parameter update under normal gradient descent for $L + \lambda \|w\|_1$? Since you don't know $L$, you can include a $\nabla_w L$ term in your
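
As a rough illustration of the two parts of the question, here is a minimal NumPy sketch. It assumes the regularization term is the scalar hyperparameter times the sum of absolute parameter values, so its derivative with respect to each parameter is the hyperparameter times the sign of that parameter (taking 0 at exactly zero, where the absolute value is not differentiable). The names `lam`, `lr`, `grad_L`, `l1_subgradient`, and `gd_step` are hypothetical and chosen only for this example; `grad_L` stands in for the unknown gradient of the unregularized loss.

```python
import numpy as np

def l1_subgradient(w, lam):
    """Derivative of lam * |w| per parameter: lam * sign(w), with 0 used at w == 0."""
    return lam * np.sign(w)

def gd_step(w, grad_L, lam, lr):
    """One plain gradient-descent step on L + lam * ||w||_1 (lr is an assumed learning rate)."""
    return w - lr * (grad_L + l1_subgradient(w, lam))

# Assumed toy values: set the unregularized gradient to zero to isolate the regularizer.
w = np.array([0.5, -2.0, 0.0])
grad_L = np.zeros_like(w)
w_new = gd_step(w, grad_L, lam=0.1, lr=1.0)
print(w_new)
```

With the unregularized gradient zeroed out, every nonzero parameter moves toward zero by the same fixed amount `lr * lam`, regardless of its magnitude. Under weight decay, by contrast, the shrinkage is proportional to the parameter's value.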
