Question: In lecture we saw a method for language modeling called linear interpolation, where the trigram estimate $q(w_i \mid w_{i-2}, w_{i-1})$ is defined as a weighted combination of trigram, bigram, and unigram estimates.

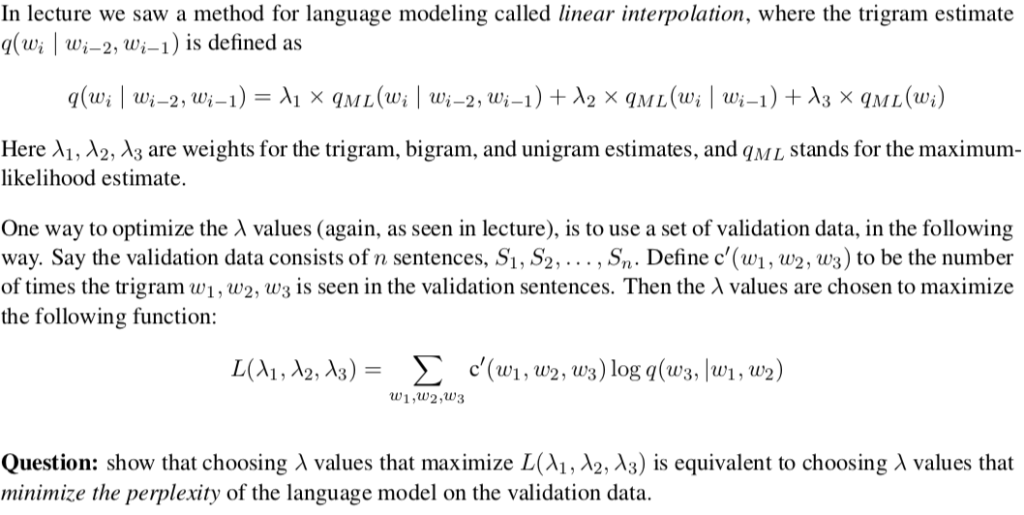

In lecture we saw a method for language modeling called linear interpolation, where the trigram estimate $q(w_i \mid w_{i-2}, w_{i-1})$ is defined as

$$q(w_i \mid w_{i-2}, w_{i-1}) = \lambda_1 \, q_{ML}(w_i \mid w_{i-2}, w_{i-1}) + \lambda_2 \, q_{ML}(w_i \mid w_{i-1}) + \lambda_3 \, q_{ML}(w_i)$$

Here $\lambda_1, \lambda_2, \lambda_3$ are weights for the trigram, bigram, and unigram estimates, and $q_{ML}$ stands for the maximum-likelihood estimate.

One way to optimize the $\lambda$ values (again, as seen in lecture) is to use a set of validation data, in the following way. Say the validation data consists of $n$ sentences, $S_1, S_2, \ldots, S_n$. Define $c'(w_1, w_2, w_3)$ to be the number of times the trigram $w_1, w_2, w_3$ is seen in the validation sentences. Then the $\lambda$ values are chosen to maximize the following function:

$$L(\lambda_1, \lambda_2, \lambda_3) = \sum_{w_1, w_2, w_3} c'(w_1, w_2, w_3) \log q(w_3 \mid w_1, w_2)$$

Question: show that choosing $\lambda$ values that maximize $L(\lambda_1, \lambda_2, \lambda_3)$ is equivalent to choosing $\lambda$ values that minimize the perplexity of the language model on the validation data.
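
Before proving the claim, it can help to check it numerically. The sketch below is only an illustration under stated assumptions: the toy training and validation sentences, the function names, and the `*` / `STOP` padding symbols are all made up for this example, and perplexity is assumed to be the usual $2^{-l}$ with $l$ the average base-2 log probability per validation word (counting one STOP per sentence). It computes the interpolated estimate, the objective $L$, and the perplexity for a few candidate weight triples.

```python
import math
from collections import Counter

START, STOP = "*", "STOP"  # padding symbols (an assumed convention)

def trigrams(sentence):
    """Yield one (w1, w2, w3) trigram per word of the sentence, plus one for STOP."""
    words = [START, START] + list(sentence) + [STOP]
    for i in range(2, len(words)):
        yield words[i - 2], words[i - 1], words[i]

def fit_counts(sentences):
    """Collect the counts needed for the trigram, bigram, and unigram ML estimates."""
    counts = {name: Counter() for name in ("tri", "tri_ctx", "bi", "bi_ctx", "uni")}
    total = 0
    for sentence in sentences:
        for w1, w2, w3 in trigrams(sentence):
            counts["tri"][(w1, w2, w3)] += 1
            counts["tri_ctx"][(w1, w2)] += 1
            counts["bi"][(w2, w3)] += 1
            counts["bi_ctx"][w2] += 1
            counts["uni"][w3] += 1
            total += 1
    return counts, total

def ratio(num, den):
    """ML estimate as a count ratio; 0 when the context was never seen."""
    return num / den if den else 0.0

def q(w1, w2, w3, lambdas, counts, total):
    """Linearly interpolated estimate q(w3 | w1, w2)."""
    l1, l2, l3 = lambdas
    return (l1 * ratio(counts["tri"][(w1, w2, w3)], counts["tri_ctx"][(w1, w2)])
            + l2 * ratio(counts["bi"][(w2, w3)], counts["bi_ctx"][w2])
            + l3 * ratio(counts["uni"][w3], total))

def log_likelihood(lambdas, valid, counts, total):
    """L(lambdas) = sum over trigrams of c'(w1, w2, w3) * log2 q(w3 | w1, w2)."""
    c_prime = Counter(t for s in valid for t in trigrams(s))
    return sum(n * math.log2(q(*t, lambdas, counts, total)) for t, n in c_prime.items())

def perplexity(lambdas, valid, counts, total):
    """2 ** (-(1/M) * sum_i log2 p(S_i)), computed sentence by sentence."""
    M = sum(len(s) + 1 for s in valid)  # words plus one STOP per sentence
    log_prob = 0.0
    for s in valid:
        p = 1.0
        for t in trigrams(s):
            p *= q(*t, lambdas, counts, total)
        log_prob += math.log2(p)
    return 2 ** (-log_prob / M)

# Toy data (hypothetical); every validation word also occurs in training,
# so q > 0 whenever the unigram weight is positive.
train = [["the", "dog", "barks"], ["the", "cat", "meows"], ["a", "dog", "meows"]]
valid = [["the", "dog", "meows"], ["a", "cat", "barks"]]
counts, total = fit_counts(train)

candidates = [(0.8, 0.1, 0.1), (0.5, 0.4, 0.1), (0.3, 0.3, 0.4), (0.1, 0.1, 0.8)]
for lam in candidates:
    L = log_likelihood(lam, valid, counts, total)
    pp = perplexity(lam, valid, counts, total)
    print(lam, round(L, 3), round(pp, 3))
```

On this toy data, the candidate with the largest $L$ should also be the one with the smallest perplexity, which is what the question asks to show in general. The perplexity is deliberately computed from per-sentence probabilities rather than from $L$, so the agreement is a real check and not true by construction.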

Step by Step Solution
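
A sketch of the argument, assuming the usual definition of perplexity (the question does not restate it): let $M$ be the total number of words in the validation data, counting one STOP symbol per sentence, and let $p(S_i)$ be the probability the model assigns to sentence $S_i$. Perplexity is then $2^{-l}$ with $l = \frac{1}{M} \sum_{i=1}^{n} \log_2 p(S_i)$. The notation $w^{(i)}_j$ for the $j$-th word of sentence $S_i$ is introduced here for illustration, and $c'$ is assumed to count trigrams that include the start padding and STOP. Logs are taken base 2; another base only rescales $L$ by a positive constant.

$$
\begin{aligned}
\sum_{i=1}^{n} \log_2 p(S_i)
&= \sum_{i=1}^{n} \sum_{j} \log_2 q\big(w^{(i)}_j \mid w^{(i)}_{j-2}, w^{(i)}_{j-1}\big) \\
&= \sum_{w_1, w_2, w_3} c'(w_1, w_2, w_3) \log_2 q(w_3 \mid w_1, w_2) \\
&= L(\lambda_1, \lambda_2, \lambda_3)
\end{aligned}
$$

$$
\text{perplexity} = 2^{-\frac{1}{M} \sum_{i=1}^{n} \log_2 p(S_i)} = 2^{-L(\lambda_1, \lambda_2, \lambda_3)/M}
$$

The first step uses the trigram factorization of $p(S_i)$; the second groups identical trigrams, each of which appears $c'(w_1, w_2, w_3)$ times in the double sum. Since $M$ depends only on the validation data and not on the $\lambda$ values, and $x \mapsto 2^{-x/M}$ is strictly decreasing, a choice of $\lambda_1, \lambda_2, \lambda_3$ maximizes $L$ exactly when it minimizes the perplexity.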
