
In Python Please:

```python
def train_model(loss, training_examples, params, var_x, var_y, delta=0.0001, num_iterations=10000):
    # Start every trainable parameter at 0
    env = {param: 0 for param in params}
    for iteration_idx in range(num_iterations):
        gradient = {param: 0 for param in params}  # Set all gradients to 0
        total_loss = 0.
        # Accumulate the gradient and the loss over all training examples
        for x_val, y_val in training_examples:
            env[var_x] = x_val
            env[var_y] = y_val
            for param in params:
                gradient[param] += loss.derivative(param).eval(env=env)
            total_loss += loss.eval(env=env)
        # Report progress every 100 iterations
        if (iteration_idx + 1) % 100 == 0:
            print("Loss:", total_loss)
            print("Params: ", {param: env[param] for param in params})
        # Gradient-descent step: move each parameter against its summed gradient
        for param in params:
            env[param] = env[param] - (delta * gradient[param])
    return total_loss, {param: env[param] for param in params}
```
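`train_model` is plain batch gradient descent. In each iteration it sums the gradient of the loss over every training example, then moves each parameter by `-delta * gradient`.

The sketch below shows the same loop without the course's expression objects. It assumes the linear model $\hat{y} = ax + b$ with squared-error loss $L = (\hat{y} - y)^2$, so the partial derivatives can be written by hand: $\partial L/\partial a = 2(\hat{y} - y)x$ and $\partial L/\partial b = 2(\hat{y} - y)$. The name `train_linear` is hypothetical. The hand-written derivatives stand in for what `loss.derivative(param)` would compute.

```python
def train_linear(training_examples, delta=0.0001, num_iterations=10000):
    """Hypothetical sketch: batch gradient descent for y_hat = a*x + b.

    Same structure as train_model, but the derivatives of the squared-error
    loss are written out by hand instead of using loss.derivative(param).
    """
    a, b = 0.0, 0.0  # start every parameter at 0, as train_model does
    total_loss = 0.0
    for _ in range(num_iterations):
        grad_a, grad_b = 0.0, 0.0  # reset the gradients each iteration
        total_loss = 0.0
        for x, y in training_examples:
            residual = (a * x + b) - y    # y_hat - y at the current parameters
            grad_a += 2 * residual * x    # dL/da = 2 (y_hat - y) x
            grad_b += 2 * residual        # dL/db = 2 (y_hat - y)
            total_loss += residual * residual
        # Step each parameter against its summed gradient
        a -= delta * grad_a
        b -= delta * grad_b
    return total_loss, {"a": a, "b": b}


if __name__ == "__main__":
    # Toy points lying exactly on y = 2x + 1 (illustrative only)
    points = [(0, 1), (1, 3), (2, 5), (3, 7)]
    loss, learned = train_linear(points, delta=0.01, num_iterations=5000)
    print(loss, learned)  # expect values close to a = 2, b = 1
```

As in `train_model`, the returned loss is the one measured just before the final update. Too large a `delta` makes the updates diverge instead of converge.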

import matplotlib

import matplotlib.pyplot as plt

matplotlib.rcParams['figure.figsize'] = (8.0, 8.0)

matplotlib_params = {'legend.fontsize': 'large',

'axes.labelsize': 'large',

'axes.titlesize':'large',

'xtick.labelsize':'large',

'ytick.labelsize':'large'}

matplotlib.rcParams.update(matplotlib_params)

import numpy as np

def plot_points_and_model(points, var_x, model, params, xlabel='x', ylabel='y'):

env = params.copy()

fig, ax = plt.subplots()

xs, ys = zip(*points)

ax.plot(xs, ys, 'r+')

x_min, x_max = np.min(xs), np.max(xs)

step = (x_max - x_min) / 100

x_list = list(np.arange(x_min, x_max + step, step))

y_list = []

for x in x_list:

env[var_x] = x

y_list.append(model.eval(env=env))

ax.plot(x_list, y_list)

plt.xlabel(xlabel)

plt.ylabel(ylabel)

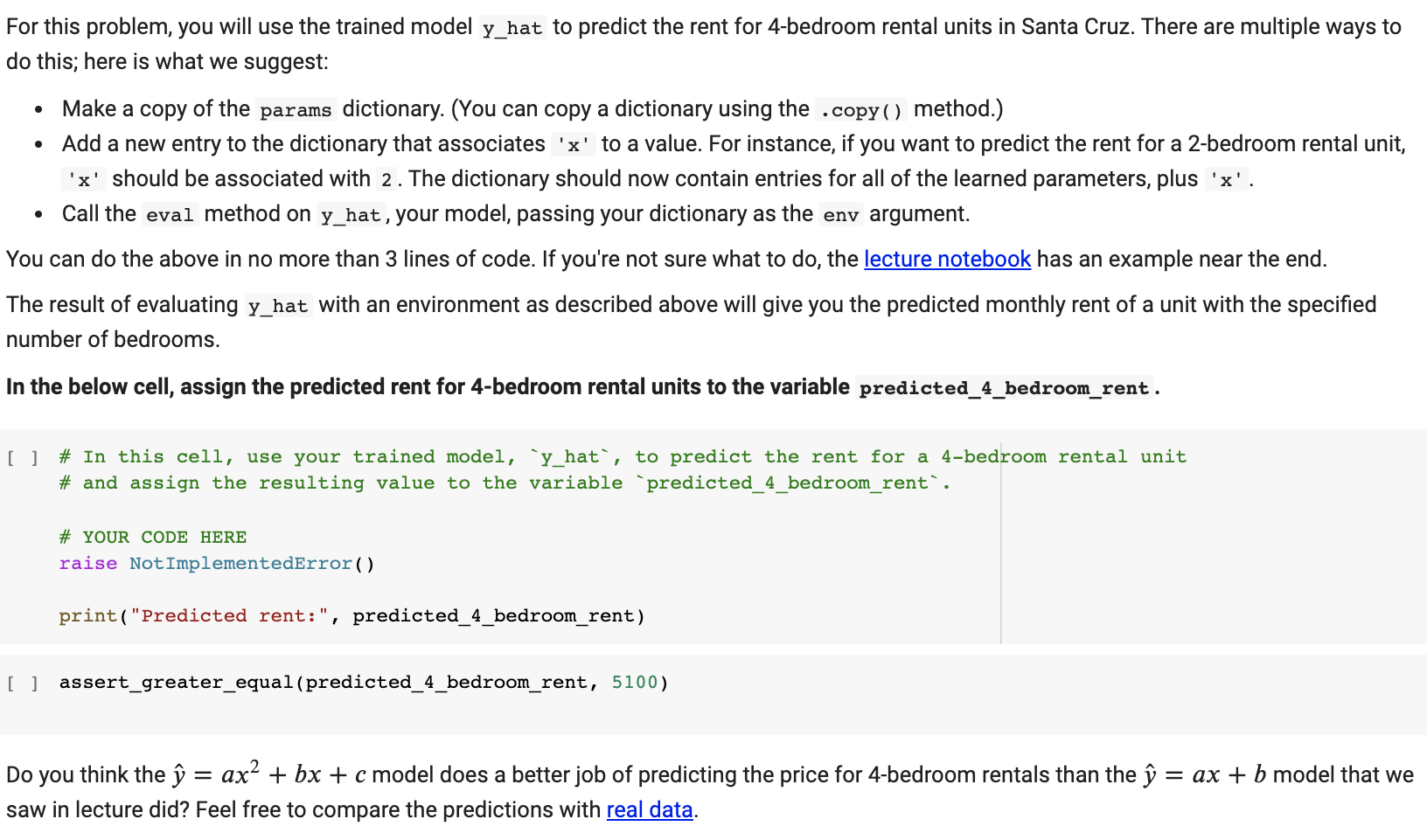

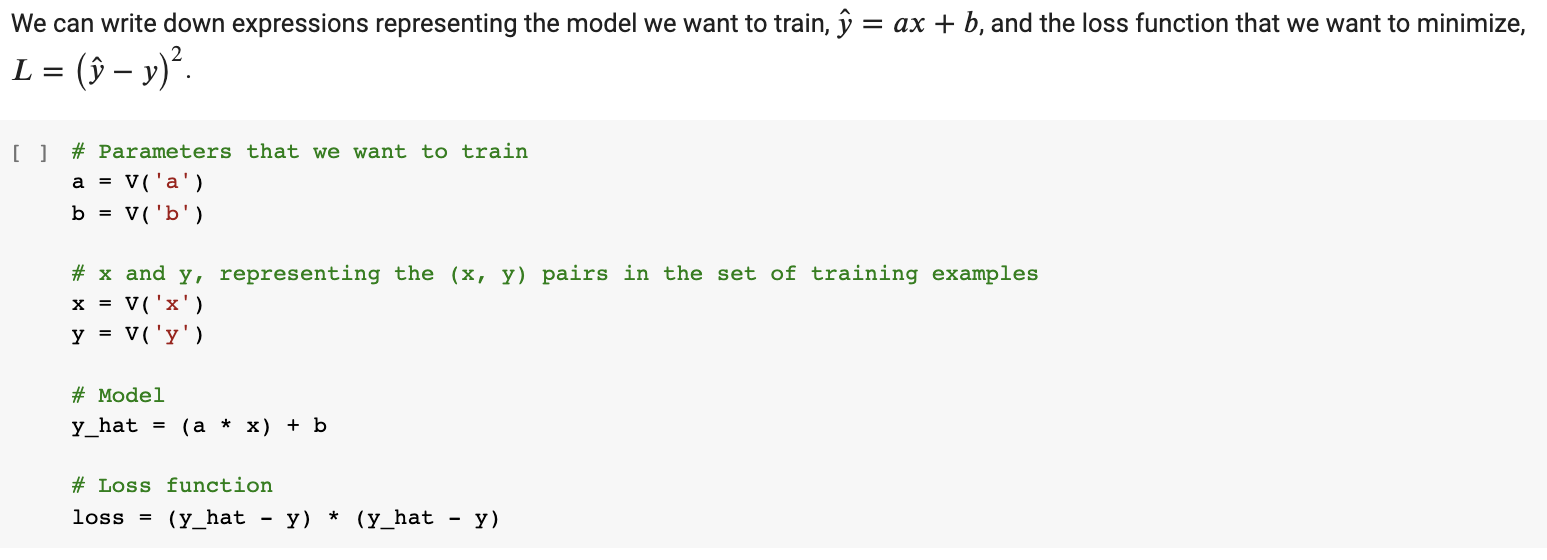

plt.show()

For this problem, you will use the trained model `y_hat` to predict the rent for 4-bedroom rental units in Santa Cruz. There are multiple ways to do this; here is what we suggest:

1. Make a copy of the `params` dictionary. (You can copy a dictionary using the `.copy()` method.)
2. Add a new entry to the dictionary that associates `'x'` with a value. For instance, if you want to predict the rent for a 2-bedroom rental unit, `'x'` should be associated with 2. The dictionary should now contain entries for all of the learned parameters, plus `'x'`.
3. Call the `eval` method on `y_hat`, your model, passing your dictionary as the `env` argument.

You can do the above in no more than 3 lines of code. If you're not sure what to do, the lecture notebook has an example near the end.

Evaluating `y_hat` with an environment built this way gives the predicted monthly rent for a unit with the specified number of bedrooms. In the cell below, assign the predicted rent for 4-bedroom rental units to the variable `predicted_4_bedroom_rent`.

```python
# In this cell, use your trained model, 'y_hat', to predict the rent for a 4-bedroom rental unit
# and assign the resulting value to the variable predicted_4_bedroom_rent.
# YOUR CODE HERE
raise NotImplementedError()
print("Predicted rent:", predicted_4_bedroom_rent)
```

```python
assert_greater_equal(predicted_4_bedroom_rent, 5100)
```

Do you think the $\hat{y} = ax^2 + bx + c$ model does a better job of predicting the price for 4-bedroom rentals than the $\hat{y} = ax + b$ model that we saw in lecture did? Feel free to compare the predictions with real data.

We can write down expressions representing the model we want to train, $\hat{y} = ax + b$, and the loss function that we want to minimize, $L = (\hat{y} - y)^2$:
```python
# Parameters that we want to train
a = v('a')
b = v('b')
# x and y, representing the (x, y) pairs in the set of training examples
x = v('x')
y = v('y')
# Model: y_hat = a*x + b
y_hat = a * x + b
# Loss function: squared error (y_hat - y)^2
loss = (y_hat - y) * (y_hat - y)
```
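For the 4-bedroom prediction task described above, the three suggested steps fit in a small helper: copy `params`, bind `'x'`, then call `eval`. This is a sketch, not the official solution.

Two names in it are assumptions:

- `predict` is a hypothetical helper.
- `QuadraticStandIn` is a hypothetical stand-in for the course's expression objects. It only mimics the `eval(env=...)` method, and its parameter values are made up for illustration, not trained.

```python
def predict(model, params, x_value, var_name="x"):
    """Hypothetical helper: evaluate a trained model at a single input."""
    env = params.copy()          # 1. copy the learned parameters (params itself is not modified)
    env[var_name] = x_value      # 2. bind 'x' to the input, e.g. the number of bedrooms
    return model.eval(env=env)   # 3. evaluate the model in that environment


class QuadraticStandIn:
    """Hypothetical stand-in for y_hat = a*x**2 + b*x + c; only supports eval(env=...)."""

    def eval(self, env):
        return env["a"] * env["x"] ** 2 + env["b"] * env["x"] + env["c"]


if __name__ == "__main__":
    illustrative_params = {"a": 1.0, "b": 2.0, "c": 3.0}  # made-up values, not trained
    print(predict(QuadraticStandIn(), illustrative_params, 4))  # 1*16 + 2*4 + 3 = 27.0
```

`train_model` returns a `(total_loss, params)` tuple, so the dictionary is its second element. Assuming `params` holds that dictionary and `y_hat` is the trained model, the notebook cell would reduce to `predicted_4_bedroom_rent = predict(y_hat, params, 4)`.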
