Question: In the Learning Without Forgetting (LwF) algorithm the parameters are updated by minimizing $\lambda_o \mathcal{L}_{old}(Y_o, \hat{Y}_o) + \mathcal{L}_{new}(Y_n, \hat{Y}_n)$ where $\mathcal{L}_{old}(Y_o, \hat{Y}_o)$ …

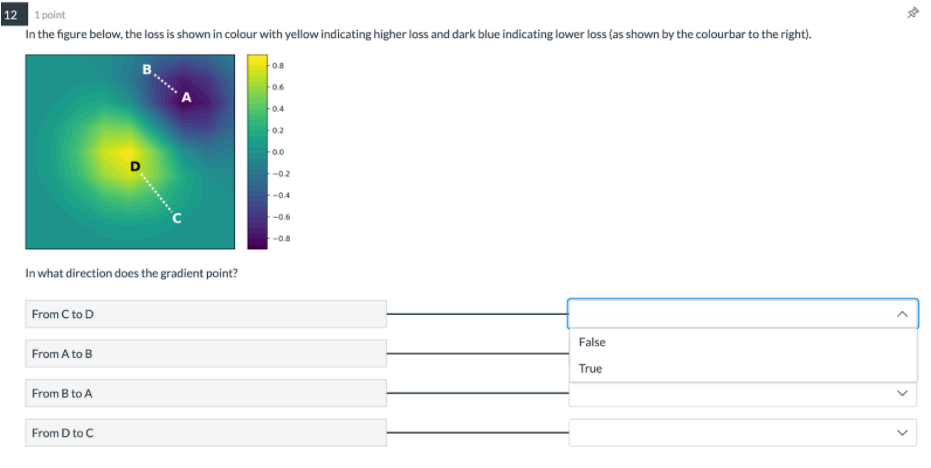

In the Learning Without Forgetting (LwF) algorithm, the parameters are updated by minimizing $\lambda_o \mathcal{L}_{old}(Y_o, \hat{Y}_o) + \mathcal{L}_{new}(Y_n, \hat{Y}_n)$, where $\mathcal{L}_{old}(Y_o, \hat{Y}_o)$ and $\mathcal{L}_{new}(Y_n, \hat{Y}_n)$ are the losses for the old and new tasks, respectively. What role does the parameter $\lambda_o$ play?

- It controls the trade-off between plasticity and stability.
- It is the L2 regularization parameter for the entire network.
- It is the L1 regularization parameter for the entire network.
- It controls the dropout rate.

Question 12 (1 point)

In the accompanying figure, the loss is shown in colour, with yellow indicating higher loss and dark blue indicating lower loss (see the colourbar on the right). In what direction does the gradient point?

- From A to B
- From B to A
- From C to D
- From D to C
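To see how $\lambda_o$ acts as a balance weight in the LwF objective, here is a minimal sketch that replaces both task losses with hypothetical one-parameter quadratics: `old_loss` is smallest at θ = 0 (where the model is assumed to start, having been trained on the old task) and `new_loss` is smallest at θ = 1. The quadratic losses, function names, learning rate, and step count are illustrative assumptions only; LwF itself uses a knowledge-distillation loss for the old task and a cross-entropy loss for the new one.

```python
# Toy illustration of an LwF-style weighted objective
#   J(theta) = lambda_o * L_old(theta) + L_new(theta)
# The quadratic losses below are hypothetical stand-ins, not the real LwF losses.

OLD_OPT = 0.0  # parameter value that is best for the (hypothetical) old task
NEW_OPT = 1.0  # parameter value that is best for the (hypothetical) new task


def old_loss(theta: float) -> float:
    """Stand-in for L_old(Y_o, Y_o_hat): penalizes drifting from the old optimum."""
    return (theta - OLD_OPT) ** 2


def new_loss(theta: float) -> float:
    """Stand-in for L_new(Y_n, Y_n_hat): penalizes missing the new-task optimum."""
    return (theta - NEW_OPT) ** 2


def objective_grad(theta: float, lambda_o: float) -> float:
    """Derivative of lambda_o * old_loss + new_loss with respect to theta."""
    return 2.0 * lambda_o * (theta - OLD_OPT) + 2.0 * (theta - NEW_OPT)


def train(lambda_o: float, lr: float = 0.01, steps: int = 2000) -> float:
    """Plain gradient descent starting from the old-task optimum.

    For this quadratic toy, lr must stay below 1 / (lambda_o + 1) to converge.
    """
    theta = OLD_OPT
    for _ in range(steps):
        theta -= lr * objective_grad(theta, lambda_o)
    return theta


if __name__ == "__main__":
    for lambda_o in (0.0, 1.0, 9.0):
        theta = train(lambda_o)
        print(f"lambda_o={lambda_o:4.1f}  theta={theta:.3f}  "
              f"L_old={old_loss(theta):.3f}  L_new={new_loss(theta):.3f}")
```

Because the toy objective is $\lambda_o\theta^2 + (\theta - 1)^2$, setting its derivative to zero gives $\theta^* = 1/(\lambda_o + 1)$. With $\lambda_o = 0$ the parameter moves all the way to the new-task optimum and the old-task loss grows; larger $\lambda_o$ keeps it closer to the old optimum at the cost of a higher new-task loss. In this sketch, $\lambda_o$ therefore trades new-task fit (plasticity) against old-task retention (stability).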
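For the gradient question, the key fact is that the gradient of a scalar loss points in the direction of steepest increase, that is, from lower (dark blue) toward higher (yellow) loss, while gradient descent steps the opposite way. The sketch below checks this numerically on a hypothetical bowl-shaped loss `loss(x, y) = x**2 + 3*y**2`; this function, the starting point, and the helper names are illustrative assumptions, not the surface shown in the figure.

```python
import math


def loss(x: float, y: float) -> float:
    """Hypothetical bowl-shaped loss with its minimum (darkest blue) at (0, 0)."""
    return x ** 2 + 3.0 * y ** 2


def numerical_gradient(f, x: float, y: float, h: float = 1e-6) -> tuple[float, float]:
    """Central-difference estimate of (df/dx, df/dy)."""
    dfdx = (f(x + h, y) - f(x - h, y)) / (2.0 * h)
    dfdy = (f(x, y + h) - f(x, y - h)) / (2.0 * h)
    return dfdx, dfdy


def unit_step(f, x: float, y: float, eps: float, sign: float) -> tuple[float, float]:
    """Move a distance eps along +gradient (sign=+1) or -gradient (sign=-1)."""
    gx, gy = numerical_gradient(f, x, y)
    norm = math.hypot(gx, gy)
    return x + sign * eps * gx / norm, y + sign * eps * gy / norm


if __name__ == "__main__":
    x0, y0 = 1.0, 0.5
    gx, gy = numerical_gradient(loss, x0, y0)
    # The analytic gradient is (2 * x0, 6 * y0), i.e. (2, 3) at this point.
    print(f"gradient at ({x0}, {y0}) = ({gx:.3f}, {gy:.3f})")
    # Positive dot product with (x0, y0): the gradient points away from the minimum.
    print("gradient points away from the minimum:", gx * x0 + gy * y0 > 0)
    print("step along gradient raises loss:",
          loss(*unit_step(loss, x0, y0, 0.01, +1.0)) > loss(x0, y0))
    print("step against gradient lowers loss:",
          loss(*unit_step(loss, x0, y0, 0.01, -1.0)) < loss(x0, y0))
```

Read against a colour-coded loss plot, this means the gradient runs from the dark-blue side toward the yellow side, perpendicular to the colour contours at each point.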
