Question:

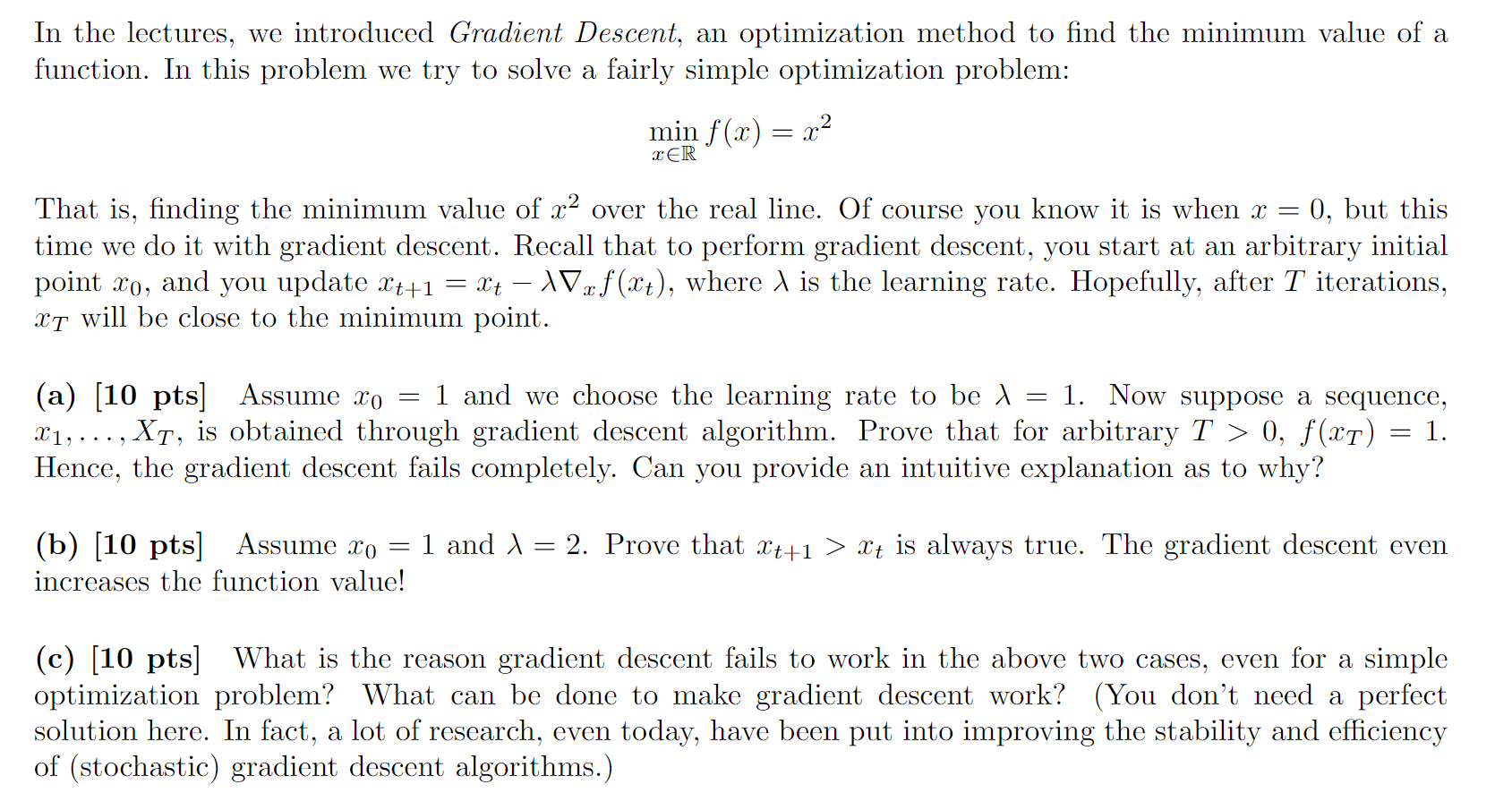

In the lectures, we introduced Gradient Descent, an optimization method for finding the minimum value of a function. In this problem we try to solve a fairly simple optimization problem:

$$\min_{x \in \mathbb{R}} f(x) = x^2$$

That is, we want the minimum value of $x^2$ over the real line. Of course, you know the minimum is at $x = 0$, but this time we find it with gradient descent. Recall that to perform gradient descent, you start at an arbitrary initial point $x_0$ and update

$$x_{t+1} = x_t - \eta \nabla_x f(x_t),$$

where $\eta$ is the learning rate. Hopefully, after $T$ iterations, $x_T$ will be close to the minimum point.

(a) [10 pts] Assume $x_0 = 1$ and we choose the learning rate to be $\eta = 1$. Now suppose a sequence $x_1, \ldots, x_T$ is obtained through the gradient descent algorithm. Prove that for arbitrary $T > 0$, $f(x_T) = 1$. Hence, gradient descent fails completely. Can you provide an intuitive explanation as to why?

(b) [10 pts] Assume $x_0 = 1$ and $\eta = 2$. Prove that $|x_{t+1}| > |x_t|$ is always true. Gradient descent even increases the function value!

(c) [10 pts] What is the reason gradient descent fails to work in the above two cases, even for such a simple optimization problem? What can be done to make gradient descent work? (You don't need a perfect solution here. In fact, a lot of research, even today, has been put into improving the stability and efficiency of (stochastic) gradient descent algorithms.)
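Since $\nabla_x f(x) = 2x$, the update reduces to $x_{t+1} = x_t - 2\eta x_t = (1 - 2\eta)\,x_t$, so $x_T = (1 - 2\eta)^T x_0$. With $x_0 = 1$, $\eta = 1$ gives a factor of $-1$ (the iterate just flips between $1$ and $-1$, so $f(x_T) = 1$), while $\eta = 2$ gives a factor of $-3$ (the magnitude triples every step). The Python sketch below is one way to check this numerically; it is not the course's official solution, the function name `grad_descent_x_squared` is hypothetical, and $\eta = 0.1$ is an arbitrary smaller step size included only for comparison.

```python
def f(x: float) -> float:
    """Objective f(x) = x^2."""
    return x * x


def grad_descent_x_squared(x0: float, eta: float, steps: int) -> list[float]:
    """Run plain gradient descent on f(x) = x^2, whose gradient is 2x.

    Hypothetical helper for illustration. Returns [x_0, x_1, ..., x_steps].
    """
    xs = [x0]
    x = x0
    for _ in range(steps):
        # x_{t+1} = x_t - eta * f'(x_t) = (1 - 2 * eta) * x_t
        x = x - eta * 2 * x
        xs.append(x)
    return xs


if __name__ == "__main__":
    # eta = 1 and eta = 2 are the cases from parts (a) and (b);
    # eta = 0.1 is an arbitrary smaller step size for comparison.
    for eta in (1.0, 2.0, 0.1):
        xs = grad_descent_x_squared(1.0, eta, 5)
        print(f"eta={eta}: x={xs}  f(x)={[f(x) for x in xs]}")
```

Running it prints the iterates and function values for each step size. More generally, the factor $1 - 2\eta$ has magnitude below 1 exactly when $0 < \eta < 1$, which is why a smaller step size restores convergence on this particular problem.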
