Question: In this example, we model an autonomous vacuum cleaner operating in a room as an MDP with four states. We assume that there is

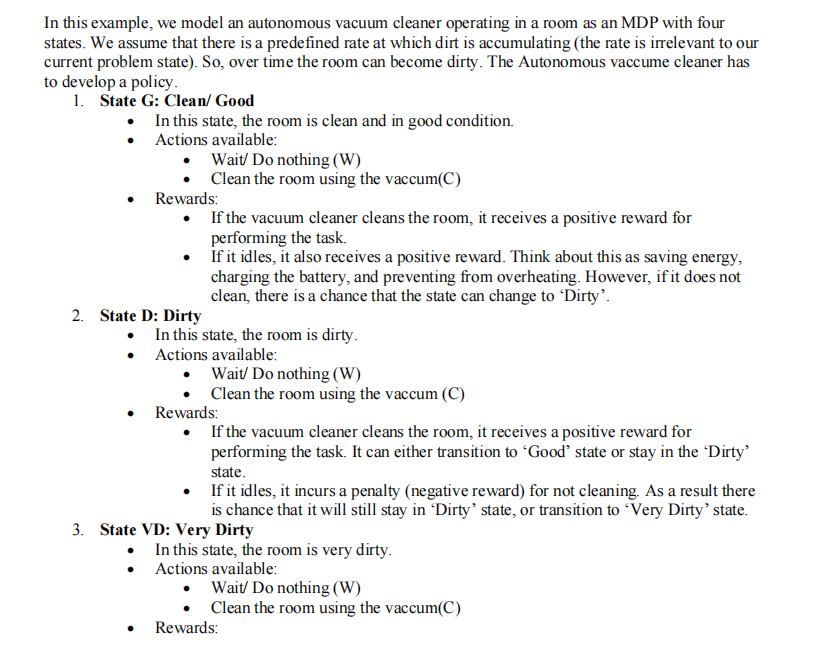

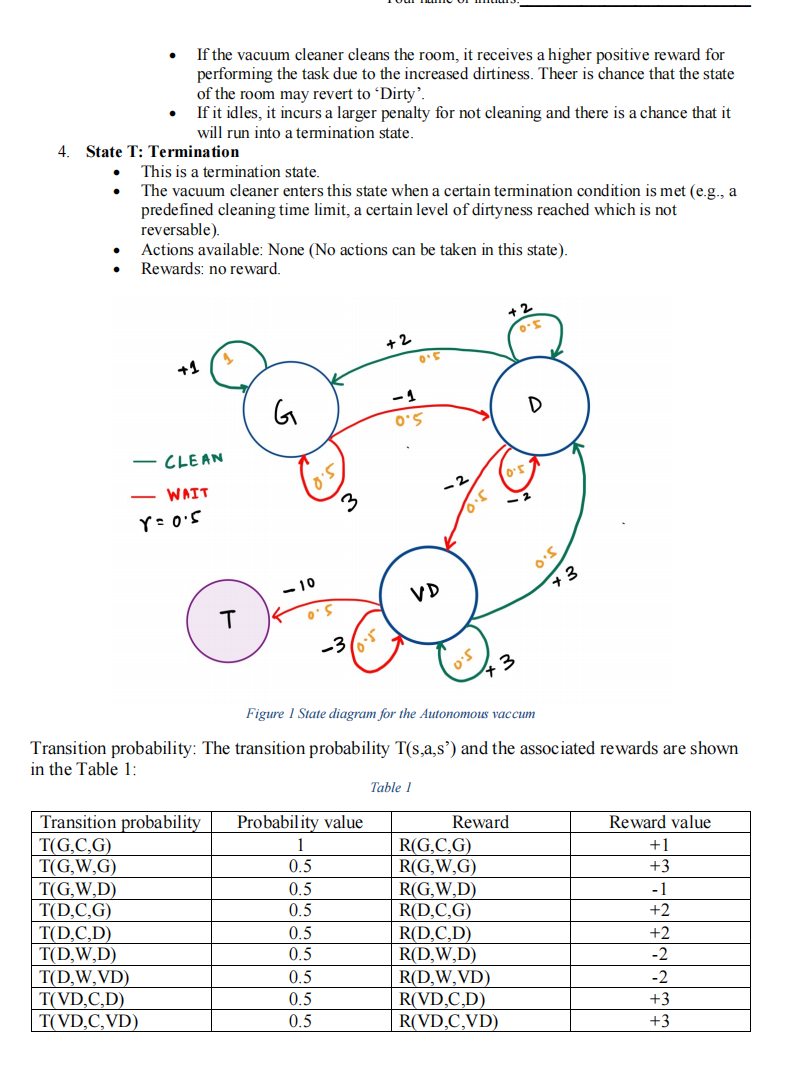

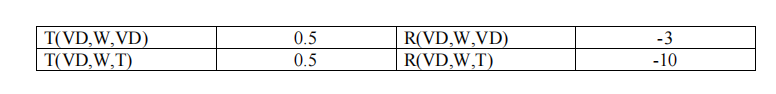

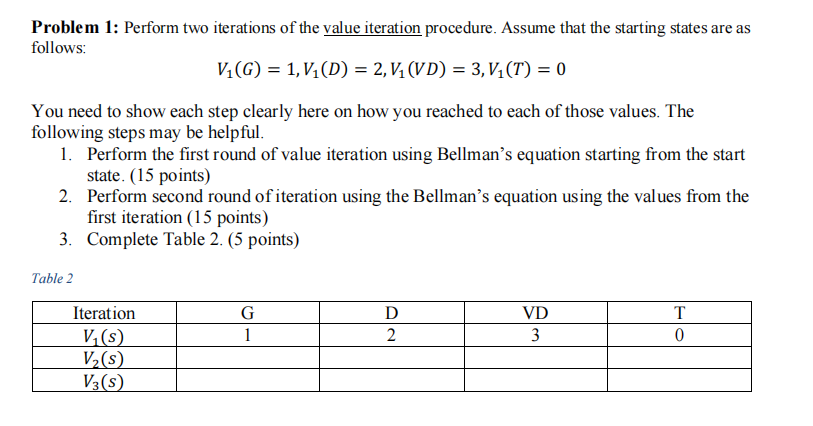

In this example, we model an autonomous vacuum cleaner operating in a room as an MDP with four states. We assume that there is a predefined rate at which dirt is accumulating (the rate is irrelevant to our current problem state). So, over time the room can become dirty. The Autonomous vaccume cleaner has to develop a policy. 1. State G: Clean/Good In this state, the room is clean and in good condition. Actions available: 2. State D: Dirty Wait/ Do nothing (W) Clean the room using the vaccum(C) Rewards: If the vacuum cleaner cleans the room, it receives a positive reward for performing the task. In this state, the room is dirty. Actions available: If it idles, it also receives a positive reward. Think about this as saving energy, charging the battery, and preventing from overheating. However, if it does not clean, there is a chance that the state can change to 'Dirty'. Wait/ Do nothing (W) Clean the room using the vaccum (C) Rewards: If the vacuum cleaner cleans the room, it receives a positive reward for performing the task. It can either transition to 'Good' state or stay in the 'Dirty' state. If it idles, it incurs a penalty (negative reward) for not cleaning. As a result there is chance that it will still stay in 'Dirty' state, or transition to "Very Dirty' state. 3. State VD: Very Dirty In this state, the room is very dirty. Actions available: Wait/ Do nothing (W) Clean the room using the vaccum(C) Rewards: 4. State T: Termination T(G,W,D) T(D,C,G) T(D,C,D) T(D,W,D) If the vacuum cleaner cleans the room, it receives a higher positive reward for performing the task due to the increased dirtiness. Theer is chance that the state of the room may revert to 'Dirty'. If it idles, it incurs a larger penalty for not cleaning and there is a chance that it will run into a termination state. - This is a termination state. The vacuum cleaner enters this state when a certain termination condition is met (e.g., a predefined cleaning time limit, a certain level of dirtyness reached which is not reversable). Actions available: None (No actions can be taken in this state). Rewards: no reward. T(D,W,VD) T(VD,C,D) T(VD,C,VD) +1 CLEAN - WAIT Y = 0.5 2 T G -10 Transition probability Probability value T(G,C,G) T(G,W,G) 1 0.5 0.5 0.5 3 0.5 0.5 -3 0.5 0.5 0.5 +2 0.5 -1 0.5 Figure 1 State diagram for the Autonomous vaccum Transition probability: The transition probability T(s,a,s') and the associated rewards are shown in the Table 1: VD Table 1 0.5 R(G,C,G) R(G,W,G) R(G,W,D) R(D,C,G) Reward R(D,C,D) R(D,W,D) +2 0.5 +3 R(D,W,VD) R(VD,C,D) R(VD,C,VD) D +3 Reward value +1 +3 -1 +2 +2 -2 -2 +3 +3 T(VD,W,VD) T(VD,W,T) 0.5 0.5 R(VD,W,VD) R(VD,W,T) -3 -10 Problem 1: Perform two iterations of the value iteration procedure. Assume that the starting states are as follows: V(G) = 1, V(D) = 2, V (VD) = 3, V(T) = 0 You need to show each step clearly here on how you reached to each of those values. The following steps may be helpful. 1. Perform the first round of value iteration using Bellman's equation starting from the start state. (15 points) 2. Perform second round of iteration using the Bellman's equation using the values from the first iteration (15 points) 3. Complete Table 2. (5 points) Table 2 Iteration V(s) V (s) V3(S) G 1 D 2 VD 3 T 0

Step by Step Solution

3.37 Rating (153 Votes )

There are 3 Steps involved in it

First round of value iteration We start by applying Bellmans equation to each state V2G maxa RG gamma sums Pssa V1s ight For state G the possible actions are D and VD If the agent takes action D it wi... View full answer

Get step-by-step solutions from verified subject matter experts