Question:

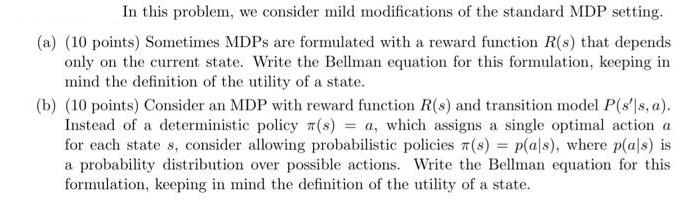

In this problem, we consider mild modifications of the standard MDP setting.

(a) (10 points) Sometimes MDPs are formulated with a reward function R(s) that depends only on the current state. Write the Bellman equation for this formulation, keeping in mind the definition of the utility of a state.

(b) (10 points) Consider an MDP with reward function R(s) and transition model P(s' | s, a). Instead of a deterministic policy π(s) = a, which assigns a single optimal action a to each state s, consider allowing probabilistic policies π(s) = p(a | s), where p(a | s) is a probability distribution over possible actions. Write the Bellman equation for this formulation, keeping in mind the definition of the utility of a state.
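To make the two formulations concrete, the sketch below runs value iteration for a state-reward MDP and evaluates a probabilistic policy p(a | s) on a tiny three-state example. Everything specific here is an assumption for illustration. The state and action names, the rewards, the transition probabilities, and the discount γ = 0.9 are hypothetical and do not come from the question.

The value-iteration update it uses is U(s) ← R(s) + γ max_a Σ_{s'} P(s' | s, a) U(s'). The policy-evaluation update is U(s) ← R(s) + γ Σ_a p(a | s) Σ_{s'} P(s' | s, a) U(s').

```python
# Minimal sketch: value iteration for an MDP whose reward R(s) depends only on
# the current state, plus evaluation of a probabilistic policy p(a|s).
# The 3-state MDP below is HYPOTHETICAL, chosen only for illustration.

GAMMA = 0.9  # assumed discount factor
STATES = ["s0", "s1", "s2"]
ACTIONS = ["stay", "move"]

# R(s): reward received in state s (hypothetical values)
R = {"s0": 0.0, "s1": 1.0, "s2": -1.0}

# P[s][a] maps s' -> P(s'|s,a) (hypothetical transition model)
P = {
    "s0": {"stay": {"s0": 0.9, "s1": 0.1}, "move": {"s1": 0.8, "s2": 0.2}},
    "s1": {"stay": {"s1": 1.0}, "move": {"s0": 0.5, "s2": 0.5}},
    "s2": {"stay": {"s2": 1.0}, "move": {"s0": 1.0}},
}


def expected_next(U, s, a):
    """Return sum over s' of P(s'|s,a) * U(s')."""
    return sum(p * U[s2] for s2, p in P[s][a].items())


def value_iteration(tol=1e-10):
    """Iterate U(s) <- R(s) + gamma * max_a sum_s' P(s'|s,a) U(s') to a fixed point."""
    U = {s: 0.0 for s in STATES}
    while True:
        new_U = {
            s: R[s] + GAMMA * max(expected_next(U, s, a) for a in ACTIONS)
            for s in STATES
        }
        if max(abs(new_U[s] - U[s]) for s in STATES) < tol:
            return new_U
        U = new_U


def evaluate_stochastic_policy(pi, tol=1e-10):
    """Iterate U(s) <- R(s) + gamma * sum_a p(a|s) sum_s' P(s'|s,a) U(s').

    pi[s] maps each action a to p(a|s); missing actions have probability 0.
    """
    U = {s: 0.0 for s in STATES}
    while True:
        new_U = {
            s: R[s] + GAMMA * sum(pa * expected_next(U, s, a) for a, pa in pi[s].items())
            for s in STATES
        }
        if max(abs(new_U[s] - U[s]) for s in STATES) < tol:
            return new_U
        U = new_U


def greedy_policy(U):
    """Deterministic policy argmax_a sum_s' P(s'|s,a) U(s'), written as p(a|s) = 1."""
    return {
        s: {max(ACTIONS, key=lambda a: expected_next(U, s, a)): 1.0}
        for s in STATES
    }


if __name__ == "__main__":
    U_star = value_iteration()
    uniform = {s: {a: 1.0 / len(ACTIONS) for a in ACTIONS} for s in STATES}
    print("optimal U:", U_star)
    print("uniform-random policy U:", evaluate_stochastic_policy(uniform))
    print("greedy policy U:", evaluate_stochastic_policy(greedy_policy(U_star)))
```

In this example, two results line up with the point that allowing probabilistic policies does not raise the optimal utility:

- Evaluating the greedy policy as a degenerate distribution (probability 1 on one action) reproduces the value-iteration utilities.
- The uniform-random policy scores no higher in any state.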

Solution:

(a) Bellman equation for an MDP with reward function R(s): In a Markov decision process (MDP) where the reward ...
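For reference, the standard textbook forms of both equations are written out below. This is a sketch under a few assumptions:

- The discount factor satisfies 0 ≤ γ ≤ 1, with γ < 1 for an infinite horizon.
- Utility is defined as the expected discounted sum of state rewards.
- The symbols γ, S_t and U^π are notation introduced here, not taken from the truncated answer.

```latex
% Utility of state s under policy \pi, and the optimal utility
U^{\pi}(s) = \mathbb{E}_{\pi}\Big[\sum_{t=0}^{\infty} \gamma^{t} R(S_t) \;\Big|\; S_0 = s\Big],
\qquad U(s) = \max_{\pi} U^{\pi}(s)

% (a) The reward depends only on the current state, so R(s) sits outside the max
U(s) = R(s) + \gamma \max_{a} \sum_{s'} P(s' \mid s, a)\, U(s')

% (b) Fixed probabilistic policy \pi(s) = p(a \mid s)
U^{\pi}(s) = R(s) + \gamma \sum_{a} p(a \mid s) \sum_{s'} P(s' \mid s, a)\, U^{\pi}(s')

% (b) Optimal utility when the policy may be any distribution p(\cdot \mid s)
U(s) = R(s) + \gamma \max_{p(\cdot \mid s)} \sum_{a} p(a \mid s) \sum_{s'} P(s' \mid s, a)\, U(s')
     = R(s) + \gamma \max_{a} \sum_{s'} P(s' \mid s, a)\, U(s')
```

The last equality holds because the objective is linear in p(· | s). Its maximum over the probability simplex is therefore attained at a vertex, i.e. by putting probability 1 on a single best action. Allowing probabilistic policies thus does not change the optimal utility.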