Question:

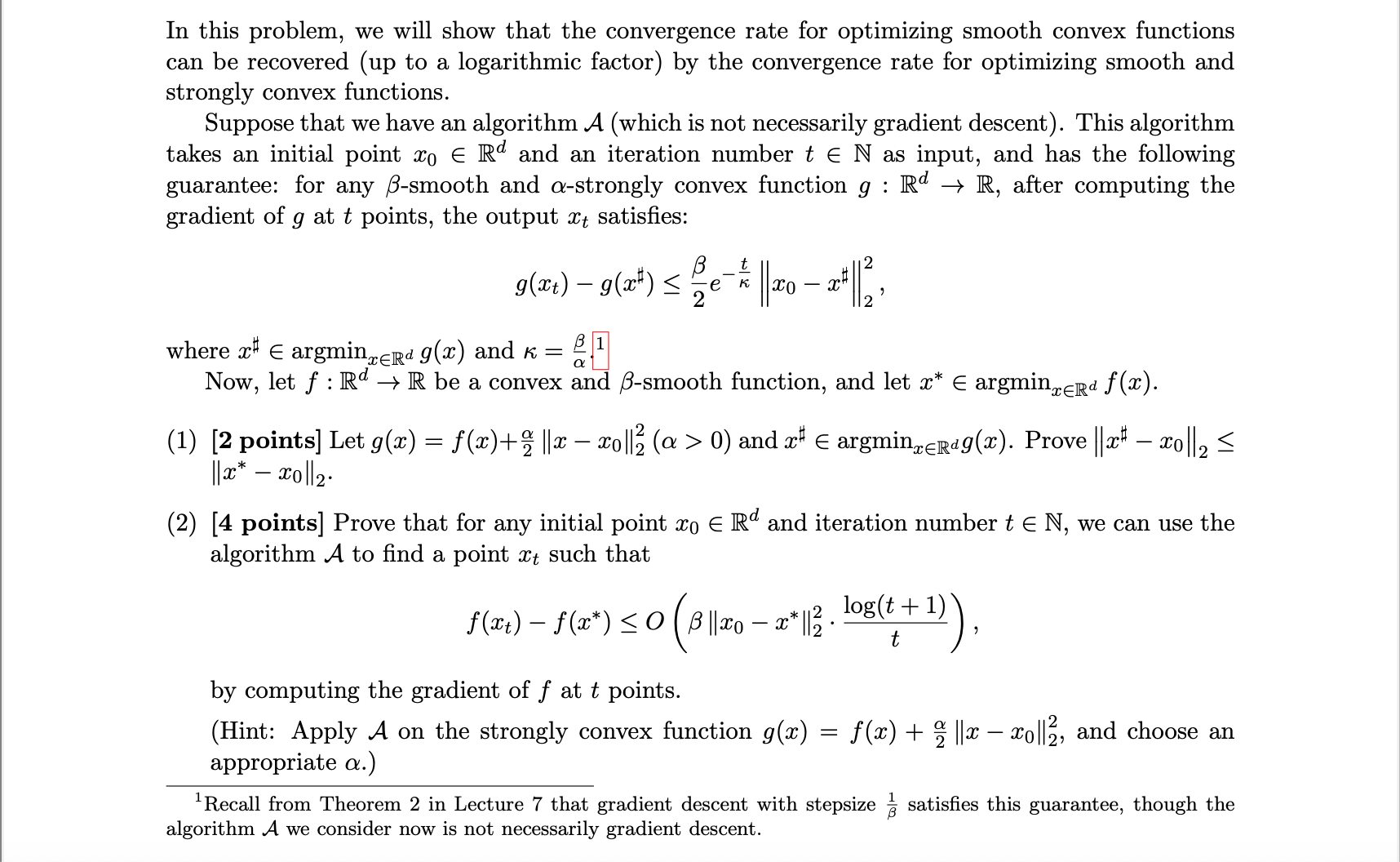

In this problem, we will show that the convergence rate for optimizing smooth convex functions can be recovered (up to a logarithmic factor) from the convergence rate for optimizing smooth and strongly convex functions.

Suppose that we have an algorithm $A$ (which is not necessarily gradient descent). This algorithm takes an initial point $x_0 \in \mathbb{R}^d$ and an iteration number $t \in \mathbb{N}$ as input, and has the following guarantee: for any $\beta$-smooth and $\alpha$-strongly convex function $g : \mathbb{R}^d \to \mathbb{R}$, after computing the gradient of $g$ at $t$ points, the output $x_t$ satisfies $g(x_t) - g(\hat{x})$ [...]

Let $f : \mathbb{R}^d \to \mathbb{R}$ be a convex and $\beta$-smooth function, and let $x^* \in \arg\min_{x \in \mathbb{R}^d} f(x)$.

(1) [2 points] Let $g(x) = f(x) + \frac{\alpha}{2}\|x - x_0\|_2^2$ ($\alpha > 0$) and $\hat{x} \in \arg\min_{x \in \mathbb{R}^d} g(x)$. Prove $\|\hat{x} - x_0\|_2$ [...]

(2) [4 points] Prove that for any initial point $x_0 \in \mathbb{R}^d$ and iteration number $t \in \mathbb{N}$, we can use the algorithm $A$ to find a point $x_t$ such that $f(x_t) - f(x^*)$ [...]
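The reduction behind this problem can be sanity-checked numerically. The sketch below is only an illustration under stated assumptions, not the intended solution: the regularizer coefficient is taken to be $\alpha/2$, the test function `f`, the chosen minimizer `x_star`, and plain gradient descent (standing in for the unspecified algorithm $A$) are all hypothetical choices. It checks two facts that follow from $g(\hat{x}) \le g(x^*)$ and $f(x^*) \le f(\hat{x})$: the minimizer of the regularized function is no farther from $x_0$ than $x^*$ is, and $f(\hat{x}) - f(x^*) \le \frac{\alpha}{2}\|x^* - x_0\|_2^2$.

```python
import math

# Hypothetical test function: convex and 1-smooth, but not strongly convex
# (it is flat along the second coordinate).
def f(x):
    return 0.5 * (x[0] - 3.0) ** 2

def grad_f(x):
    return [x[0] - 3.0, 0.0]

def make_regularized(x0, alpha):
    """Return g(x) = f(x) + (alpha/2) * ||x - x0||^2 and its gradient.

    The alpha/2 coefficient is an assumption made for this sketch.
    """
    def g(x):
        return f(x) + 0.5 * alpha * sum((xi - x0i) ** 2 for xi, x0i in zip(x, x0))

    def grad_g(x):
        return [gi + alpha * (xi - x0i) for gi, xi, x0i in zip(grad_f(x), x, x0)]

    return g, grad_g

def gradient_descent(grad, x0, t, step):
    """Plain gradient descent, used here only as a stand-in for algorithm A."""
    x = list(x0)
    for _ in range(t):
        x = [xi - step * gi for xi, gi in zip(x, grad(x))]
    return x

def dist(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

beta, alpha = 1.0, 0.1
x0 = [0.0, 0.0]
x_star = [3.0, 0.0]  # one minimizer of f, chosen for illustration

g, grad_g = make_regularized(x0, alpha)
# g is (beta + alpha)-smooth, so 1 / (beta + alpha) is a safe step size.
x_t = gradient_descent(grad_g, x0, t=500, step=1.0 / (beta + alpha))

print("||x_t - x0||   =", dist(x_t, x0))
print("||x* - x0||    =", dist(x_star, x0))
print("f(x_t) - f(x*) =", f(x_t) - f(x_star))
print("(alpha/2)||x* - x0||^2 =", 0.5 * alpha * dist(x_star, x0) ** 2)
```

Shrinking `alpha` tightens the bound on the optimality gap in $f$ but makes $g$ less strongly convex, which is the trade-off a proof of part (2) has to balance.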
