Question:

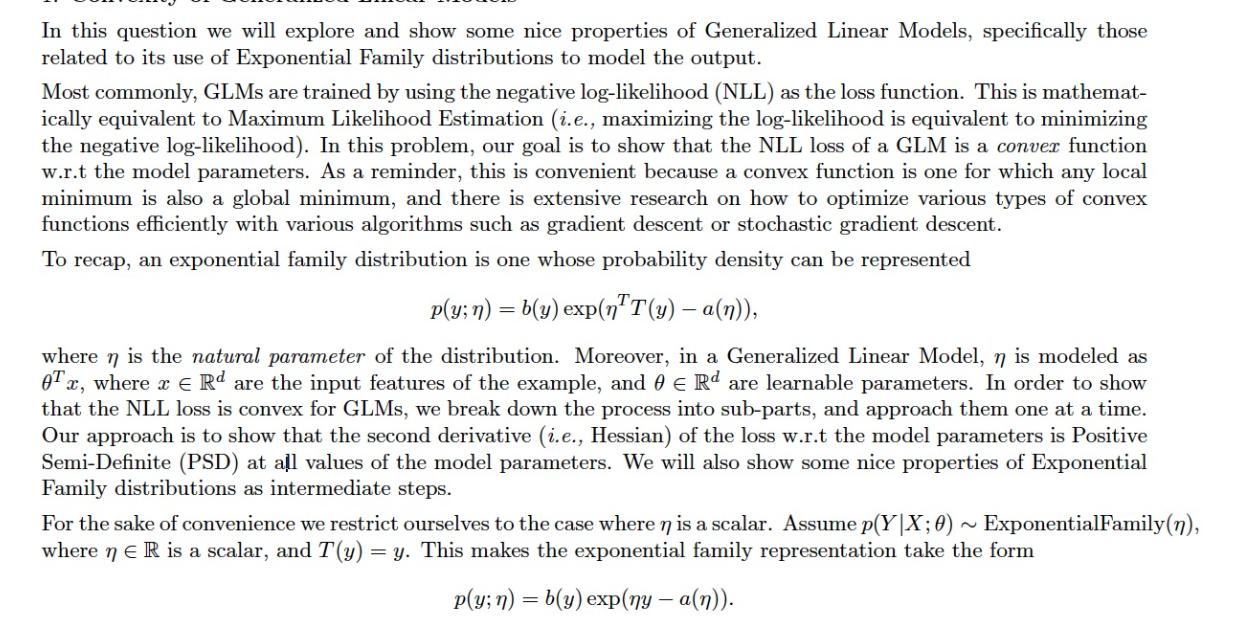

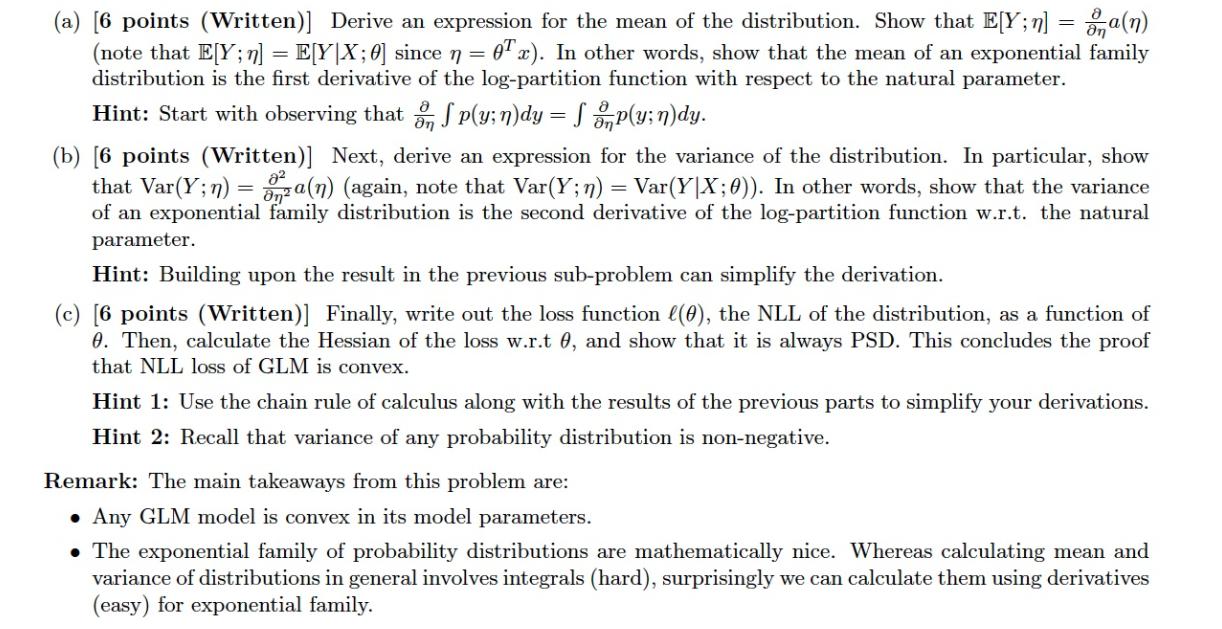

In this question we will explore and show some nice properties of Generalized Linear Models (GLMs), specifically those related to their use of exponential family distributions to model the output. Most commonly, GLMs are trained by using the negative log-likelihood (NLL) as the loss function. This is mathematically equivalent to Maximum Likelihood Estimation (i.e., maximizing the log-likelihood is equivalent to minimizing the negative log-likelihood). In this problem, our goal is to show that the NLL loss of a GLM is a convex function w.r.t. the model parameters. As a reminder, this is convenient because for a convex function any local minimum is also a global minimum, and there is extensive research on how to optimize various types of convex functions efficiently with algorithms such as gradient descent or stochastic gradient descent.

To recap, an exponential family distribution is one whose probability density can be represented as $p(y;\eta) = b(y)\exp\big(\eta^T T(y) - a(\eta)\big)$, where $\eta$ is the natural parameter of the distribution. Moreover, in a Generalized Linear Model, $\eta$ is modeled as $\theta^T x$, where $x \in \mathbb{R}^d$ are the input features of the example and $\theta \in \mathbb{R}^d$ are learnable parameters. In order to show that the NLL loss is convex for GLMs, we break down the process into sub-parts and approach them one at a time. Our approach is to show that the second derivative (i.e., Hessian) of the loss w.r.t. the model parameters is Positive Semi-Definite (PSD) at all values of the model parameters. We will also show some nice properties of exponential family distributions as intermediate steps.

For the sake of convenience we restrict ourselves to the case where $\eta$ is a scalar. Assume $p(Y \mid X; \theta) \sim \text{ExponentialFamily}(\eta)$, where $\eta \in \mathbb{R}$ is a scalar, and $T(y) = y$. This makes the exponential family representation take the form $p(y;\eta) = b(y)\exp\big(\eta y - a(\eta)\big)$.

(a) [6 points (Written)] Derive an expression for the mean of the distribution. Show that $E[Y;\eta] = \frac{\partial}{\partial\eta} a(\eta)$ (note that $E[Y;\eta] = E[Y \mid X;\theta]$ since $\eta = \theta^T x$). In other words, show that the mean of an exponential family distribution is the first derivative of the log-partition function with respect to the natural parameter. Hint: Start with observing that $\frac{\partial}{\partial\eta}\int p(y;\eta)\,dy = \int \frac{\partial}{\partial\eta} p(y;\eta)\,dy$.

(b) [6 points (Written)] Next, derive an expression for the variance of the distribution. In particular, show that $\mathrm{Var}(Y;\eta) = \frac{\partial^2}{\partial\eta^2} a(\eta)$ (again, note that $\mathrm{Var}(Y;\eta) = \mathrm{Var}(Y \mid X;\theta)$). In other words, show that the variance of an exponential family distribution is the second derivative of the log-partition function w.r.t. the natural parameter. Hint: Building upon the result in the previous sub-problem can simplify the derivation.

(c) [6 points (Written)] Finally, write out the loss function $\ell(\theta)$, the NLL of the distribution, as a function of $\theta$. Then, calculate the Hessian of the loss w.r.t. $\theta$, and show that it is always PSD. This concludes the proof that the NLL loss of a GLM is convex. Hint 1: Use the chain rule of calculus along with the results of the previous parts to simplify your derivations. Hint 2: Recall that the variance of any probability distribution is non-negative.

Remark: The main takeaways from this problem are:

- The NLL loss of any GLM is convex in its model parameters.
- The exponential family of probability distributions is mathematically nice. Whereas calculating the mean and variance of distributions in general involves integrals (hard), surprisingly we can calculate them using derivatives (easy) for the exponential family.
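A sketch of the derivations for parts (a) to (c), assuming that differentiation and integration can be interchanged (the premise of the hint in part (a)), writing $a'$ and $a''$ for derivatives of $a$ w.r.t. $\eta$, and taking the loss to be the NLL summed over $n$ training examples $(x^{(i)}, y^{(i)})$ (a single example is the case $n = 1$). For a discrete $Y$, replace the integrals with sums.

$$
\begin{aligned}
\text{(a)}\quad 0 &= \frac{\partial}{\partial\eta}\int p(y;\eta)\,dy = \int \big(y - a'(\eta)\big)\,p(y;\eta)\,dy = E[Y;\eta] - a'(\eta) \\
\text{(b)}\quad a''(\eta) &= \frac{\partial}{\partial\eta}\int y\,p(y;\eta)\,dy = \int y\,\big(y - a'(\eta)\big)\,p(y;\eta)\,dy = E[Y^2;\eta] - \big(E[Y;\eta]\big)^2 = \mathrm{Var}(Y;\eta)
\end{aligned}
$$

$$
\begin{aligned}
\text{(c)}\quad \ell(\theta) &= -\sum_{i=1}^n \log p\big(y^{(i)}; \theta^T x^{(i)}\big) = \sum_{i=1}^n \Big[a\big(\theta^T x^{(i)}\big) - y^{(i)}\,\theta^T x^{(i)} - \log b\big(y^{(i)}\big)\Big] \\
\nabla_\theta \ell(\theta) &= \sum_{i=1}^n \Big[a'\big(\theta^T x^{(i)}\big) - y^{(i)}\Big]\,x^{(i)} \\
\nabla^2_\theta \ell(\theta) &= \sum_{i=1}^n a''\big(\theta^T x^{(i)}\big)\,x^{(i)} x^{(i)T} \\
z^T \nabla^2_\theta \ell(\theta)\, z &= \sum_{i=1}^n \mathrm{Var}\big(Y \mid x^{(i)}; \theta\big)\,\big(z^T x^{(i)}\big)^2 \ge 0 \quad \text{for all } z \in \mathbb{R}^d
\end{aligned}
$$

The first line of (a) uses $\frac{\partial}{\partial\eta} p(y;\eta) = \big(y - a'(\eta)\big)\,p(y;\eta)$ and $\int p(y;\eta)\,dy = 1$; (b) differentiates the result of (a) once more; and the last line of (c) uses (b) together with the non-negativity of variance, so the Hessian is PSD for every $\theta$.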

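As a numerical sanity check of parts (a) to (c), here is a minimal sketch using two familiar scalar exponential families with $T(y) = y$: Bernoulli ($b(y) = 1$, $a(\eta) = \log(1 + e^\eta)$) and Poisson ($b(y) = 1/y!$, $a(\eta) = e^\eta$). These families, the helper names (`check_mean_and_variance`, `curvature`, `numeric_curvature`), the truncated Poisson support, and the finite-difference step sizes are illustrative assumptions, not part of the problem. The script compares the mean and variance obtained by summing over the support with finite-difference derivatives of $a(\eta)$. Then, for a Bernoulli GLM (logistic regression) on synthetic random data, it compares the analytic curvature $z^T H z$ with a finite-difference second directional derivative of the NLL.

```python
import math
import random

# Scalar exponential families with T(y) = y: p(y; eta) = b(y) * exp(eta * y - a(eta)).
def a_bernoulli(eta):
    # Bernoulli: b(y) = 1, a(eta) = log(1 + e^eta)
    return math.log1p(math.exp(eta))

def a_poisson(eta):
    # Poisson: b(y) = 1 / y!, a(eta) = e^eta
    return math.exp(eta)

# name -> (log-partition a, log b(y), support used for the sums)
FAMILIES = {
    "bernoulli": (a_bernoulli, lambda y: 0.0, range(2)),
    # Poisson support truncated at 99 (assumption: the tail is negligible for moderate eta)
    "poisson": (a_poisson, lambda y: -math.lgamma(y + 1), range(100)),
}

def d1(f, x, h=1e-4):
    # Central-difference approximation of f'(x)
    return (f(x + h) - f(x - h)) / (2 * h)

def d2(f, x, h=1e-4):
    # Central-difference approximation of f''(x)
    return (f(x + h) - 2 * f(x) + f(x - h)) / h ** 2

def check_mean_and_variance(eta):
    # For each family: (mean by summation, a'(eta), variance by summation, a''(eta))
    results = {}
    for name, (a, log_b, support) in FAMILIES.items():
        probs = [math.exp(log_b(y) + eta * y - a(eta)) for y in support]
        mean = sum(y * p for y, p in zip(support, probs))
        var = sum((y - mean) ** 2 * p for y, p in zip(support, probs))
        results[name] = (mean, d1(a, eta), var, d2(a, eta))
    return results

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(u, w):
    return sum(ui * wi for ui, wi in zip(u, w))

def nll(theta, xs, ys):
    # Bernoulli GLM NLL: sum_i a(theta^T x_i) - y_i * theta^T x_i (log b(y) = 0)
    return sum(a_bernoulli(dot(theta, x)) - y * dot(theta, x) for x, y in zip(xs, ys))

def curvature(theta, xs, z):
    # z^T H z with H = sum_i a''(theta^T x_i) x_i x_i^T, where a''(eta) = sigmoid(eta) * (1 - sigmoid(eta))
    return sum(sigmoid(dot(theta, x)) * (1 - sigmoid(dot(theta, x))) * dot(z, x) ** 2 for x in xs)

def numeric_curvature(theta, xs, ys, z, h=1e-3):
    # Second directional derivative of the NLL along z, by central differences
    plus = [t + h * zi for t, zi in zip(theta, z)]
    minus = [t - h * zi for t, zi in zip(theta, z)]
    return (nll(plus, xs, ys) - 2 * nll(theta, xs, ys) + nll(minus, xs, ys)) / h ** 2

if __name__ == "__main__":
    for name, (mean, da, var, dda) in check_mean_and_variance(0.7).items():
        print(f"{name}: mean={mean:.6f} a'={da:.6f}  var={var:.6f} a''={dda:.6f}")
    rng = random.Random(0)  # synthetic data, for illustration only
    xs = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(20)]
    ys = [rng.randint(0, 1) for _ in range(20)]
    theta = [rng.gauss(0, 1) for _ in range(3)]
    z = [rng.gauss(0, 1) for _ in range(3)]
    print(curvature(theta, xs, z), numeric_curvature(theta, xs, ys, z))
```

Running the script should print, for each family, mean and $a'(\eta)$ values (and variance and $a''(\eta)$ values) that agree up to finite-difference error, followed by two nearly equal, non-negative curvature values. Only the standard library is used so that the sketch stays self-contained.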
