Question: In transfer learning for deep neural networks, why is it common to use models pretrained on large datasets like ImageNet?

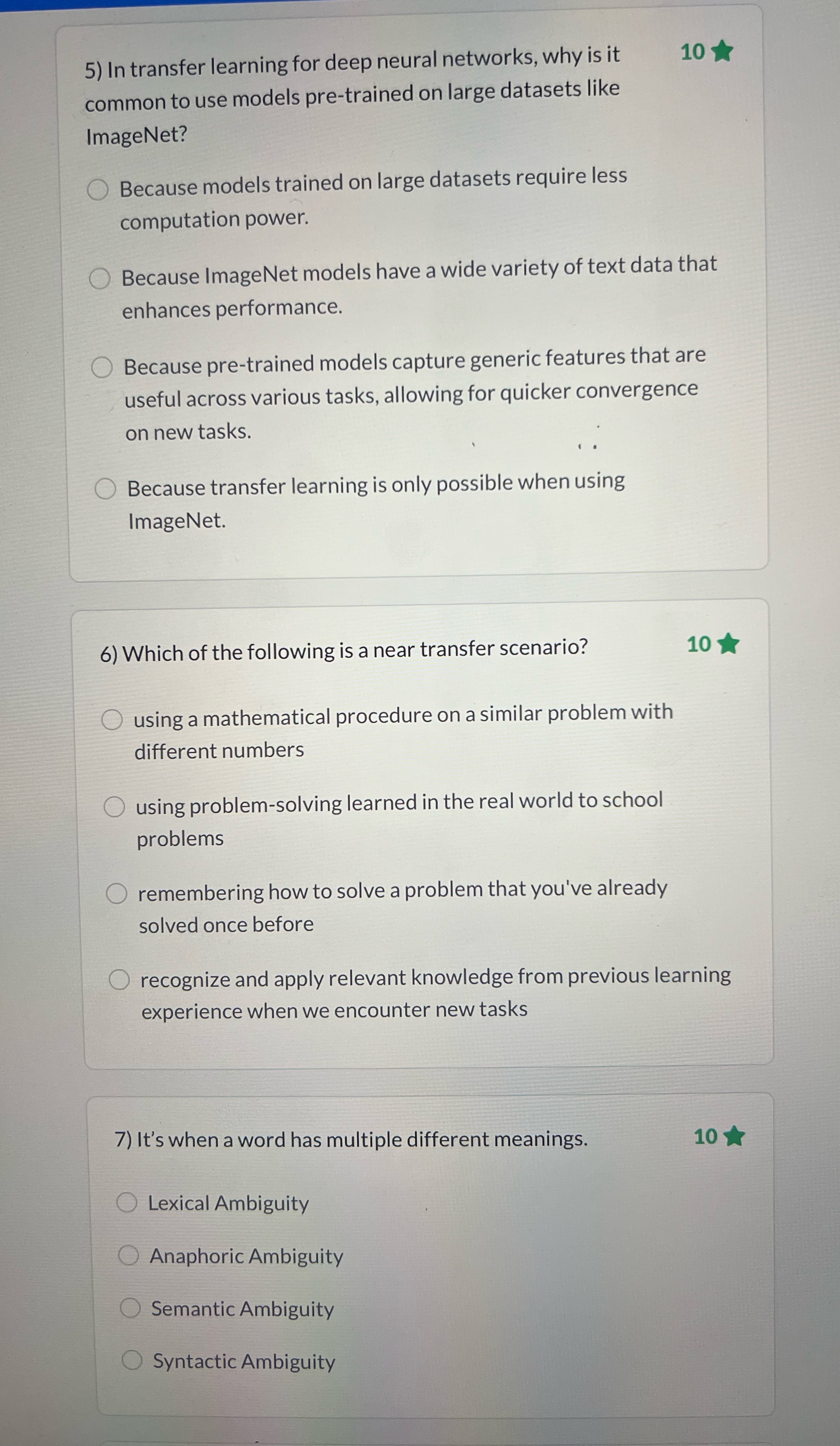

Because models trained on large datasets require less computation power.

Because ImageNet models have a wide variety of text data that enhances performance.

Because pretrained models capture generic features that are useful across various tasks, allowing for quicker convergence on new tasks.

Because transfer learning is only possible when using ImageNet.
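The idea behind the option about generic features can be sketched in a few lines. This is a minimal illustration in plain Python, not a real deep learning workflow: the fixed weights in `FROZEN_WEIGHTS` are a hypothetical stand-in for a backbone pretrained on a large dataset, and `extract_features`, `train_head`, `predict`, and the toy data are all made-up names and values. Only the small new head is trained on the target task.

```python
import math

# Hypothetical stand-in for a pretrained backbone: fixed weights that are
# never updated while training on the new task (a tuple, so it is immutable).
FROZEN_WEIGHTS = ((1.0, 0.0), (0.0, 1.0), (1.0, 1.0))


def extract_features(x):
    """Frozen feature extractor: linear projection followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    head = [0.0] * len(FROZEN_WEIGHTS)
    bias = 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = extract_features(x)
            pred = sigmoid(sum(h * f for h, f in zip(head, feats)) + bias)
            err = pred - y  # gradient of the log loss with respect to the logit
            head = [h - lr * err * f for h, f in zip(head, feats)]
            bias -= lr * err
    return head, bias


def predict(head, bias, x):
    """Return True when the head assigns the positive class to x."""
    feats = extract_features(x)
    return sigmoid(sum(h * f for h, f in zip(head, feats)) + bias) > 0.5


# Toy target task (made-up data): label 1 when the first input dominates.
TRAIN_DATA = [
    ((0.9, 0.1), 1), ((0.8, 0.3), 1), ((0.6, 0.2), 1),
    ((0.2, 0.7), 0), ((0.1, 0.9), 0), ((0.3, 0.8), 0),
]

head, bias = train_head(TRAIN_DATA)
```

In practice the frozen part would be a network such as an ImageNet-pretrained CNN and the head a new final layer. Because only the head's few parameters are learned, training on the new task tends to converge faster and needs less labeled data than training everything from scratch.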

Which of the following is a near transfer scenario?

using a mathematical procedure on a similar problem with different numbers

applying problem-solving skills learned in the real world to school problems

remembering how to solve a problem that you've already solved once before

recognizing and applying relevant knowledge from previous learning experiences when encountering new tasks

Which type of ambiguity arises when a word has multiple different meanings?

Lexical Ambiguity

Anaphoric Ambiguity

Semantic Ambiguity

Syntactic Ambiguity
