Question:

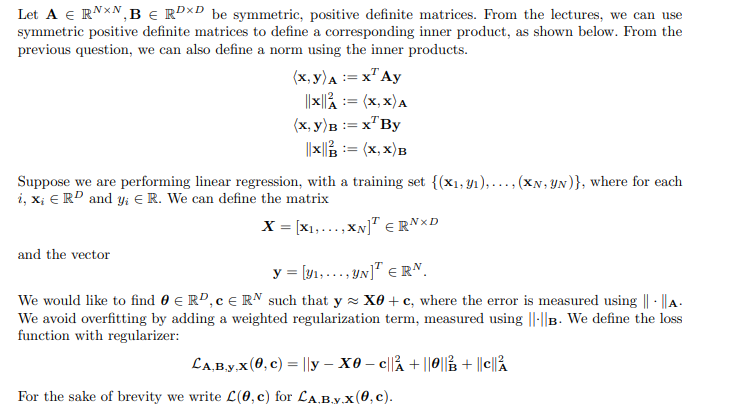

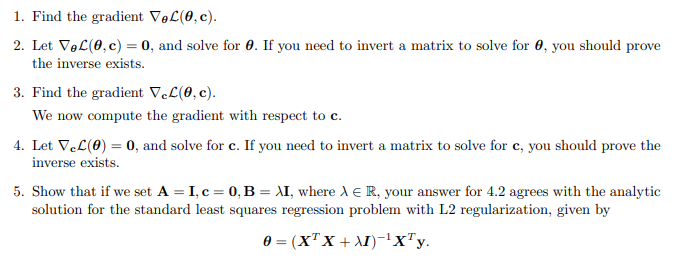

Let $A \in \mathbb{R}^{N \times N}$, $B \in \mathbb{R}^{D \times D}$ be symmetric, positive definite matrices. From the lectures, we can use symmetric positive definite matrices to define a corresponding inner product, as shown below. From the previous question, we can also define a norm using the inner products.

$$\langle x, y \rangle_A := x^T A y, \qquad \|x\|_A^2 := \langle x, x \rangle_A$$

$$\langle x, y \rangle_B := x^T B y, \qquad \|x\|_B^2 := \langle x, x \rangle_B$$

Suppose we are performing linear regression, with a training set $\{(x_1, y_1), \ldots, (x_N, y_N)\}$, where for each $i$, $x_i \in \mathbb{R}^D$ and $y_i \in \mathbb{R}$. We can define the matrix $X = [x_1, \ldots, x_N]^T \in \mathbb{R}^{N \times D}$ and the vector $y = [y_1, \ldots, y_N]^T \in \mathbb{R}^N$.

We would like to find $\theta \in \mathbb{R}^D$, $c \in \mathbb{R}^N$ such that $y \approx X\theta + c$, where the error is measured using $\|\cdot\|_A$. We avoid overfitting by adding a weighted regularization term, measured using $\|\cdot\|_B$. We define the loss function with regularizer:

$$\mathcal{L}_{A,B,y,X}(\theta, c) = \|y - X\theta - c\|_A^2 + \|\theta\|_B^2 + \|c\|_A^2$$

For the sake of brevity we write $\mathcal{L}(\theta, c)$ for $\mathcal{L}_{A,B,y,X}(\theta, c)$.

1. Find the gradient $\nabla_\theta \mathcal{L}(\theta, c)$.
2. Let $\nabla_\theta \mathcal{L}(\theta, c) = 0$, and solve for $\theta$. If you need to invert a matrix to solve for $\theta$, you should prove the inverse exists.
3. We now compute the gradient with respect to $c$. Find the gradient $\nabla_c \mathcal{L}(\theta, c)$.
4. Let $\nabla_c \mathcal{L}(\theta, c) = 0$, and solve for $c$. If you need to invert a matrix to solve for $c$, you should prove the inverse exists.
5. Show that if we set $A = I$, $c = 0$, $B = \lambda I$, where $\lambda \in \mathbb{R}$, your answer for 4.2 agrees with the analytic solution for the standard least squares regression problem with L2 regularization, given by $\theta = (X^T X + \lambda I)^{-1} X^T y$.
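As a sanity check on parts 1 to 5, the following NumPy sketch compares candidate closed forms against finite differences. It assumes the loss uses squared norms, i.e. ‖y − Xθ − c‖²_A + ‖θ‖²_B + ‖c‖²_A. The formulas in `grad_theta`, `grad_c`, `solve_theta` and `solve_c` are one derivation under that assumption, not a solution given in the source. All function names and the random test data are hypothetical.

```python
import numpy as np

def random_spd(n, rng):
    """Return a random symmetric positive definite n x n matrix (test data only)."""
    M = rng.standard_normal((n, n))
    return M @ M.T + n * np.eye(n)

def loss(theta, c, X, y, A, B):
    """Assumed loss: ||y - X theta - c||_A^2 + ||theta||_B^2 + ||c||_A^2."""
    r = y - X @ theta - c
    return r @ A @ r + theta @ B @ theta + c @ A @ c

def grad_theta(theta, c, X, y, A, B):
    """Candidate gradient w.r.t. theta: -2 X^T A (y - X theta - c) + 2 B theta."""
    return -2 * X.T @ A @ (y - X @ theta - c) + 2 * B @ theta

def grad_c(theta, c, X, y, A, B):
    """Candidate gradient w.r.t. c: -2 A (y - X theta - c) + 2 A c."""
    return -2 * A @ (y - X @ theta - c) + 2 * A @ c

def solve_theta(c, X, y, A, B):
    """Candidate stationary point in theta: (X^T A X + B)^{-1} X^T A (y - c).

    X^T A X is positive semidefinite and B is positive definite, so their
    sum is positive definite and the linear system has a unique solution.
    """
    return np.linalg.solve(X.T @ A @ X + B, X.T @ A @ (y - c))

def solve_c(theta, X, y):
    """Candidate stationary point in c: (y - X theta) / 2, using that A is invertible."""
    return 0.5 * (y - X @ theta)

def numerical_grad(f, v, eps=1e-6):
    """Central finite-difference gradient of a scalar function f at v."""
    g = np.zeros_like(v)
    for i in range(v.size):
        e = np.zeros_like(v)
        e[i] = eps
        g[i] = (f(v + e) - f(v - e)) / (2 * eps)
    return g

# Small random problem instance (hypothetical sizes and seed).
rng = np.random.default_rng(0)
N, D = 6, 3
X = rng.standard_normal((N, D))
y = rng.standard_normal(N)
A, B = random_spd(N, rng), random_spd(D, rng)
theta, c = rng.standard_normal(D), rng.standard_normal(N)

# Parts 1 and 3: analytic gradients should match finite differences.
num_g_theta = numerical_grad(lambda t: loss(t, c, X, y, A, B), theta)
num_g_c = numerical_grad(lambda v: loss(theta, v, X, y, A, B), c)
print(np.allclose(grad_theta(theta, c, X, y, A, B), num_g_theta, rtol=1e-5, atol=1e-4))
print(np.allclose(grad_c(theta, c, X, y, A, B), num_g_c, rtol=1e-5, atol=1e-4))

# Part 5: with A = I, c = 0, B = lam * I the candidate reduces to ridge regression.
lam = 0.7
ridge = np.linalg.solve(X.T @ X + lam * np.eye(D), X.T @ y)
special = solve_theta(np.zeros(N), X, y, np.eye(N), lam * np.eye(D))
print(np.allclose(ridge, special))
```

A numerical check like this does not replace the proofs asked for in parts 2 and 4, but it can catch sign or transpose errors before writing them up.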
