Question: Let's consider a simplified version of question's 1 grid world where the agent gets a reward of +1 when it lands on state A and

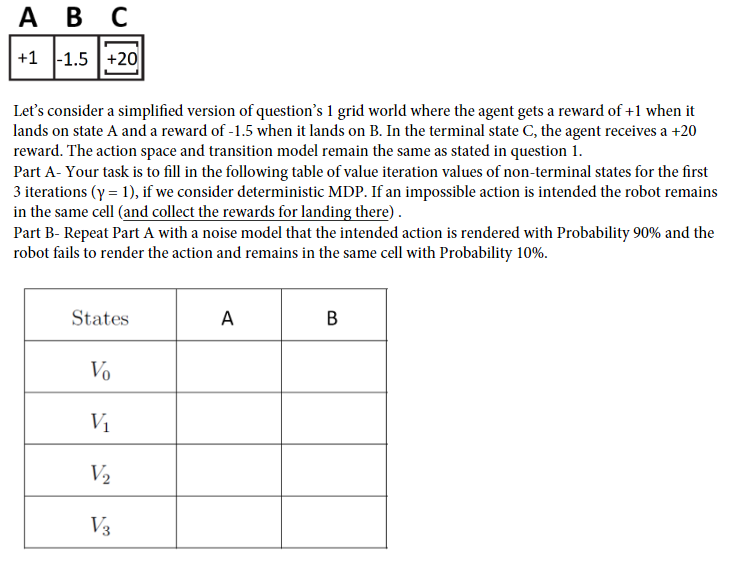

Let's consider a simplified version of question's 1 grid world where the agent gets a reward of +1 when it lands on state A and a reward of 1.5 when it lands on B. In the terminal state C, the agent receives a+20 reward. The action space and transition model remain the same as stated in question 1 . Part A- Your task is to fill in the following table of value iteration values of non-terminal states for the first 3 iterations (=1), if we consider deterministic MDP. If an impossible action is intended the robot remains in the same cell (and collect the rewards for landing there) . Part B- Repeat Part A with a noise model that the intended action is rendered with Probability 90% and the robot fails to render the action and remains in the same cell with Probability 10%

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts