Question:

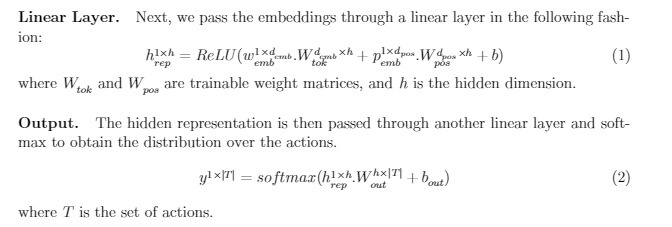

Linear Layer. Next, we pass the embeddings through a linear layer in the following fashion:

$$
h_{1\times h} = \mathrm{ReLU}\left(w_{1\times d_{emb}} \cdot W^{emb}_{d_{emb}\times h} + p_{1\times d_{pos}} \cdot W^{pos}_{d_{pos}\times h} + b\right) \tag{1}
$$

where $W^{emb}$ and $W^{pos}$ are trainable weight matrices, and $h$ is the hidden dimension.

Output. The hidden representation is then passed through another linear layer and a softmax to obtain the distribution over the actions:

$$
y_{1\times |T|} = \mathrm{softmax}\left(h_{1\times h} \cdot W_{h\times |T|} + b_{out}\right) \tag{2}
$$

where $T$ is the set of actions.
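To make the shapes in Eqs. (1) and (2) concrete, here is a minimal forward-pass sketch in plain Python. It assumes the row-vector convention implied by the subscripts ($1\times d$ vectors multiplied on the right by $d\times h$ matrices). The names `w`, `p`, `W_emb`, `W_pos`, `b`, `W_out`, `b_out`, the small dimensions, and the random initialization are hypothetical choices for illustration, not the original author's code.

```python
import math
import random

def matvec(x, W):
    """Row vector x (length m) times matrix W (m rows x n cols) -> length-n list."""
    n_cols = len(W[0])
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(n_cols)]

def relu(v):
    """Element-wise ReLU."""
    return [max(0.0, a) for a in v]

def softmax(v):
    """Softmax with the max subtracted first for numerical stability."""
    m = max(v)
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]

def forward(w, p, W_emb, W_pos, b, W_out, b_out):
    # Eq. (1): h = ReLU(w . W^emb + p . W^pos + b), a 1 x h hidden vector
    a = matvec(w, W_emb)
    c = matvec(p, W_pos)
    h = relu([ai + ci + bi for ai, ci, bi in zip(a, c, b)])
    # Eq. (2): y = softmax(h . W + b_out), a 1 x |T| distribution over actions
    logits = [li + bo for li, bo in zip(matvec(h, W_out), b_out)]
    return h, softmax(logits)

def rand_matrix(rows, cols, rng):
    """Hypothetical small uniform initialization, for illustration only."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

# Hypothetical sizes: d_emb = 4, d_pos = 2, hidden dimension h = 3, |T| = 5 actions
rng = random.Random(0)
d_emb, d_pos, hidden, n_actions = 4, 2, 3, 5
w = [rng.uniform(-1, 1) for _ in range(d_emb)]
p = [rng.uniform(-1, 1) for _ in range(d_pos)]
W_emb = rand_matrix(d_emb, hidden, rng)
W_pos = rand_matrix(d_pos, hidden, rng)
b = [0.0] * hidden
W_out = rand_matrix(hidden, n_actions, rng)
b_out = [0.0] * n_actions

h, y = forward(w, p, W_emb, W_pos, b, W_out, b_out)
print(len(h), len(y))     # 3 5
print(round(sum(y), 6))   # 1.0
```

The two embedding products are computed separately and summed before the ReLU, mirroring Eq. (1) term by term. Equivalently, one could concatenate `w` and `p` and stack `W_emb` over `W_pos` into a single $(d_{emb}+d_{pos})\times h$ matrix; both forms give the same pre-activation.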
