Question: (Machine Learning) In Python3 Don't use preprocessing from sklearn ### Batch gradient descent def batch_grad_descent (x, y, alpha-0.1, num step-1000, grad check-False): In this question

(Machine Learning) In Python3

Don't use preprocessing from sklearn

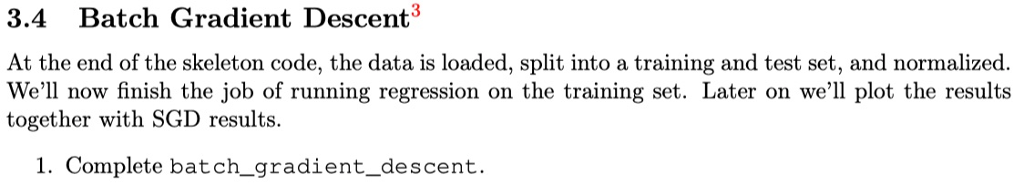

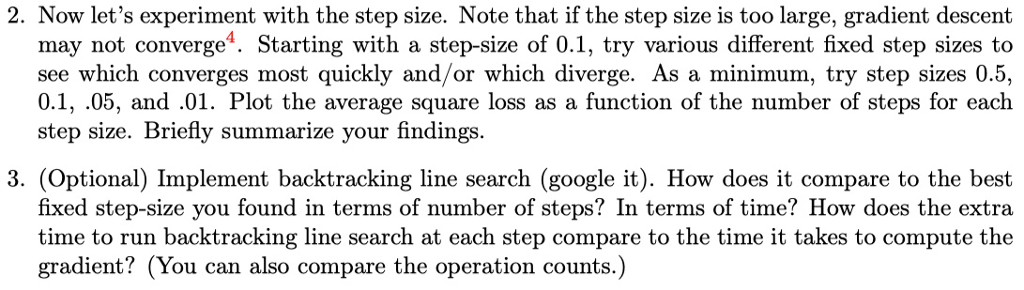

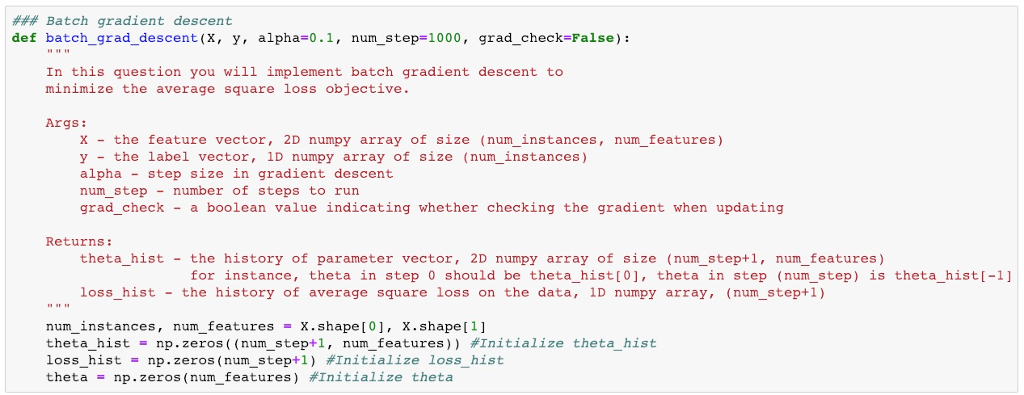

\### Batch gradient descent def batch_grad_descent (x, y, alpha-0.1, num step-1000, grad check-False): In this question you will implement batch gradient descent to minimize the average square loss objective Args: x - the feature vector, 2D numpy array of size (num_instances, num_features) y the label vector, 1D numpy array of size (numinstances) alpha step size in gradient descent num_step number of steps to run grad_check - a boolean value indicating whether checking the gradient when updating Returns: theta_hist - the history of parameter vector, 2D numpy array of size (num_step+1, num features) for instance, theta in step 0 should be theta-hist[0], theta in step (num-step ) is theta-hist[-1] loss_hist- the history of average square loss on the data, 1D numpy array, (num_ step+1) num_instances, num_features-X.shape[0], x.shapel1 theta-hist np .zeros ((num-step+1, num-features)) #Initialize loss-hist - np .zeros (num. Step+1) #Initialize loss-hist theta np.zeros (num-features ) #Initialize theta theta-hist

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts