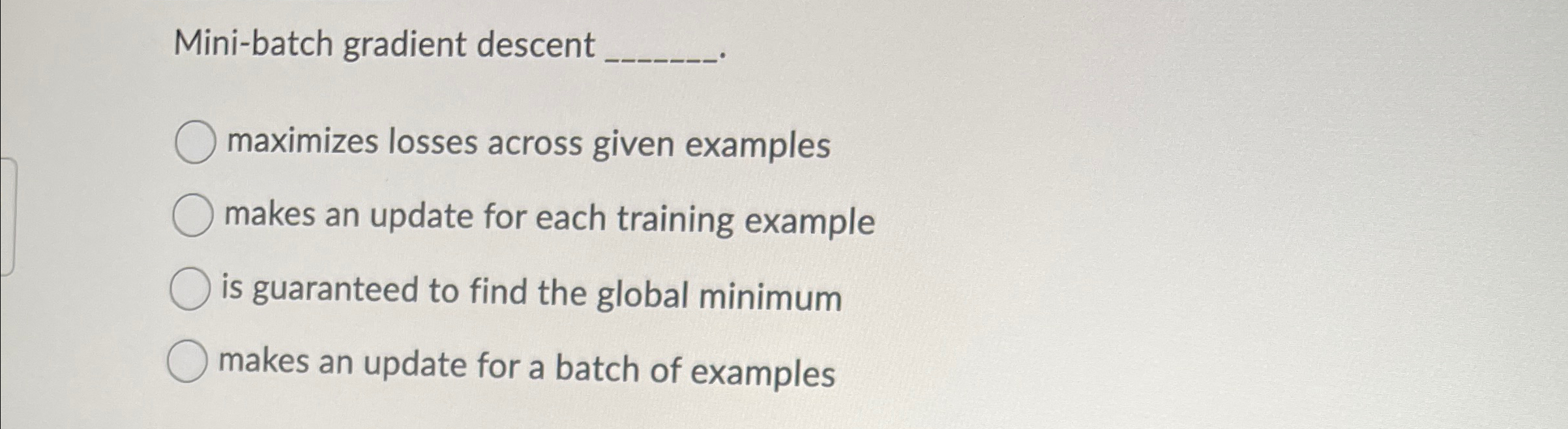

Question: Mini-batch gradient descent

maximizes losses across given examples

makes an update for each training example

is guaranteed to find the global minimum

makes an update for a batch of examples
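The correct option is the last one: mini-batch gradient descent makes one parameter update per batch of examples. It minimizes the loss rather than maximizing it. Updating once per training example is stochastic gradient descent, which is the special case of batch size 1. In general it is not guaranteed to find the global minimum, particularly on non-convex losses. The sketch below is a hypothetical illustration, not taken from the source. It assumes a one-feature linear model y ≈ w·x + b trained with mean squared error, and the names `minibatch_gd`, `mse`, `XS` and `YS` are illustrative. It counts parameter updates to show that there is one step per batch.

```python
import random

# Hypothetical toy data: y = 3x + 1 exactly, for x = 0.0, 0.1, ..., 1.9 (20 examples).
XS = [i / 10 for i in range(20)]
YS = [3 * x + 1 for x in XS]


def mse(xs, ys, w, b):
    """Mean squared error of the linear model y ~ w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def minibatch_gd(xs, ys, batch_size, lr=0.05, epochs=300, seed=0):
    """Minimize MSE with mini-batch gradient descent (illustrative sketch).

    Returns (w, b, updates), where updates is the total number of
    parameter updates: one per batch, i.e. ceil(n / batch_size) per epoch.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    updates = 0
    for _ in range(epochs):
        rng.shuffle(indices)  # a fresh random split into batches each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the MSE averaged over this batch only.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            # Step *against* the gradient: this minimizes the loss.
            w -= lr * grad_w
            b -= lr * grad_b
            updates += 1  # exactly one update per batch
    return w, b, updates


if __name__ == "__main__":
    w, b, n_updates = minibatch_gd(XS, YS, batch_size=4)
    print(f"w={w:.3f}, b={b:.3f}, updates={n_updates}")
```

With 20 examples and `batch_size=4`, each epoch performs 5 updates. Setting `batch_size=1` gives 20 updates per epoch, which is stochastic gradient descent. Setting `batch_size=20` gives 1 update per epoch, which is full-batch gradient descent. Mini-batch gradient descent sits between these two extremes.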
